Douglas Poland (13-ERD-046)

Abstract

Video data are ubiquitous and growing in volume in many mission areas, but there are no tools for dealing with collections of unannotated video data. Filtering, characterizing, and querying these collections is a pressing problem that is currently intractable. Video data pose a daunting challenge because, unlike text, there are no unambiguous semantic primitives (i.e., words) that can be readily extracted, analyzed, presented to, and selected by users. Video content does contain many recognizable multimodal "percepts" (objects of perception), such as the appearance, dynamics, and sounds associated with an event like "car stops, person gets out," but current approaches are too coarse or too unimodal to support these percepts. Our research was directed toward the development of multimodal percepts and methods for exploiting them to address current video representation and retrieval challenges. We began by investigating the rapidly changing state of the art in visual, motion, and audio feature extraction research. In collaboration with the International Computer Science Institute in Berkeley, California, we explored various approaches based on a deep neural network for learning audio representations, and conducted audio-only event-detection experiments. We developed a novel graph-based approach for fusing visual features with short-time motion and audio features, creating a kind of multimodal percept, and performed video triage and query experiments employing graph analytics on this feature graph. Finally, we teamed with the International Computer Science Institute and Yahoo! Labs to assemble, curate, and release YFCC-100M, the largest public multimedia research database to date, containing 99 million images, 800 thousand videos, and associated Flickr meta-data (data about data).1 This data set promises to be a key enabler for multimedia research for years to come and has already generated over 50 citations.

Background and Research Objectives

The intelligence community requires tools to index and query ever-larger collections of disparate video and determine the most relevant videos with minimal missed detections (false negatives). In contrast, market-driven video indexing and retrieval tools emphasize popularity and minimizing false positives. For this project, we proposed developing a hybrid content- and concept-based approach with the expressive power of concept-based approaches (videos indexed via labels) while retaining the generality and novelty of content-based approaches (retrieving videos that share similar features typically based on image content, motion descriptors, or audio).

This project began just as the deep learning revolution was taking off. A publication on using deep convolutional neural networks (a type of feed-forward artificial neural network where the individual neurons are tiled in such a way that they respond to overlapping regions in the visual field) to solve the ImageNet Large Scale Visual Recognition Challenge (a jointly sponsored competition for object detection and image classification at large scale) showed the potential of deeply learned features to solve very complex pattern-recognition problems.2,3 In contrast to engineered features (interest points, texture descriptors, color histograms, etc.), deep neural networks learn hierarchical representations from large data samples. Not only has this approach gone on to rival or exceed human performance on the ImageNet Challenge, but it has also been shown to learn features that correspond to visual concepts at various levels, and it excels at transfer learning tasks.4,5 Extending this approach to video, however, presents significant challenges. Image data are static and two-dimensional, and the subject of the image tends to be centered in the field of view (in the ImageNet Challenge, the image subject is both centered and closely cropped). In video, as objects move they change in position, rotation, scale, obscuration, and lighting, and may even leave and re-enter the field of view. In addition, the environment may undergo similar changes, the camera often moves, and there is audio content that is not always connected to the visual content. These challenges call for multimodal deep learning approaches that combine multiple deep neural networks and incorporate attention mechanisms.

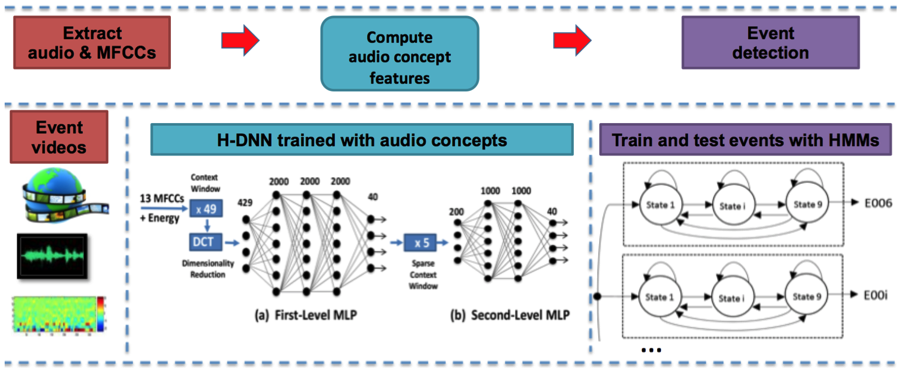

Our overall objective was to take the state of the art in audio, visual, and motion feature extraction as a starting point and to explore how we could learn and leverage multimodal percepts to enable interactive triage and query of unannotated video data. The primary strategy was to experiment with multimodal feature graph representations, exploring different graph construction and analytic methods. We began with importing and extending the International Computer Science Institute's expertise on learning audio features and applying them to video event detection and geographical-location challenges. We collaboratively explored a variety of architectures with the goal of learning audio percepts that were generally useful in video triage and query. We improved both the accuracy and efficiency of these audio feature algorithms as measured on audio-only event detection (Figures 1 and 2). Other outcomes included transfer of the International Computer Science Institute's audio feature codes to LLNL, and audio-processing libraries developed for the University of California, Berkeley, deep learning network called Caffe (Convolutional Architecture for Fast Feature Embedding).6

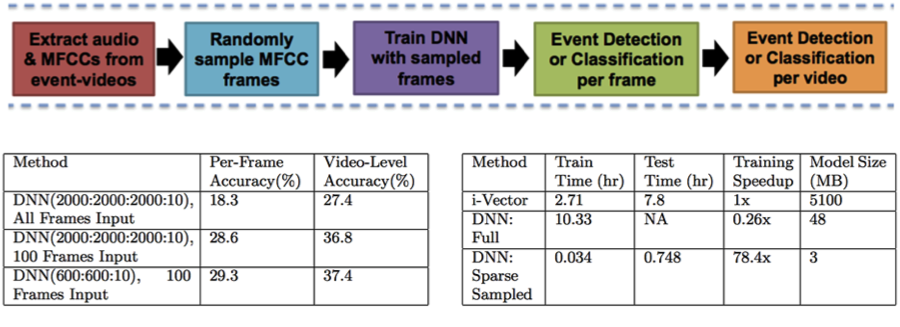

Inspired by visual convolutional neural-network successes, we next explored using deep neural networks without hidden Markov models for audio-based video event detection (see Figure 2). We achieved a 10% improvement in event-classification accuracy by optimizing sampling and deep neural-network topology, with a 200x reduction in the number of input frames that resulted in a 16x reduction in the size of the deep neural network required for maximum accuracy.7 The combination of input frame and deep neural-network size reduction gave an overall 300x and 78.4x speedup in training time compared to the full-audio deep neural-network and i-vector (speech processing) systems, respectively. When evaluated on the newly released YLI-MED data set (index of videos from the Yahoo Flickr image- and video-hosting Website), our deep neural-network system with sparse frame sampling showed higher accuracy than the baseline i-vector results, at a significantly lower computation cost. These improvements in speed and accuracy are relevant for processing videos at a larger scale, as well as for potential deployment of video analysis on mobile platforms.

Our original visual and motion strategy was to develop a multimodal appearance and track descriptor framework around tracking interest points. Progress was positive but slow. Then, during the first year of this project, deep convolutional neural networks changed the game in visual recognition because of their robustness and success. Our strategy changed to learning how best to apply and evaluate this approach for the purpose of discovering multimodal percepts. We collaborated with researchers who were exploring convolutional neural-network code bases, architectures, and inner workings from a statistical machine learning point of view. For the purpose of our video applications, we found that the differences in performance between particular deep neural-network architectures were a second-order effect compared to the effects of the unsolved challenges, outlined earlier, in extending classification research from images to video. Therefore, we used the simpler and more explainable Krizhevsky deep convolutional neural network (“AlexNet”) to generate visual features.

At the same time that deep convolutional neural networks emerged, an engineered motion feature set called dense trajectory features became established as best in class on video event-classification benchmarks.8 Dense trajectory features track interest point trajectories, then construct a complex description of the optical flow and visual appearance around each trajectory. Though very expensive to compute and not terribly elegant, they were clearly the best at capturing motion percepts. We tabled our motion feature research and adopted dense trajectory features as our motion feature baseline.

Our final objective was to develop a multimodal graph representation and query framework. We leveraged our team and the Laboratory’s expertise with graph representations and analytics to develop a flexible graph-based interactive framework that advances the state of content-based video triage and query. As we worked, an opportunity to release and develop a new data set (the YFCC-100M) emerged. Because of the shortage of open video data sets and the lack of annotations (the labels are limited to terse "video level" event descriptors and sparse noisy meta-data), this became a fairly high priority, and we have already had an impact on the community with the YFCC-100M data set. We also found that we needed to perform developmental work on an interface for viewing, vetting, and tagging the results of our triage and query-by-example experiments on this large set of videos in order to support our research.

Scientific Approach and Accomplishments

Audio Feature Learning

Our collaboration with the International Computer Science Institute was focused on audio feature learning and percept discovery. The approach was to learn audio “concepts” using deep neural-network architectures and video-level event tags for supervision; we used both the TRECVID MED 2013 corpus and the YLI-MED open source version of that corpus created by the institute.9,10 Both corpora contain video files tagged with a single class like Flash mob, Parade, or Attempting a board trick. While the concept-based approach has been useful in image detection, audio concepts have generally not surpassed the performance of low-level audio features such as mel-frequency cepstral coefficients as a representation of the unstructured acoustic elements of video events. (In sound processing, the mel-frequency cepstrum is a representation of the short-term power spectrum of a sound.) We explored whether audio-concept-based systems could benefit from temporal information by exploiting the temporal correlation of audio at two levels. The first level involves learning audio concepts that utilize nearby temporal context through implementation of a hierarchical deep neural network (see Figure 1). At the second level, we use hidden Markov models to describe the continuous and nonstationary characteristics of the audio signal throughout the video. Experiments showed that a hidden Markov model system based on audio-concept features can perform competitively when compared with a system based on mel-frequency cepstral coefficients.11

Visual and Motion Feature Learning

As we began exploring deep convolutional neural networks, it quickly became clear that they produced superior visual features, so we abandoned our development of visual-motion features based on engineered interest points. Our experiments confirmed convolutional neural networks had feature superiority for object classification using ImageNet data, scene classification using SUN data, and also for image tag propagation.12,13 For the purposes of multimodal video percepts, these features would ideally carry spatio-temporal attributes. This is an active research topic, and we began to experiment with hybrid deep neural networks that follow convolutional layers with recurrent layers. For the recurrent layers, we chose the long short-term memory layer, first developed for speech processing but later shown useful for learning spatial relationships at multiple scales.14,15 This approach achieved less than 1% error rate on the Mixed National Institute of Standards and Technology database character-recognition challenge with a small network (two convolutional layers with 64 3 x 3 filters each and one long short-term memory layer with 192 cells). However, extending to user-generated video, with its variability in size, scale, motion, and temporal persistence of objects, requires incorporation or learning of spatio-temporal attention mechanisms (as well as a set of annotated multimodal percepts for evaluating performance). These are substantial undertakings that were beyond the scope of this project.

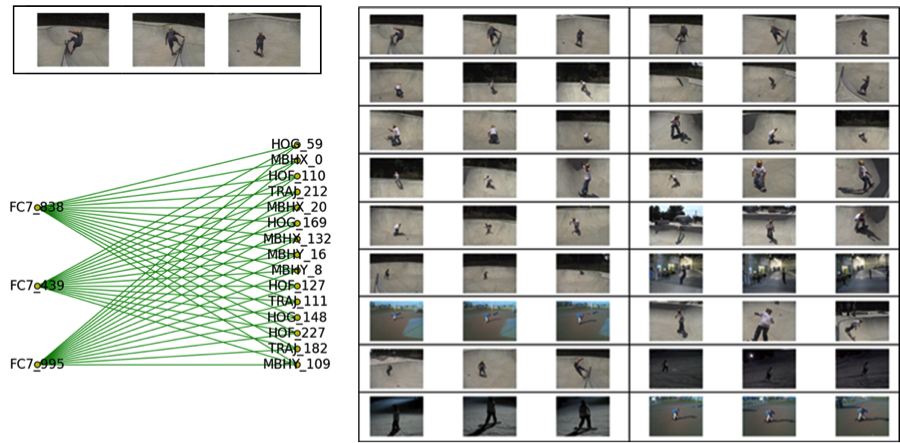

For our multimodal feature graph, we use AlexNet convolutional neural-network visual features computed over entire frames. We experimented with using various convolutional neural-network layers as the inputs to the multimodal feature graph, finding the best results with the penultimate layer (fully connected layer 7, or fc7). This provides, for each frame, a 4,096-element vector that contains a conceptually distributed spatially aggregated representation of the visual content. We cluster these over the test corpus using k-means to define “visual words” for input to our graph.
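The quantization step described above can be illustrated with a minimal sketch. It assumes the cluster centers have already been computed by k-means and that a frame is mapped to its nearest centers by Euclidean distance; the function name `top_k_words`, the two-dimensional toy vectors (standing in for 4,096-element fc7 vectors), and the choice of distance are illustrative assumptions, not details taken from the project code.

```python
import math

def top_k_words(feature, centers, k=3):
    """Return the indices of the k cluster centers nearest to `feature`.

    Each index plays the role of a "visual word". Euclidean distance is an
    assumption made for this sketch.
    """
    distances = [(math.dist(feature, center), idx)
                 for idx, center in enumerate(centers)]
    return [idx for _, idx in sorted(distances)[:k]]

# Toy 2-D stand-ins for fc7 vectors and k-means cluster centers.
centers = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0)]
frame_feature = (0.9, 0.2)

words = top_k_words(frame_feature, centers)
print(words)
```

Returning several nearest words rather than only the single closest one is a soft assignment, which trades some precision for recall.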

Motion information is captured by the dense trajectory features, which achieved top performance on several video-level event-classification benchmarks. Dense trajectory features begin with dense optical flow and construct trajectories from tracked interest points. Along these trajectories they compute and concatenate histograms of optical flow, x and y motion-boundary histograms (derivatives of the flow fields), histograms of pixel gradients, and also trajectory shape information. The raw features form 192-element vectors, one for each trajectory, which are then clustered over the corpus to compute a dictionary of cluster centers (“motion words”). Motion word histograms are then computed over each entire video. Because we want finer timescales than video-level summaries, we instead compute each of the dense trajectory feature types (x and y motion boundary histograms, histograms of pixel gradients, histograms of optical flow, and trajectory shape information) over short time intervals and define clusters for each type, forming dictionaries for five types of motion words for input to our graph.

Multimodal Feature Graph

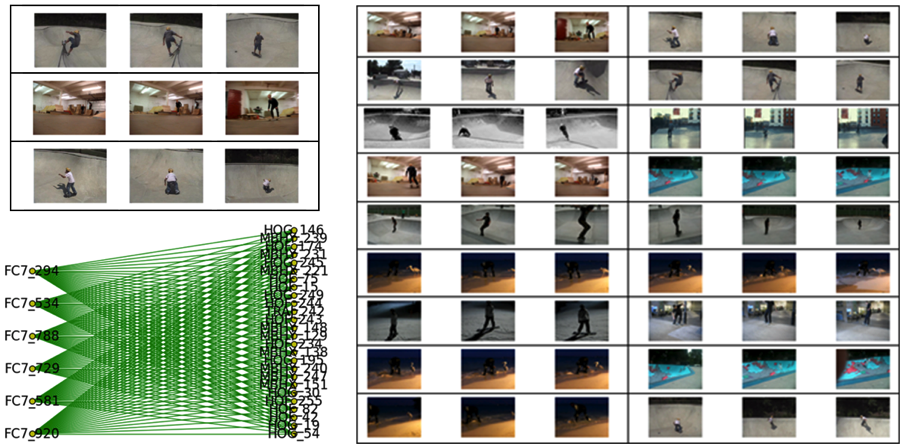

Using the YLI-MED subset of YFCC-100M, features are computed over non-overlapping 60-frame (~2 s) intervals we call “clips.” All feature nodes of different modalities that co-occur within a clip are joined via edges to form a multimodal feature graph (see Figure 3). Each edge is multimodal and has, as attributes, the number of clips where that edge occurs and a pointer to each of those clips. These lists typically contain multiple clips from the same video. Because we want to emphasize recall when we query the graph, we include the top three nodes of each type in the subgraph for each clip. Thus each clip is represented by a subgraph with 45 edges—3 convolutional neural-network feature nodes connected to each of 15 dense trajectory feature nodes. Note that adding audio features led to mixed results—for some classes it tends to help, for others it hurts. The experimental results shown here do not include audio features.
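The graph construction described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: node labels such as `cnn:4` and `hof:0`, the clip identifiers, and the helper names `toy_clip` and `build_feature_graph` are hypothetical, and the edge attributes are stored simply as a list of clip identifiers (its length is the occurrence count).

```python
from collections import defaultdict
from itertools import product

# Hypothetical labels for the five motion-word types named in the text.
MOTION_TYPES = ["mbhx", "mbhy", "hog", "hof", "traj"]

def toy_clip(visual_words, motion_offset):
    """Build a toy clip: 3 visual nodes and 3 nodes for each motion type."""
    visual = [f"cnn:{w}" for w in visual_words]
    motion = [f"{t}:{motion_offset + j}" for t in MOTION_TYPES for j in range(3)]
    return {"visual": visual, "motion": motion}

def build_feature_graph(clips):
    """Map each (visual node, motion node) edge to the clips where it occurs."""
    edges = defaultdict(list)
    for clip_id, nodes in clips.items():
        for v, m in product(nodes["visual"], nodes["motion"]):
            edges[(v, m)].append(clip_id)
    return edges

clips = {
    "video1/clip0": toy_clip([4, 7, 9], 0),
    "video1/clip1": toy_clip([4, 7, 2], 0),
}
graph = build_feature_graph(clips)

# Each clip contributes 3 x 15 = 45 edges; shared edges accumulate clip pointers.
edges_in_clip0 = sum(1 for ids in graph.values() if "video1/clip0" in ids)
print(edges_in_clip0, len(graph), graph[("cnn:4", "mbhx:0")])
```

Keeping the clip pointers on the edges means a ranked set of edges can be turned directly into a ranked list of clips.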

We interact with this graph in two ways. First, given one or more clips that define our query, we perform a random walk on the graph from each of the nodes in those clips and return the highest overall ranked nodes and edges using an algorithm called the random walk subgraph. This random walk algorithm is a truncated approximation to the CenterPiece subgraph algorithm.16 The first step is to run random walk with restart (also known as PageRank17) starting at each query node, which scores neighboring nodes with respect to each query node (this is “personalized PageRank”). The second step combines these scores to identify a subgraph that often includes nodes not directly represented in the query set, but related via some number of co-occurrence hops. The CenterPiece subgraph algorithm performs a more exhaustive random walk and more sophisticated node ranking, but is more computationally expensive; therefore we have deferred adoption pending a programmatic need. For the third and last step, we return a list of clips ranked in order of their strength of overlap with the computed subgraph.
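The three query steps can be sketched on a toy graph as follows. This is an illustration under stated assumptions rather than the project's implementation: the restart probability of 0.15, the fixed iteration count, multiplying the per-query scores to combine them, keeping the top four nodes as the subgraph, and scoring a clip by the summed scores of its nodes in that subgraph are all choices made here for brevity. Node and clip names are hypothetical.

```python
def random_walk_with_restart(adj, start, restart=0.15, iters=50):
    """Score nodes by visit probability for a walk that restarts at `start`."""
    scores = {node: 0.0 for node in adj}
    scores[start] = 1.0
    for _ in range(iters):
        nxt = {node: 0.0 for node in adj}
        for node, mass in scores.items():
            neighbors = adj[node]
            if not neighbors:  # dangling node: send its mass back to start
                nxt[start] += (1.0 - restart) * mass
                continue
            share = (1.0 - restart) * mass / len(neighbors)
            for nb in neighbors:
                nxt[nb] += share
        nxt[start] += restart
        scores = nxt
    return scores

def combine(score_maps):
    """Multiply per-query scores (an assumed, simple combination rule)."""
    combined = {}
    for node in score_maps[0]:
        value = 1.0
        for scores in score_maps:
            value *= scores[node]
        combined[node] = value
    return combined

def rank_clips(clip_nodes, combined, top_n=4):
    """Rank clips by summed score of their nodes inside the top-n subgraph."""
    subgraph = set(sorted(combined, key=combined.get, reverse=True)[:top_n])
    overlap = {clip: sum(combined[n] for n in nodes if n in subgraph)
               for clip, nodes in clip_nodes.items()}
    return sorted(overlap, key=overlap.get, reverse=True)

# Toy undirected co-occurrence graph; "cnn:c" and "mot:w" are disconnected.
adj = {
    "cnn:a": ["mot:x", "mot:y"], "cnn:b": ["mot:y", "mot:z"], "cnn:c": ["mot:w"],
    "mot:x": ["cnn:a"], "mot:y": ["cnn:a", "cnn:b"],
    "mot:z": ["cnn:b"], "mot:w": ["cnn:c"],
}
clip_nodes = {
    "clip0": ["cnn:a", "mot:x", "mot:y"],
    "clip1": ["cnn:b", "mot:y", "mot:z"],
    "clip2": ["cnn:c", "mot:w"],
}
query_nodes = ["cnn:a", "mot:x"]
per_query = [random_walk_with_restart(adj, q) for q in query_nodes]
ranked = rank_clips(clip_nodes, combine(per_query))
print(ranked)
```

In this toy case, `clip1` ranks above `clip2` even though it shares only one node with the query, because its other nodes are reachable through co-occurrence hops.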

In our second method for interacting with this graph, given a set of clips returned from a random walk subgraph query, we apply latent Dirichlet allocation, an unsupervised clustering technique. For this technique, we treat each clip as a “bag of edges,” analogous to running latent Dirichlet allocation to generate topics from a collection of documents (clips = documents and edges = words). Note that each “word” here is defined by its multimodal endpoint nodes, so we obtain multimodal topical clusters. Latent Dirichlet allocation computes the importance of each word in each topic and the multimodal topics are weighted edge lists (subgraphs), so we can submit a topic as a refined random walk subgraph query. This provides a mechanism to perform result set expansion or splitting in an iterative ad hoc fashion until one obtains a model that combines features in the desired manner (as evidenced by the ranked results) without having to specify the features. Figures 3–5 illustrate how this enables interactive query by example, obtaining a precision of 98% for the top 200 results, on a skateboard trick query, while also bringing attention to other types of board tricks (such as wakeboard and snowboard).
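The "bag of edges" analogy can be made concrete with a small sketch that turns clips into a document-term count matrix, which is the usual input form for a latent Dirichlet allocation implementation. The helper names `bag_of_edges` and `count_matrix` and the node labels are hypothetical, and the topic-model fitting itself is deliberately left out.

```python
from collections import Counter
from itertools import product

def bag_of_edges(visual_nodes, motion_nodes):
    """Treat a clip as a document whose words are its multimodal edges."""
    return Counter(product(visual_nodes, motion_nodes))

def count_matrix(bags):
    """Return the edge vocabulary and one row of edge counts per clip."""
    vocab = sorted(set().union(*bags))
    index = {edge: i for i, edge in enumerate(vocab)}
    rows = []
    for bag in bags:
        row = [0] * len(vocab)
        for edge, count in bag.items():
            row[index[edge]] = count
        rows.append(row)
    return vocab, rows

# Two toy clips returned by a query (labels are illustrative only).
bags = [
    bag_of_edges(["cnn:4", "cnn:7"], ["hof:1"]),
    bag_of_edges(["cnn:7"], ["hof:1", "hof:2"]),
]
vocab, rows = count_matrix(bags)
print(vocab)
print(rows)
```

Because each vocabulary entry is an edge, a topic learned over these rows is a weighted edge list, which is why it can be resubmitted as a subgraph query.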

YFCC-100M

Along with our partners at the International Computer Science Institute and Yahoo! Research, we released YFCC-100M, the largest fully open multimedia data corpus to date. Amazon Web Services has since joined this effort and will be hosting the data and computed features. We used a grant of 3 million central-processing-unit hours on LLNL's Catalyst (the 150 teraFLOP/s high-performance computing cluster developed by a partnership of Cray, Intel, and Lawrence Livermore) to compute features for our own and others’ use, and the data set has been cited over 50 times to date. We began a dialog with the broader community and organized a workshop for the ACM Multimedia 2015 conference to highlight our and others’ work on the corpus and to build momentum for generating new annotations and challenge problems that will enable a leap into fully spatio-temporal multimodal deep learning.18

Impact on Mission

This project supports the Laboratory’s national security mission and the core competency in high-performance computing, simulation, and data science by developing the foundations of a new capability for indexing and querying video in ways that provide human analysts with the most relevant results and minimal missed detections.

Conclusion

Our research has had significant impact, helping expand programmatic opportunities and strengthen programmatically important technical collaborations. We developed in-house audio, visual, and motion feature learning expertise and code libraries. We have performed foundational work on joint visual-motion deep learning architectures and established a strategic relationship with the International Computer Science Institute. Furthermore, we made great progress and contributions in leveraging multimodal percepts and developing a research prototype that demonstrates a capability for interactive content-based video triage and query, laying a foundation for specific programmatic applications. We have also helped to lay the foundations for ongoing research in spatio-temporal multimodal deep learning, both in terms of algorithm research and, of equal or greater importance, initiating an effort to generate the data needed to support it.

References

- Thomee, B., et al., “The new data and new challenges in multimedia research.” CoRR, arXiv:1503.01817 (2015).

- Krizhevsky, A., I. Sutskever, and G. Hinton, “ImageNet classification with deep convolutional neural networks.” Advances in Neural Information Processing Systems 25 (NIPS 2012), p. 1106 (2012).

- Russakovsky, O., et al., “ImageNet large scale visual recognition challenge.” CoRR, arXiv:1409.0575 (2014).

- Simonyan, K., and A. Zisserman, “Very deep convolutional networks for large-scale image recognition.” CoRR, arXiv:1409.1556v6 (2014).

- Zhou, B., et al., “Object detectors emerge in deep scene CNNs.” CoRR, arXiv:1412.6856v2 (2014).

- Jia, Y., et al., “Caffe: Convolutional architecture for fast feature embedding.” CoRR, arXiv:1408.5093 (2014).

- Ashraf, K., et al., Audio-based multimedia event detection with DNNs and sparse sampling. ACM Intl. Conf. Multimedia Retrieval (ICMR 2015), Shanghai, China, June 23–26, 2015. LLNL-CONF-679325.

- Wang, H., et al., “Action recognition by dense trajectories.” IEEE Conf. Computer Vision and Pattern Recognition (CVPR 2011), p. 3169 (2011).

- Over, P., et al., “TRECVID 2013—An overview of the goals, tasks, data, evaluation mechanisms and metrics.” Proc. TRECVID 2013. National Institute of Standards and Technology, USA (2013).

- Bernd, J., et al., The YLI-MED corpus: Characteristics, procedures, and plans. International Computer Science Institute. TR-15-001, arXiv:1503.04250 (2015).

- Elizalde, B., et al., Audio-concept features and hidden Markov models for multimedia event detection. Interspeech Workshop on Speech, Language and Audio in Multimedia (SLAM 2014), Penang, Malaysia, Sept. 11–12, 2014.

- Xiao, J., et al., SUN database: Large-scale scene recognition from abbey to zoo. IEEE Conf. Computer Vision and Pattern Recognition (CVPR 2010), San Francisco, CA, June 13–18, 2010.

- Mayhew, M., and K. Ni, Assessing semantic information in convolutional neural network representations of images via image annotation. (2015). LLNL-CONF-670551.

- Hochreiter, S., and J. Schmidhuber, “Long Short-Term Memory.” Neural Comput. 9(8), 1735 (1997).

- Graves, A., S. Fernandez, and J. Schmidhuber, “Multi-dimensional recurrent neural networks.” Artificial Neural Networks—ICANN 2007, p. 549 (2007).

- Tong, H., and C. Faloutsos, Center-piece subgraphs: Problem definition and fast solutions. ACM SIGKDD Intl. Conf. Knowledge Discovery and Data Mining (KDD 2006), Philadelphia, PA, Aug. 20–23, 2006.

- Brin, S., and L. Page, “The anatomy of a large-scale hypertextual Web search engine.” Proc. 7th Intl. Conf. World Wide Web (WWW7), p. 107 (1998).

- Friedland, G., C. Ngo, and D. A. Shamma, Proc. 2015 Workshop Community-Organized Multimodal Mining: Opportunities for Novel Solutions. Association for Computing Machinery, New York, NY (2015).

Publications and Presentations

- Ashraf, K., et al., Audio-based multimedia event detection with DNNs and sparse sampling. ACM Intl. Conf. Multimedia Retrieval (ICMR 2015), Shanghai, China, June 23–26, 2015. LLNL-CONF-679325.

- Bernd, J., et al., Kickstarting the commons: The YFCC100M and the YLI corpora. ACM Intl. Conf. Multimedia Retrieval (ICMR 2015), Shanghai, China, June 23–26, 2015. LLNL-CONF-678126.

- Carrano, C. J., and D. N. Poland, Ad hoc video query and retrieval using multi-modal feature graphs. (2015). LLNL-PRES-670430.

- Choi, J., et al., “The placing task: A large-scale geo-estimation challenge for social-media videos and images.” Proc. ACM Multimedia 2014 Workshop on Geotagging and Its Applications in Multimedia (GeoMM ’14), p. 27 (2014). LLNL-TR-663455.

- Mayhew, M., and K. Ni, Assessing semantic information in convolutional neural network representations of images via image annotation. (2015). LLNL-CONF-670551.

- Ni, K. S., et al., The Yahoo–Livermore–ICSI (YLI) multimedia feature set. (2014). LLNL-MI-659231.

- Thomee, B., et al., The new challenges in multimedia research. (2014). LLNL-JRNL-661903.