Barry Chen (17-SI-003)

Executive Summary

This research project is developing a deep-learning neural network system that discovers and assesses patterns in multimodal data gathered worldwide from all types of media, searching for indicators of nuclear proliferation capabilities and activities. The system will enhance our data-analysis capabilities in support of national security, nonproliferation, cyber and space security, intelligence, and biosecurity.

Project Description

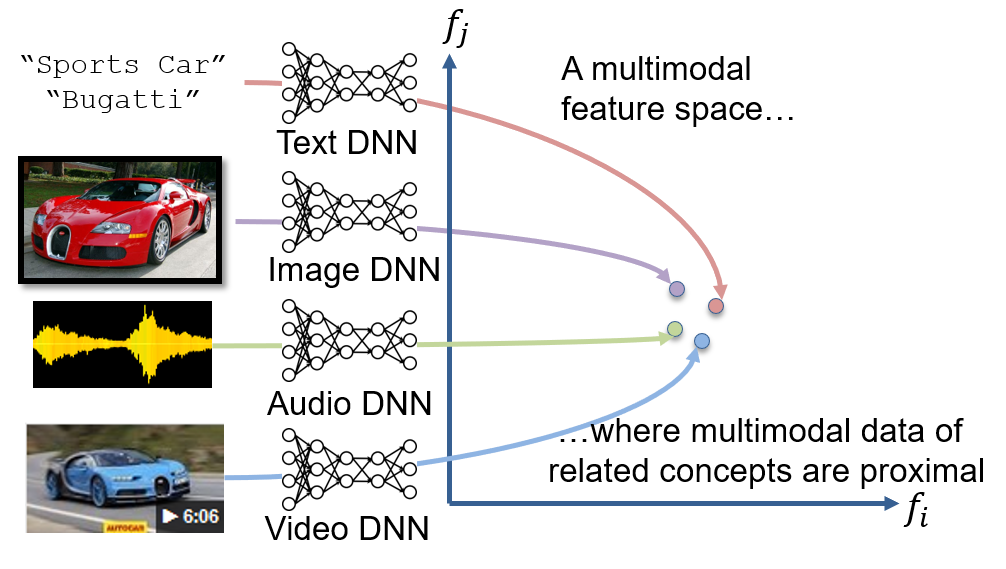

We are developing a large-scale, multimodal deep-learning system (a type of artificial intelligence based on algorithms that enable a program to learn to recognize objects on its own) that can discover and assess patterns in worldwide science, technology, political, and economic data for indicators of nuclear proliferation capabilities and activities. As the quantity of information about these activities continues to grow exponentially, automated systems for locating, harvesting, integrating, and mapping massive data streams are essential for guiding human decision makers to the important threads of information in the sea of available data. We intend to develop such a system and demonstrate both its utility in assessing nuclear proliferation activities and its potential to be extended to other global-scale challenges. This research will combine and extend advances in deep learning with emerging trends in computing technology to create a system that can discover patterns in large (over 100 million samples) multimodal data sets, explain the chain of reasoning used to arrive at those patterns, and push artificial neural networks (computational models inspired by biological neural networks) to massive scales, running on both the largest high-performance computing systems and low-power, deliverable packages. Our approach is to (1) develop new deep-learning algorithms and techniques for learning multimodal features in which conceptually similar data lie close together, enabling high-quality pattern retrieval; (2) develop high-performance computing software for training large models on large-scale training data; (3) deploy the trained models; and (4) validate the efficacy of our system in assisting nonproliferation analysts.
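The report does not describe the internals of the training software in item (2). As a hedged illustration of the general synchronous data-parallel pattern that such software typically relies on, the sketch below assumes a toy linear model: each simulated worker holds an identical copy of the model, computes a gradient on its own shard of the data, and the gradients are averaged (the role an MPI allreduce plays on a real cluster) before every replica applies the same update. The function names and the toy model are hypothetical; this is not LBANN code.

```python
import random


def gradient(w, b, shard):
    """Mean-squared-error gradient of the toy model y ~ w*x + b over one shard."""
    n = len(shard)
    gw = sum(2.0 * (w * x + b - y) * x for x, y in shard) / n
    gb = sum(2.0 * (w * x + b - y) for x, y in shard) / n
    return gw, gb


def allreduce_mean(grads):
    """Stand-in for an allreduce: average the per-worker gradients."""
    k = len(grads)
    return sum(g[0] for g in grads) / k, sum(g[1] for g in grads) / k


def data_parallel_sgd(data, num_workers=4, lr=0.1, steps=200):
    # Split the training set into equal-sized shards, one per simulated worker.
    shards = [data[i::num_workers] for i in range(num_workers)]
    w, b = 0.0, 0.0  # every worker starts from the same model replica
    for _ in range(steps):
        # On real hardware these gradients are computed concurrently.
        local = [gradient(w, b, s) for s in shards]
        gw, gb = allreduce_mean(local)
        # All replicas apply the identical averaged update, so they stay in sync.
        w -= lr * gw
        b -= lr * gb
    return w, b


random.seed(0)
xs = [random.uniform(-1.0, 1.0) for _ in range(400)]
data = [(x, 3.0 * x + 0.5) for x in xs]  # noise-free synthetic data
w, b = data_parallel_sgd(data)
print(round(w, 3), round(b, 3))
```

With equal-sized shards, the averaged shard gradients equal the full-batch gradient, so adding workers changes where the arithmetic happens but not the result; the cost is the communication in each averaging step, which is what techniques such as gradient quantization aim to reduce.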

As collections of multimodal data (text, images, video, transactional records, and other sensor data types) continue to grow, the ability to automatically discover patterns in massive, sparsely labeled data becomes increasingly important for many Livermore mission areas. We aim to address this need by developing the first large-scale multimodal deep-learning system capable of discovering and explaining multimodal patterns in massive data, thereby accelerating the pace and expanding the coverage of nonproliferation analysis. The key element of this learning system is the multimodal semantic feature space at the hub of the “semantic wheel” framework. In this framework, deep-learning models (the “spokes”) map multiple types of data into a unified semantic feature space to facilitate human analysis. The defining characteristic of these high-dimensional semantic spaces is that the distance between two points reflects the similarity of their semantic content. For example, text phrases related to elements of nuclear technology will lie near images or materials-shipment records related to similar technologies. Deep-learning systems that combine these characteristics are not currently being developed in industry or academia. Although we will focus on nonproliferation assessment capabilities, this work can be extended to intelligence and other national security programs, and its results could position the Laboratory as a leader in advanced analytics.
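A minimal sketch of the semantic-wheel idea follows, assuming two hypothetical spokes with hand-set weights (in the system described here, the spokes are learned deep networks). A text spoke averages per-word vectors and an image spoke applies a fixed linear projection to image features; both land in the same 3-D space, so cross-modal retrieval reduces to a nearest-neighbor search by cosine similarity. Every name, vector, and weight below is illustrative only.

```python
import math


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v] if n > 0 else v


def cosine(a, b):
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


# Hypothetical text spoke: word vectors in a shared 3-D semantic space.
WORD_VECTORS = {
    "centrifuge": [0.9, 0.1, 0.0],
    "rotor": [0.8, 0.0, 0.1],
    "uranium": [0.7, 0.3, 0.0],
    "solvent": [0.1, 0.9, 0.0],
    "extraction": [0.0, 0.8, 0.1],
    "tractor": [0.0, 0.0, 1.0],
}


def text_spoke(phrase):
    """Map a phrase into the shared space by averaging known word vectors."""
    vecs = [WORD_VECTORS[w] for w in phrase.lower().split() if w in WORD_VECTORS]
    if not vecs:
        return [0.0, 0.0, 0.0]
    return normalize([sum(col) / len(vecs) for col in zip(*vecs)])


# Hypothetical image spoke: a fixed 3x4 projection of 4-D image features.
IMAGE_PROJECTION = [
    [1.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]


def image_spoke(features):
    """Map an image feature vector into the same shared space."""
    return normalize([sum(w * f for w, f in zip(row, features)) for row in IMAGE_PROJECTION])


def retrieve(query_vec, items, k=2):
    """Return the names of the k items closest to the query by cosine similarity."""
    ranked = sorted(items, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [name for name, _ in ranked[:k]]


images = {
    "img_centrifuge_cascade": [0.9, 0.8, 0.1, 0.0],
    "img_pulse_column": [0.1, 0.0, 0.9, 0.1],
    "img_farm_field": [0.0, 0.1, 0.0, 0.9],
}
image_items = [(name, image_spoke(f)) for name, f in images.items()]

# A text query retrieves images because both modalities share one space.
print(retrieve(text_spoke("centrifuge rotor"), image_items, k=1))
```

Adding a modality (for example, shipment records) would mean adding another spoke that maps into the same space; the retrieval code is unchanged, which is the practical appeal of a shared hub.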

Mission Relevance

This research supports the NNSA goals of reducing nuclear dangers and strengthening our science, technology, and engineering base. By enabling the discovery of complex patterns across multiple types of data, this project could enhance our advanced data-analysis capabilities in support of missions in national security, cyber and space security, nonproliferation, intelligence, and biosecurity. In addition, the technology we intend to develop supports the Laboratory's core competency in high-performance computing, simulation, and data science.

FY17 Accomplishments and Results

In FY17, we (1) created a nuclear technology dataset from open-source media, focused on equipment and materials indicative of the enrichment and reprocessing stages, to serve as benchmark data for quantifying the retrieval performance of our algorithms; (2) created new algorithms for learning features from unlabeled images and video and demonstrated their effectiveness for retrieval and recognition in both ground-based and overhead data; (3) visualized neurons using sensitivity analyses, rather than optimization-based approaches, to improve interpretability; and (4) enhanced the Livermore Big Artificial Neural Network (LBANN) training software to support training deep autoencoders greedily, layer by layer, as well as unsupervised context learners.
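The report does not state which metric the benchmark dataset uses to quantify retrieval performance. As an assumption, precision at k and mean average precision (MAP) are common choices for scoring ranked retrieval against labeled benchmark data; a minimal sketch follows, where `ranked` is a system's ranked result list for one query and `relevant` is the hypothetical set of benchmark items labeled as matching that query.

```python
def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k retrieved items that are labeled relevant."""
    return sum(1 for item in ranked[:k] if item in relevant) / k


def average_precision(ranked, relevant):
    """Mean of precision@i over each rank i at which a relevant item appears."""
    hits, total = 0, 0.0
    for i, item in enumerate(ranked, start=1):
        if item in relevant:
            hits += 1
            total += hits / i
    # Relevant items never retrieved contribute zero, lowering the score.
    return total / len(relevant) if relevant else 0.0


def mean_average_precision(runs):
    """runs: list of (ranked_list, relevant_set) pairs, one per benchmark query."""
    return sum(average_precision(r, rel) for r, rel in runs) / len(runs)


# Hypothetical query: relevant items retrieved at ranks 1, 3, and 5.
ranked = ["a", "x", "b", "y", "c"]
relevant = {"a", "b", "c"}
print(precision_at_k(ranked, relevant, 3), average_precision(ranked, relevant))
```

Precision at k reflects what an analyst sees on the first page of results, while average precision also rewards placing relevant items early throughout the list, which is why the two are often reported together.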

Publications and Presentations

Choi, J., et al. 2017. "The Geo-Privacy Bonus of Popular Photo Enhancements." International Conference on Multimedia Retrieval, Bucharest, Romania, June 6–9, 2017. LLNL-CONF-739933.

Dryden, N. J., et al. 2017. "Communication Quantization for Data-Parallel Training of Deep Neural Networks." Machine Learning in HPC Environments, Salt Lake City, UT, November 13–18, 2017. LLNL-CONF-700919.

Grathwohl, W., and A. Wilson. 2017. "Disentangling Space and Time in Video with Hierarchical Variational Auto-Encoders." Computer Vision and Pattern Recognition, Honolulu, HI, July 22–25, 2017. LLNL-CONF-725749.

Jacobs, S. A., et al. 2017. "Towards Scalable Parallel Training of Deep Neural Networks." Machine Learning in HPC Workshop (MLHPC), Supercomputing (SC17), Denver, CO, November 17–20, 2017. LLNL-CONF-737759.

Jing, L., et al. 2017. "DCAR: A Discriminative and Compact Audio Representation for Audio Processing." IEEE Transactions on Multimedia. PP(99). LLNL-JRNL-739946.

Ni, K., et al. 2017. "Sampled Image Tagging and Retrieval Methods on User Generated Content." British Machine Vision Conference, London, UK, Sept. 4–7, 2017. LLNL-CONF-712477.