Markus Schordan (17-ERD-023)

Executive Summary

This project seeks to improve the detection and correction of data-race bugs, which occur when sequences of computed events happen in unintended order, jeopardizing the integrity of parallel programs using shared-memory architectures. These improvements will increase the efficiency of the high-performance computing systems that underlie many of the nation’s security missions.

Project Description

High-performance computing is susceptible to data-race bugs. Data races occur when sequences of computed events do not happen in the intended order, creating a significant barrier to the integrity of parallel programs using shared-memory architectures. Many approaches to data-race detection are based on traditional static-analysis techniques that typically execute on a program’s source code or control-flow graph (the graphical representation of all possible orders of events in the execution of a program). Similar to classical data-flow analyses, such tools can produce false alarms that report spurious race conditions on error-free programs. We are developing a software verification technique that requires as input only a program’s source code and can automatically detect a data-race bug or determine that the program is data-race free. By basing our approach on model checking, allowing us to report an error that verifies the absence of race conditions, we plan to implement a technique that does not produce false alarms. Our technique presents a valuable addition to the testing of parallel programs because it can automatically detect and then ensure the absence of data races, thus reducing development effort. Once we know that a program is definitely free of data races under certain automatically determined conditions, our understanding and remediation of other bugs will also improve. No testing for data races will be necessary for those parts of a program that have been proved to be free of these conditions. Our work will be applicable to multiprocessing programs using both central processing unit and graphics processing unit forms of threading execution. Our methodology could not only be used to better understand and/or debug a program, but also to reduce the number of tests that are run for a given program. In case a data race is determined, the paths in the program execution that can lead to a data race are reported to the user, and may greatly aid them in correcting the code.

We will produce an automatic formal verification that detects data races at compile time and an evaluation of our approach based on up-to-date versions of proxy applications and the history of projects where data races were found in the past. We are considering software verification techniques based on previous experience in formal software verification and successful participation in international verification competitions using the proxy application structures for Livermore's particle transport code, known as Kripke, consisting of both concrete and abstract states that represent the behavior of the analyzed program. We intend to advance the state of the art to address the complexities of a range of computer languages used in Laboratory applications that run on shared memory architectures, in particular C++ and OpenMP, as well as Raja (Ray-Tracer in Java), which has not been addressed in any verification work. In contrast to usual verification techniques, our proposed approach searches for and outputs valid specifications instead of requiring the user to provide them. These specifications are constrained such that the verification can either pass or an error can be determined in the program. The user only has to read and understand the generated specification, but does not need to write it. Our cumulative improvement will reduce the number of tests needed and increase efficiency and accuracy within the high-performance-computing software-development life cycle.

Mission Relevance

This research addresses the NNSA goals of advancing the technological and engineering competencies that are at the foundation of NNSA missions and expands our ability to deal with broad national challenges. This work supports Livermore’s core competency in high-performance computing, simulation, and data science by ensuring that existing and new parallel applications are data-race free and work correctly on future high-performance-computing machines. Furthermore, developers who write programs for shared-memory architectures could benefit from a tool that implements our approach.

FY17 Accomplishments and Results

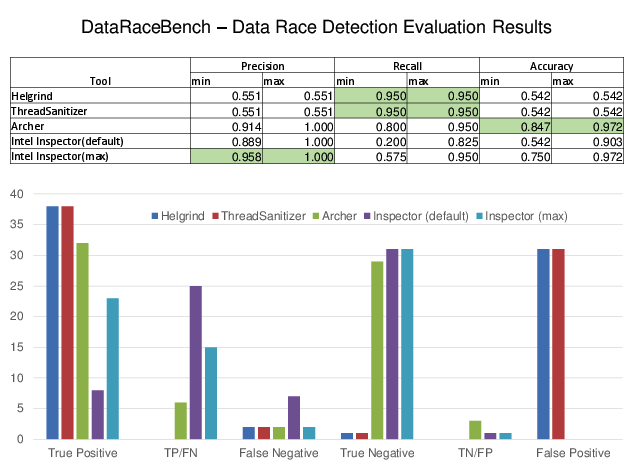

In FY17, we (1) investigated proxy-applications and identified the requirements for our tool to operate on these codes; (2) created a benchmark suite that properly organizes all identified code patterns of interest; (3) performed an in-depth evaluation of existing dynamic analysis tools with our benchmark suite; (4) submitted a conference paper to the SuperComputing 2017 conference, which has since been nominated for best paper; and (5) submitted our benchmark suite, DataRaceBench, to Livermore's review & release to make it available to others and set the stage for evaluation and comparison of data race detection tools.

Publications and Presentations

Liao, C., et al. 2017. "DataRaceBench: A Benchmark Suite for Systematic Evaluation of Data Race Detection Tools." The International Conference for High Performance Computing, Networking, Storage and Analysis. Nov.12-17, Denver, CO. LLNL-CONF-728717.