David Widemann (15-ERD-050)

Abstract

The goal of this project was to demonstrate that deep neural networks coupled with semantic embeddings could be used to process large amounts of text, imagery, and video data for national security applications. Such neural networks perform low-level tasks, allowing analysts to concentrate on higher-level analyses. We researched problems including document classification, mapping video to text, text summarization, semantic search and retrieval, and sentence classification. The common theme throughout these tasks was the construction of neural networks with embedded layers to create robust feature representations for text, video, and imagery data. This research helped Lawrence Livermore National Laboratory garner several externally funded projects, attract new talent, and form research collaborations with academic partners.

Background and Research Objectives

The vast flood of digital information due to the rise of social networks, email, real-time chat, blogs, etc., offers enormous opportunities for analysts to gain insight into patterns of activity and to detect national security threats. However, to benefit from these opportunities, systems must be developed to analyze not only the enormous quantity of data, but also the diverse forms in which it arrives. The ever-increasing volume of data and the ever-shifting landscape of illicit weapons of mass destruction (WMD) production make a purely human-driven system difficult to scale and slow to respond to fast-emerging threats. The current strategy of employing a veritable army of analysts to manually build graphs and connect divergent pieces of information is inefficient and can lead to inconsistencies and contradictory conclusions. We believe that the task of inferring connections and finding patterns by analyzing vast stores of data is best done by computers, freeing the analyst to make high-level decisions.

Towards this end, the goals of this project were to:

- design deep neural networks capable of ingesting large amounts of data and performing low-level decision-making, thus freeing up analyst time;

- construct semantically meaningful feature representations for differing data modalities; and

- demonstrate these machine-learning algorithms on a Lawrence Livermore national security problem set.

Scientific Approach and Accomplishments

This section describes several accomplishments related to achieving the aforementioned goals.

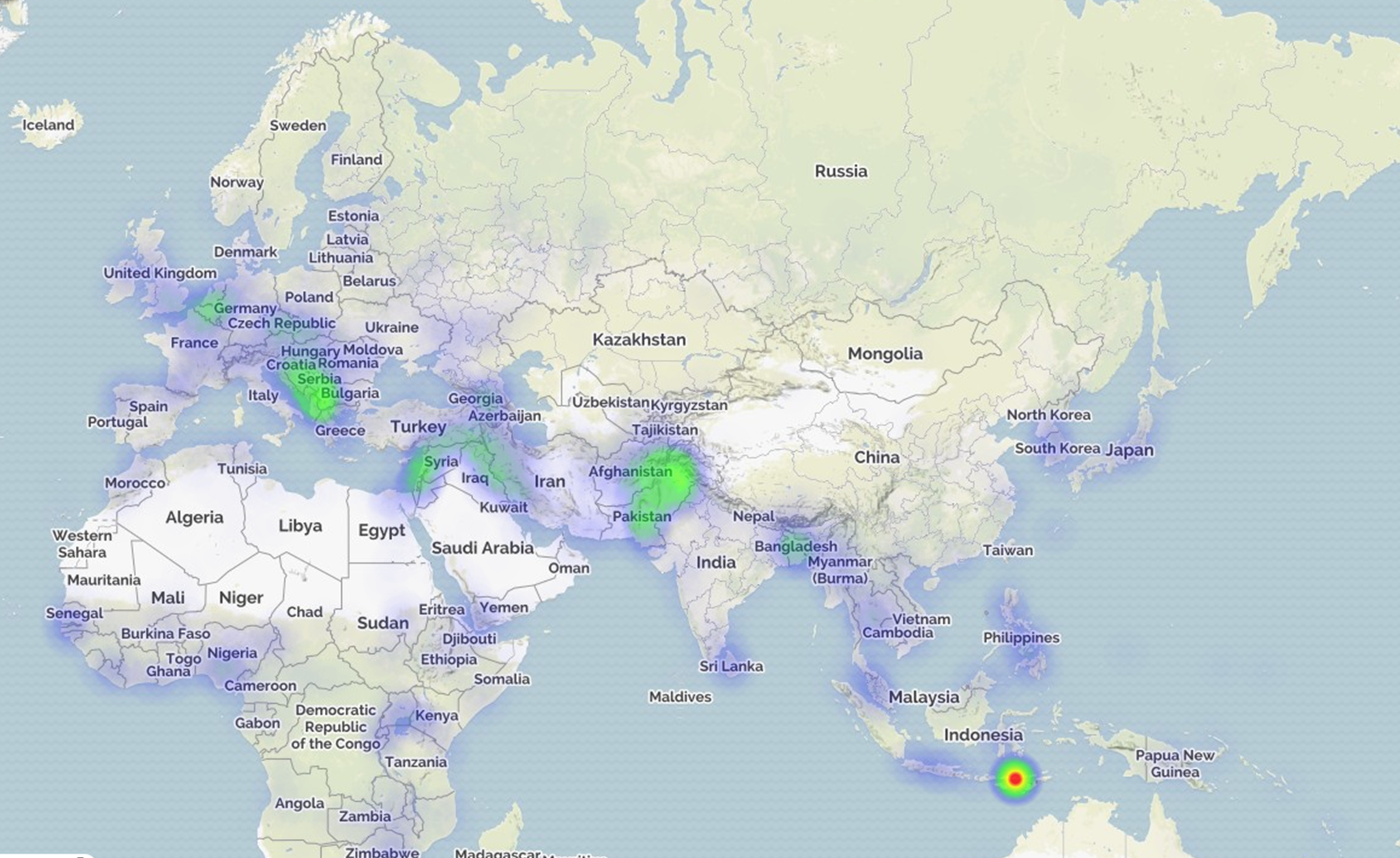

Spatio-Temporal Heatmaps

Monitoring threat levels around the globe using open news sources is a challenging task. The analyst must sift through an enormous number of search returns to determine which articles are relevant to his or her query. There is also the issue of translating non-English text to English. Ideally, the analyst would be able to read all of the text and be able to infer the threat level for his or her application at hand. Example applications include monitoring nuclear non-proliferation activities and predicting forced migrations. In practice, the analyst must triage which articles to read because of time constraints.

In our project, we constructed a spatio-temporal heatmap that is able to ingest all of the text sources and display when various regions are most active for the input search query. The algorithm uses a combination of semantic word embeddings that are learned via context usage (Mikolov 2013a, 2013b; Pennington 2014). Using word vectors, articles are then mapped into a high-dimensional vector space that allows for scoring each article against a Gaussian mixture model generated from the search query (see Figure 1). (A Gaussian mixture model is a probabilistic model that assumes all the data points are generated from a mixture of a finite number of Gaussian distributions with unknown parameters.) The data is triaged by ranking each article by score. The analyst is then able to address the most relevant articles first. The success of the spatio-temporal heatmap demo played an important role in advancing other machine learning projects at the Laboratory.
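The scoring and ranking step can be illustrated with a minimal sketch. It is not the project's implementation: the toy two-dimensional word vectors, the averaging of word vectors into an article vector, the diagonal covariances, the hand-set mixture parameters, and the names `embed`, `gmm_log_likelihood`, and `rank_articles` are all assumptions made for the example.

```python
import math

# Toy 2-D word vectors (hypothetical values; real embeddings have hundreds of dimensions).
WORD_VECTORS = {
    "reactor": [0.9, 0.1],
    "uranium": [0.8, 0.2],
    "enrichment": [0.85, 0.15],
    "football": [0.1, 0.9],
    "match": [0.2, 0.8],
}

def embed(text):
    """Average the vectors of known words (an assumed article representation)."""
    vecs = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vecs:
        return None
    dim = len(vecs[0])
    return [sum(v[d] for v in vecs) / len(vecs) for d in range(dim)]

def log_gaussian(x, mean, var):
    """Log density of a Gaussian with diagonal covariance."""
    return sum(
        -0.5 * (math.log(2 * math.pi * s) + (xi - m) ** 2 / s)
        for xi, m, s in zip(x, mean, var)
    )

def gmm_log_likelihood(x, components):
    """Log-likelihood of x under a mixture of (weight, mean, var) components."""
    logs = [math.log(w) + log_gaussian(x, m, v) for w, m, v in components]
    top = max(logs)
    # log-sum-exp keeps the sum numerically stable
    return top + math.log(sum(math.exp(l - top) for l in logs))

def rank_articles(articles, components):
    """Return articles sorted from highest to lowest mixture score."""
    scored = []
    for article in articles:
        vec = embed(article)
        if vec is not None:
            scored.append((gmm_log_likelihood(vec, components), article))
    return [a for _, a in sorted(scored, key=lambda p: p[0], reverse=True)]

# One component centered on each query word (assumed here instead of fitting with EM).
query_gmm = [
    (0.5, WORD_VECTORS["reactor"], [0.05, 0.05]),
    (0.5, WORD_VECTORS["uranium"], [0.05, 0.05]),
]
articles = ["football match tonight", "uranium enrichment reactor restarted"]
ranked = rank_articles(articles, query_gmm)
```

In this toy setup the article about enrichment is ranked ahead of the sports article because its averaged vector lies closer to the query components.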

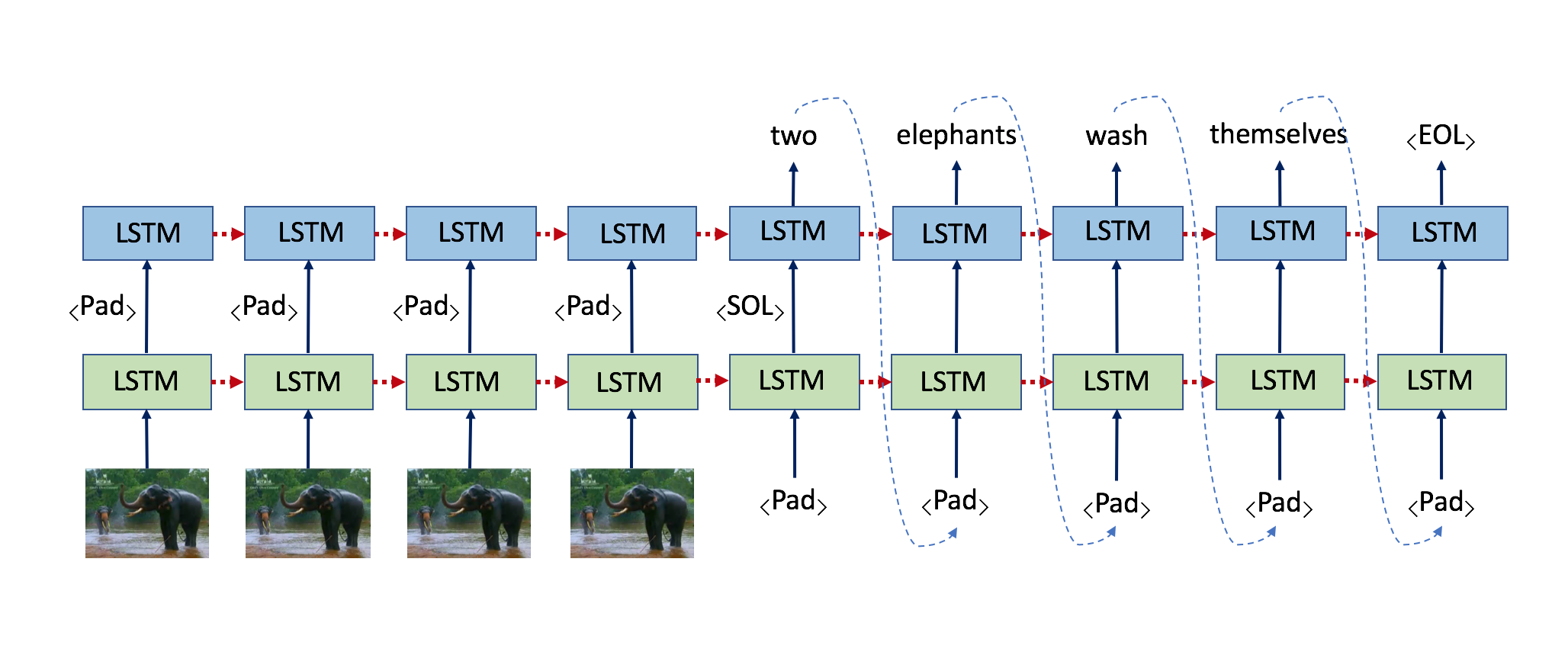

Video to Text

Open source videos from websites such as YouTube are another data source from which analysts draw inferences and make conclusions. The main constraint for analysts within this data modality is that it is difficult to perform search-and-retrieve functions against videos using text input queries. Often, specific frames within videos will be relevant, but they will not be textually annotated appropriately. Another issue is that analyzing videos takes longer than analyzing text. For video, the analyst must sequentially watch the video at approximately the frame rate to form an opinion.

To address these issues, we coded deep neural networks that are capable of ingesting videos and outputting text descriptions of what is occurring in the video (Venugopalan 2015). This allows not only for retrieving the correct video but also for retrieving the correct frames within the video (see Figure 2). The algorithm's architecture for this task uses a convolutional neural network on each frame of the video to generate video features. The video features are input into a recurrent neural network that uses stacked long short-term memory cells to predict a sentence describing the video.
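To make the recurrent part of this architecture concrete, the following sketch runs a single long short-term memory (LSTM) layer over a sequence of per-frame feature vectors. It is an illustration under stated assumptions, not the project's network: the frame features stand in for convolutional network outputs, the weights are arbitrary constants rather than trained values, only one layer is shown (a stacked model would feed each layer's hidden state into the next), the word-generating decoder is omitted, and the names `lstm_step` and `encode_frames` are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM cell update.

    W maps a gate name to weight rows over the concatenated [x, h_prev];
    b maps a gate name to a bias vector. Gates: input (i), forget (f),
    output (o), and candidate (g).
    """
    z = x + h_prev  # concatenate input features with previous hidden state

    def affine(gate):
        return [sum(w * v for w, v in zip(row, z)) + bias
                for row, bias in zip(W[gate], b[gate])]

    i = [sigmoid(a) for a in affine("i")]
    f = [sigmoid(a) for a in affine("f")]
    o = [sigmoid(a) for a in affine("o")]
    g = [math.tanh(a) for a in affine("g")]
    # New cell state: keep part of the old state, add part of the candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def encode_frames(frame_features, W, b, hidden_size):
    """Run the cell over all frames and return the final hidden and cell state."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    for x in frame_features:
        h, c = lstm_step(x, h, c, W, b)
    return h, c

# Hypothetical sizes: 3 features per frame, 2 hidden units, untrained weights.
input_size, hidden_size = 3, 2
W = {g: [[0.1] * (input_size + hidden_size) for _ in range(hidden_size)] for g in "ifog"}
b = {g: [0.0] * hidden_size for g in "ifog"}
frames = [[0.2, 0.4, 0.1], [0.3, 0.1, 0.5]]
h_final, c_final = encode_frames(frames, W, b, hidden_size)
```

In a full model, the final state would condition a second recurrent stage that emits the words of the description one at a time.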

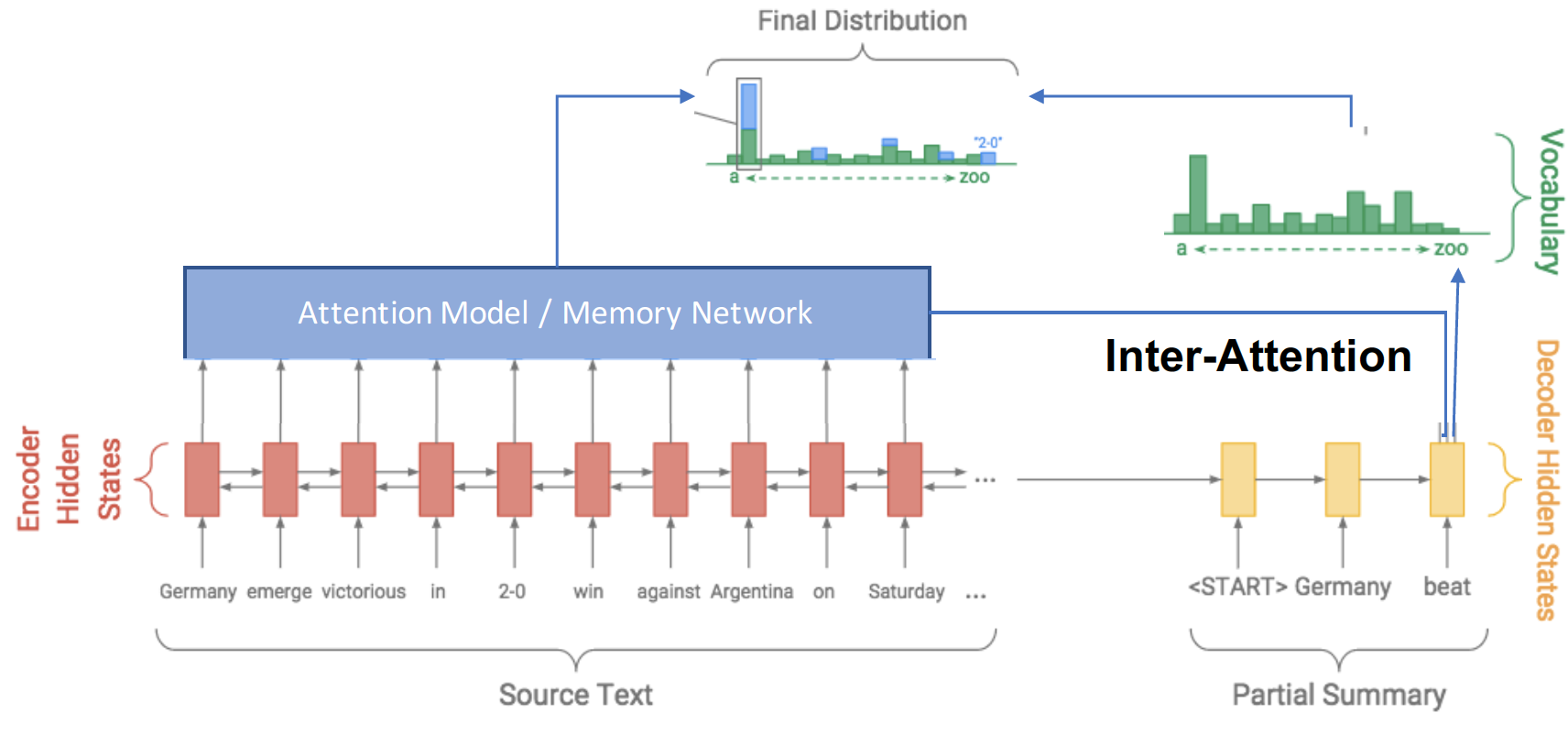

Text Summarization

Analysts often need to read large amounts of text to draw their conclusions. Unfortunately, much of that reading time is spent on irrelevant text. Along with having algorithms rank articles by their relevance, we can also use text summarization to compress each article. We developed neural network models that take an article as input and use extractive and abstractive techniques to generate a compressed version of the article. The compressed version is often 80% smaller than the original. This allows the analyst to read a brief summary of an article to decide whether it is necessary to peruse the full article.

The architectures for these text summarization models use novel attention mechanism layers (Nallapati 2016) to determine which parts of the input text are most pertinent. The attention mechanism learns which elements of the input sequence to map to an element of the output sequence. The output summary text is then generated using a combination of neural networks that use encoder layers to learn text features and decoder layers that use attention mechanisms to output summaries (see Figure 3).
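The idea of an attention mechanism can be sketched in a few lines. The example below assumes simple dot-product scoring between a decoder state and each encoder state; the cited models may score differently, and the vectors and the name `attend` are illustrative assumptions rather than details from this project.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, encoder_states):
    """Return attention weights and the weighted sum of encoder states."""
    # Dot-product score between the decoder state and each input position.
    scores = [sum(d * e for d, e in zip(decoder_state, enc))
              for enc in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * enc[k] for w, enc in zip(weights, encoder_states))
               for k in range(dim)]
    return weights, context

# Three input positions with hypothetical 2-D encoder features.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
weights, context = attend([2.0, 0.0], encoder_states)
```

Here the first input position receives the largest weight because it aligns best with the decoder state, so it contributes most to the context vector used to produce the next output word.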

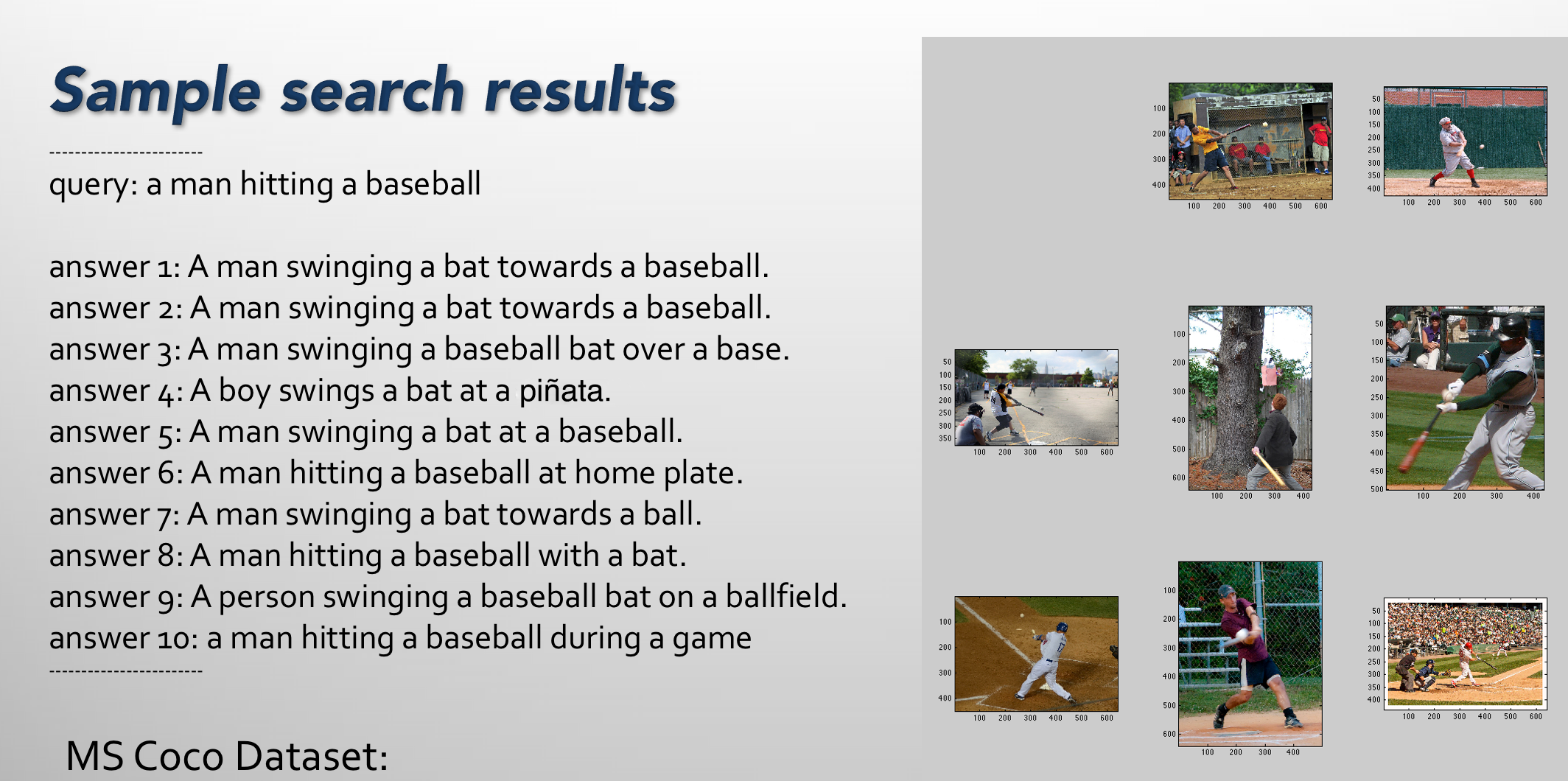

Robust Order Preserving Embeddings (ROPE)

Analysts currently use keyword search to retrieve documents. This is problematic because many documents of interest contain words that are synonymous with the search terms but do not contain the keywords themselves. We have developed an algorithm, Robust Order Preserving Embeddings (ROPE) (Widemann 2016), that is capable of searching semantically. This means that if the analyst searches "dog," documents containing analogous terms such as "puppy" or "canine" will also be returned.

The ROPE algorithm uses Word2Vec, a group of related models used to produce word embeddings, to learn semantic representations for words. Text data is then encoded at the sentence level using word vectors that are projected into a high-dimensional vector space. The same is done for the search terms of a query. As illustrated in Figure 4, once this text data is represented as features in a high-dimensional vector space, the Euclidean norm can be used to return sentences that are closest to the query (Candès 2004). A US Government agency has funded a study to demonstrate ROPE's effectiveness for their datasets.
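The retrieval step, returning the sentences nearest to the query under the Euclidean norm, can be sketched as follows. This is a simplified illustration and does not reproduce ROPE's order-preserving encoding or its projection step: sentences are represented by averaging hypothetical three-dimensional word vectors, and the names `sentence_vector`, `euclidean`, and `search` are assumptions made for the example.

```python
import math

# Hypothetical word vectors; a trained Word2Vec model would supply these.
WORD_VECTORS = {
    "dog": [0.9, 0.1, 0.0],
    "puppy": [0.85, 0.15, 0.05],
    "canine": [0.8, 0.2, 0.0],
    "car": [0.0, 0.1, 0.9],
    "engine": [0.05, 0.0, 0.95],
}

def sentence_vector(sentence):
    """Average the vectors of known words (order is ignored in this sketch)."""
    vecs = [WORD_VECTORS[w] for w in sentence.lower().split() if w in WORD_VECTORS]
    if not vecs:
        return None
    dim = len(vecs[0])
    return [sum(v[d] for v in vecs) / len(vecs) for d in range(dim)]

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def search(query, sentences, k=2):
    """Return the k sentences whose vectors are closest to the query vector."""
    q = sentence_vector(query)
    if q is None:
        return []
    scored = []
    for s in sentences:
        v = sentence_vector(s)
        if v is not None:
            scored.append((euclidean(q, v), s))
    return [s for _, s in sorted(scored, key=lambda p: p[0])[:k]]

sentences = ["the puppy slept", "the engine stalled", "a canine barked"]
results = search("dog", sentences)
```

Neither returned sentence contains the word "dog"; both are retrieved because their vectors lie near the query vector, which is the behavior that keyword search cannot provide.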

Sentence Classification

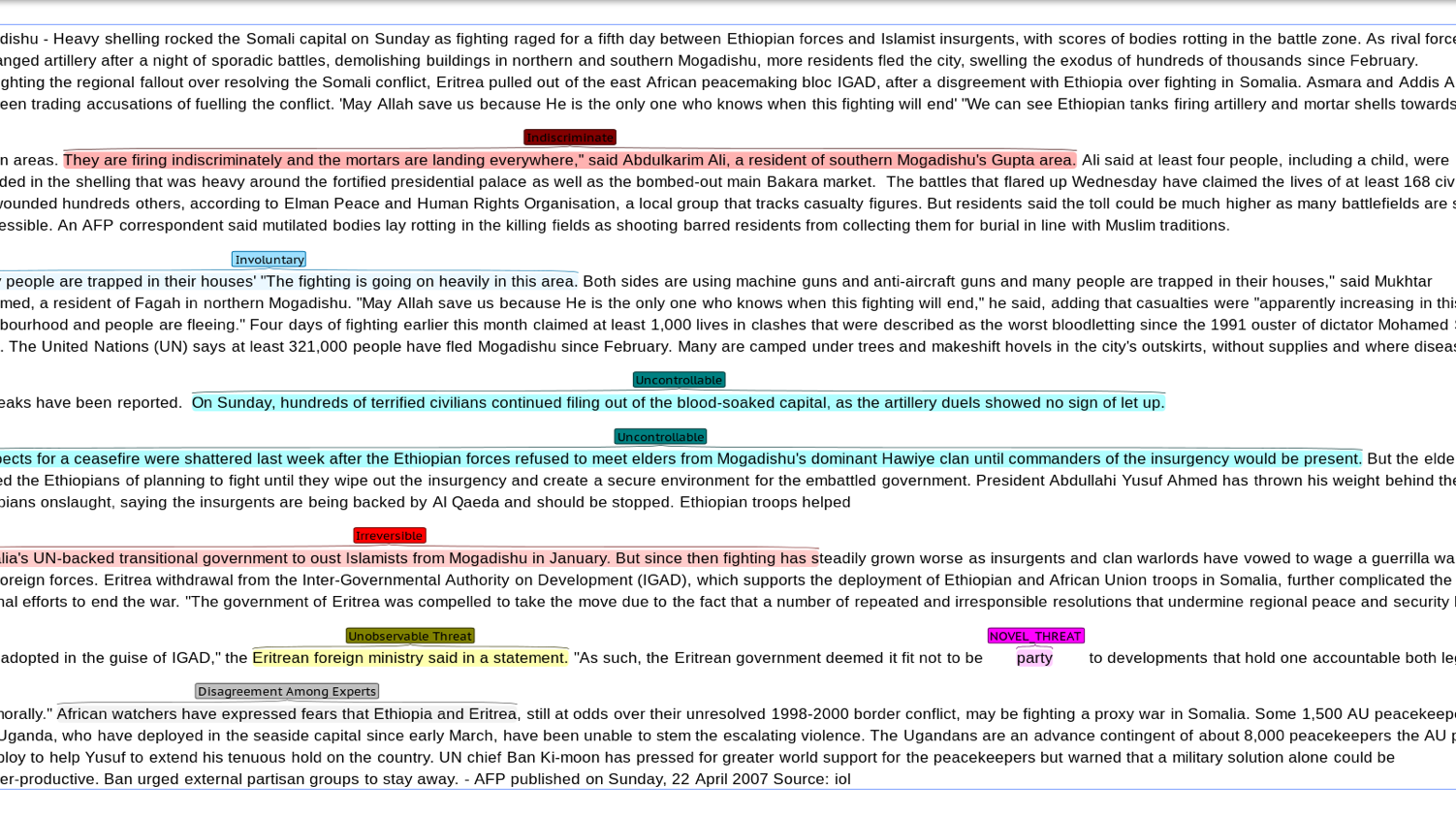

We also collaborated with researchers at Georgetown University in Washington, D.C., to predict events such as forced migration and ethnic cleansing (Collmann 2016). Their data analysis requires researchers to submit queries that return thousands of news articles that need to be read and annotated. Each article is scored and then entered into a predictive model. The task of reading and annotating is very time consuming; it takes researchers on the order of months to annotate the articles.

We developed a recurrent neural network that is capable of annotating these articles in an automated fashion. The algorithm ingests an article, parses it into sentences, and passes each sentence into a neural network that has been trained to label the sentence's DREAD threat class (DREAD is a risk-rating system commonly used for risk assessment in computer security). While the algorithm is not perfect, it assigns a class probability to each sentence. These probabilities are used to sort sentences, allowing researchers to focus on sentences that require more human attention (see Figure 5).
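One way to use class probabilities for triage is sketched below. The report does not specify the sorting rule, so this example assumes that the sentences needing the most human attention are those whose highest class probability is lowest; the class labels, probabilities, sentences, and the name `triage` are hypothetical.

```python
def triage(scored_sentences):
    """Order sentences so the least confident predictions come first.

    scored_sentences: list of (sentence, {class_label: probability}).
    Confidence is taken to be the largest class probability (an assumption).
    """
    return sorted(scored_sentences, key=lambda item: max(item[1].values()))

# Hypothetical classifier output, for illustration only.
scored = [
    ("Villagers fled after the attack.", {"high": 0.92, "low": 0.08}),
    ("Officials met to discuss the border.", {"high": 0.55, "low": 0.45}),
    ("The market reopened on Monday.", {"high": 0.03, "low": 0.97}),
]
review_queue = triage(scored)
```

With this rule, the ambiguous sentence about the border meeting is placed at the front of the review queue, and the confidently labeled sentences follow.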

Impact on Mission

Our work supports the NNSA goal of providing expert knowledge and operational capability for counterterrorism, counterproliferation, and nuclear threat response. By moving the burden of integrating the pieces together from the analyst to the machine, our project frees analysts to perform high-level decision-making and analysis tasks rather than spend significant time "in the weeds." Our objective was to create an improved computational capability to provide decision makers with early warning of threats in a fast-moving, ever-changing national security and threat landscape. Developing this capability is well aligned with the strategic focus area of cyber security. Our approach aims to create better awareness of threats facing the nation and support the Laboratory's core competency in high-performance computing, simulation, and data science.

Conclusion

The body of work from this project will be transferred to internal national security applications and be used by academic collaborators. The research has also been used to garner additional funding. This project demonstrated the effectiveness of using deep neural networks for national security applications and has shown that there are still many open research questions to address.

Our interaction with analysts has shown a need for algorithms that are capable of

- natural language understanding for question-answering tasks,

- image segmentation for object recognition,

- machine translation for handling non-English text,

- zero-shot learning or the ability to learn from a very small number of labeled samples (Socher 2014), and

- outputting results that are explainable so that analysts can understand the algorithm's decision-making process.

Continued funding for research in these areas will demonstrate Lawrence Livermore's commitment to state-of-the-art machine learning and data analytics.

References

Candès, E. J., and T. Tao. 2004. "Near-Optimal Signal Recovery from Random Projections: Universal Encoding Strategies." IEEE Trans. Inform. Theory 52: 5406–5425.

Collmann, J., et al. 2016. "Measuring the Potential for Mass Displacement in Menacing Contexts." J. Refug. Stud. 29 (3): 273–294. doi:10.1093/jrs/few017.

Mikolov, T., et al. 2013a. "Efficient Estimation of Word Representations in Vector Space." Proc. Intl. Conf. Learning Representations (ICLR 2013), 1–12.

Mikolov, T., et al. 2013b. "Distributed Representations of Words and Phrases and Their Compositionality." NIPS, 1–9.

Nallapati, R., B. Xiang, and B. Zhou. 2016. "Sequence-to-Sequence RNNs for Text Summarization." CoRR abs/1602.06023.

Pennington, J., R. Socher, and C. D. Manning. 2014. "GloVe: Global Vectors for Word Representation." EMNLP. doi:10.3115/v1/D14-1162.

Socher, R., et al. 2014. "Zero-Shot Learning Through Cross-Modal Transfer." Proc. 26th Intl. Conf. Neural Information Processing Systems 1: 935–943.

Venugopalan, S., et al. 2015. "Sequence to Sequence - Video to Text." CoRR abs/1505.00487.

Widemann, D., E. X. Wang, and J. Thiagarajan. 2016. ROPE: Recoverable Order-Preserving Embedding of Natural Language. LLNL-TR-682663.

Publications and Presentations

Li, Q., et al. 2016. Influential Node Detection in Implicit Social Networks using Multi-task Gaussian Copula Models. NIPS 2016 Time Series Workshop, Barcelona, Spain, Dec. 5–10, 2016. LLNL-PROC-710678.

Sattigeri, P., and J. J. Thiagarajan. 2016. Sparsifying Word Representation for Deep Unordered Sentence Modeling. Association for Computational Linguistics 2016, Berlin, Germany, Aug. 7–12, 2016. LLNL-CONF-699499.

Widemann, D. P., and J. Ordonez. 2016. Semantic Evaluation Using Robust Order-Preserving Embeddings. LLNL-TR-699098.

Widemann, D., E. X. Wang, and J. Thiagarajan. 2016. ROPE: Recoverable Order-Preserving Embedding of Natural Language. LLNL-TR-682663.