Ignacio Laguna Peralta (15-ERD-039)

Abstract

The DOE has identified resilience as one of the major challenges in achieving exascale computing, specifically the ability of the system and applications to work through frequent faults and failures. An exascale machine (a quintillion floating-point operations per second) will comprise many more hardware and software components than today's petascale machines, which will increase the overall probability of failures. Most NNSA defense-program simulations use the message-passing interface (MPI) programming model. However, the message-passing interface standard does not provide resilience mechanisms. It specifies that, if a failure occurs, the state is undefined and applications must abort. To address the exascale resilience problem, we propose to develop multiple resilient programming abstractions for large-scale high-performance computing applications, with an emphasis on compute node and process failures (among the most notable failure types) in the message-passing interface programming model. We will investigate the performance of the abstractions in several applications and study their costs in terms of programmability.

We expect to deliver resilient programming abstractions that will enable efficient fault tolerance in stockpile stewardship simulations at exascale. The abstractions will comprise a set of programming interfaces for the message-passing interface programming model that target node and process failures. The abstractions will encapsulate several failure recovery models to reduce the amount of code and reasoning needed to implement these models at large scale. As a result of this research, LLNL code teams will be more productive in their large-scale simulations because they can concentrate on the science behind the simulation rather than on the code needed to deal with frequent failures, especially in exascale simulations.

Mission Relevance

This project directly supports one of the core competencies of Lawrence Livermore, specifically high-performance computing, simulation, and data science. Simulations (to extend the lifetime of nuclear weapons in the stockpile, for example) will require the resilience abstractions that we will provide in this project to make effective use of exascale computing in support of the strategic focus area in stockpile stewardship science and a central Laboratory mission in national security.

FY15 Accomplishments and Results

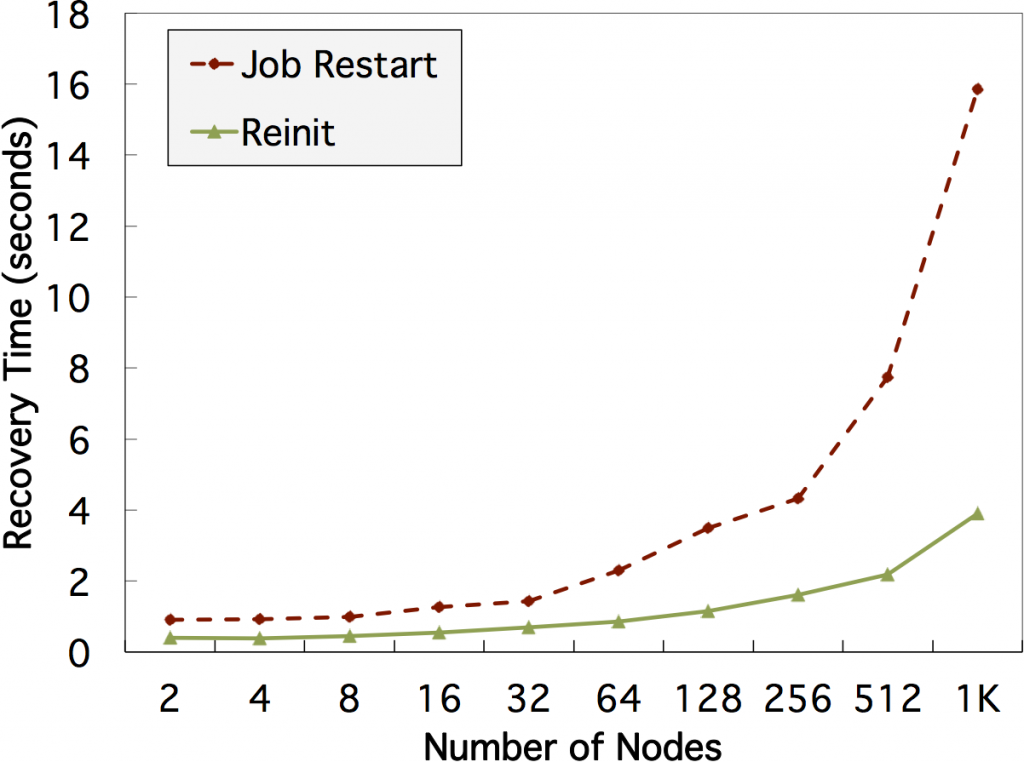

In FY15 we (1) constructed and validated a performance model for shrinking message-passing interface recovery, which is one of the resilience abstractions that we initially proposed; (2) demonstrated this failure recovery model in the LLNL-developed molecular dynamics plasma simulation code ddcMD; (3) created an initial prototype of the global-restart model (or Reinit) in a commonly used message-passing interface library (Open MPI); and (4) observed performance improvements with this model compared with traditional checkpoint and restart, in which the job is killed after a failure and then restarted so that it continues from the last checkpoint (see figure). We expect to better understand the implications of using this model from the point of view of applications. Next year we will use the collaborative tier-1 CORAL supercomputer system benchmarks as a test bed for this effort. CORAL is the next major phase in the DOE's scientific computing roadmap and path to exascale computing.
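As an illustration of the shrinking recovery idea, the following minimal Python sketch shows survivors continuing with fewer processes and re-dividing the work among themselves. It is not the project's implementation and does not use any message-passing interface library; the function name `shrink_and_rebalance` and the contiguous block partitioning are assumptions chosen only for this example.

```python
def shrink_and_rebalance(n_items, ranks, failed):
    """Hypothetical helper: drop failed ranks and re-divide work items.

    Returns a dict mapping each surviving rank to a contiguous range of
    work-item indices. This only imitates the bookkeeping an application
    might do after the communicator shrinks; no real MPI calls are made.
    """
    survivors = [r for r in ranks if r not in failed]
    if not survivors:
        raise RuntimeError("no surviving ranks to continue the computation")

    # Split n_items as evenly as possible; the first `extra` survivors
    # receive one additional item each.
    base, extra = divmod(n_items, len(survivors))
    assignment = {}
    start = 0
    for i, rank in enumerate(survivors):
        count = base + (1 if i < extra else 0)
        assignment[rank] = range(start, start + count)
        start += count
    return assignment


if __name__ == "__main__":
    # Four ranks share ten work items; rank 2 fails.
    for rank, items in shrink_and_rebalance(10, range(4), {2}).items():
        print(rank, list(items))
```

The sketch ignores data movement: in a real application, the state owned by the failed process would also have to be recovered, for example from a checkpoint, before survivors could take over its share.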

We have designed and implemented a novel approach to handle process failures, called Reinit, which allows high-performance computing applications to recover faster at scale. The figure shows recovery time measurements for process failures on the Sierra machine at LLNL. Reinit takes less than 4 s to recover with 1K nodes and 12K processes. Recovery with Reinit is 4 times faster than traditional job restarts.
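The control flow of a global-restart model can be sketched in a few lines of Python. This is a single-process simulation under stated assumptions, not the Reinit prototype or its interface: `ProcessFailure`, `run_with_global_restart`, and the `fail_at` parameter are hypothetical names, and failures are injected artificially. The point is only that, on a failure, the application re-enters its main loop from the last checkpoint inside the same job instead of being killed and resubmitted.

```python
class ProcessFailure(Exception):
    """Hypothetical stand-in for the runtime reporting a failed process."""


def run_with_global_restart(step_fn, n_steps, checkpoint_every, fail_at=()):
    """Run n_steps of step_fn, rolling back to the last checkpoint on failure.

    fail_at lists step indices at which a failure is injected once.
    Returns (final_state, number_of_restarts).
    """
    pending_failures = set(fail_at)
    checkpoint = (0, 0)  # (next step index, saved state)
    restarts = 0

    while True:
        # Re-entry point: every restart resumes from the last checkpoint.
        step, state = checkpoint
        try:
            while step < n_steps:
                if step in pending_failures:
                    pending_failures.discard(step)  # each failure fires once
                    raise ProcessFailure(f"simulated failure at step {step}")
                state = step_fn(state, step)
                step += 1
                if step % checkpoint_every == 0:
                    checkpoint = (step, state)
            return state, restarts
        except ProcessFailure:
            # The job is not torn down; only the computation is rolled back.
            restarts += 1


if __name__ == "__main__":
    final_state, restarts = run_with_global_restart(
        lambda state, i: state + i, n_steps=10, checkpoint_every=3, fail_at={4, 8}
    )
    print(final_state, restarts)
```

In this toy version the result matches a failure-free run because the work lost since the last checkpoint is simply recomputed. The sketch does not model the costs that the figure measures, such as relaunching processes or rebuilding communication state.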