Lucas Jaffe | 18-FS-014

Overview

While deep learning technologies for computer vision have developed rapidly since 2012, modeling of remote sensing systems has remained centered on human vision. In particular, remote sensing systems are usually designed to optimize the trade-off between cost and sensing quality with respect to human image interpretability. Although some recent studies have explored remote sensing system design as a function of simple computer vision algorithm performance, little work has related this design to the state of the art in computer vision: deep learning with convolutional neural networks. We developed experimental systems to conduct this analysis and report results obtained with modern deep learning algorithms and recent overhead image data. We compared our results with standard image quality measurements based on human visual perception and concluded not only that machine and human interpretability differ significantly, but also that computer vision performance is largely self-consistent across a range of disparate conditions. We present this research as a cornerstone for a new generation of sensor design systems that focus on computer algorithm performance instead of human visual perception.

Background and Research Objectives

In the past decade, overhead image data collection rates have expanded to the petabyte scale (Warren et al. 2015, Moody et al. 2016). This imagery is used for a wide variety of applications including disaster relief (Voigt et al. 2007), environmental monitoring (Wulder and Coops 2014), and intelligence, surveillance, and reconnaissance (ISR) (Heinze et al. 2008). Given the scale of this data and the need for fast processing, human analysis alone is no longer a tractable solution to these problems.

Correspondingly, algorithmic processing for this imagery has improved rapidly, with a recent emphasis on the use of convolutional neural networks (CNNs). CNNs, first shown to be effective on simple image recognition problems by Yann LeCun and colleagues in the late 1990s (LeCun et al. 1999), rose to prominence in 2012 after Alex Krizhevsky and colleagues introduced a breakthrough many-layer (deep) architecture (Krizhevsky et al. 2012). Learned feature representations from CNNs quickly replaced a host of hand-crafted image features, including histograms of oriented gradients (HOG) (Dalal and Triggs 2005), local binary patterns (LBP) (Ojala et al. 2002), and Haar-like features (Viola and Jones 2001).

Given the importance of visual recognition in overhead imagery, the need to use machines for this task, and the dominance of CNNs as the means of doing so, imagery should be acquired in a form that is well suited to visual recognition with CNNs. Consequently, the sensing systems that acquire this imagery must be designed with CNN recognition performance, rather than human interpretability, as the objective.

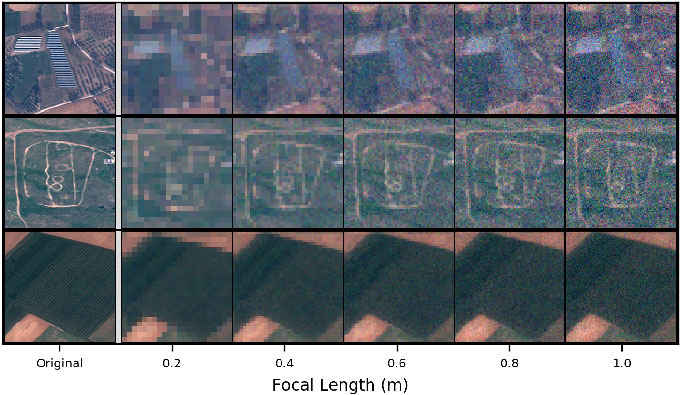

For this study, we designed a methodology to compare human and machine visual recognition performance on a dataset of satellite images, including empirical experiments that showcase the variability of machine recognition under different conditions (see figure). Our objective was to determine whether human and machine recognition performance differ and whether machine recognition performance is self-consistent. Further, we showed that our methods can be used to design new satellites that gather imagery optimal for machine recognition instead of human recognition.

Impact on Mission

This study supports national missions in space security for the reduction of global threats. We developed capabilities to meet national challenges in ISR. Specifically, this research targeted two space security areas of interest: small satellite technologies (our analytic system can be used to engineer satellite optics) and algorithm development and simulation (we researched algorithms targeted to satellite image data).

Conclusion

We developed a methodology for optimizing remote sensing parameters with respect to the performance of deep learning models on real overhead image data. We demonstrated a tool that implements this methodology and conducted a variety of experiments using different sensor and learning parameters.
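The report does not describe the internals of the tool. Purely as an illustration of what sweeping sensor parameters against recognition accuracy can look like, the following minimal sketch degrades toy images by block averaging (a crude stand-in for coarser ground sampling) and additive Gaussian noise, then scores a classifier at each setting. The functions `degrade`, `sweep`, and `mean_threshold`, the toy data, and the parameter grid are all hypothetical; a real study would substitute a physically based sensor model and a trained CNN.

```python
import random
from itertools import product


def degrade(image, factor, noise_sigma, rng):
    """Block-average a 2-D grayscale image by `factor`, then add Gaussian noise.

    Hypothetical stand-in for a sensor model: a larger factor mimics coarser
    sampling, and noise_sigma mimics a lower signal-to-noise ratio.
    """
    rows = len(image) // factor
    cols = len(image[0]) // factor
    out = []
    for r in range(rows):
        row = []
        for c in range(cols):
            block = [image[r * factor + i][c * factor + j]
                     for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block) + rng.gauss(0.0, noise_sigma))
        out.append(row)
    return out


def sweep(images, labels, classify, factors, sigmas, seed=0):
    """Return {(factor, sigma): accuracy} for every sensor setting in the grid."""
    rng = random.Random(seed)
    results = {}
    for factor, sigma in product(factors, sigmas):
        correct = sum(
            classify(degrade(img, factor, sigma, rng)) == label
            for img, label in zip(images, labels)
        )
        results[(factor, sigma)] = correct / len(images)
    return results


def mean_threshold(img):
    """Placeholder for a trained CNN: threshold on mean brightness."""
    pixels = [p for row in img for p in row]
    return int(sum(pixels) / len(pixels) > 0.5)


# Toy data (assumption): one uniformly dark and one uniformly bright 8x8 image.
images = [[[0.2] * 8 for _ in range(8)], [[0.8] * 8 for _ in range(8)]]
labels = [0, 1]

accuracy = sweep(images, labels, mean_threshold,
                 factors=[1, 2, 4], sigmas=[0.0, 0.05])
for setting, acc in sorted(accuracy.items()):
    print(setting, acc)
```

The resulting table of accuracy per setting is the kind of surface that could then be traded off against cost when choosing sensor parameters.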

In these experiments, we showed that human and machine visual recognition performance can differ significantly. We also showed that the General Image Quality Equation (GIQE) does not correspond to CNN performance within the observed parameter space. Beyond comparisons to human vision, we demonstrated that visual recognition performance for CNNs is self-consistent under a variety of conditions. Most importantly, we showed that our method generalizes to unseen data.

An implication of this work is that any overhead system that (1) was designed to optimize the National Imagery Interpretability Rating Scale (NIIRS) using the GIQE and (2) produces imagery that is analyzed with CNNs is not optimally designed for that use. Designing systems with the methods described in this paper could yield systems better suited to CNN processing. Further, these methods can be applied to any visual recognition model. When a visual recognition model superior to CNNs is introduced, the same study can be conducted and compared against the results presented here.
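For readers unfamiliar with the GIQE discussed above, the sketch below evaluates the commonly published version 4 form of the equation, which predicts a NIIRS rating from ground sample distance, relative edge response, edge overshoot, noise gain, and signal-to-noise ratio. The report does not state which GIQE version was used in the experiments, so the choice of version 4 is an assumption, and the function and parameter names are illustrative only.

```python
import math


def giqe4_niirs(gsd_inches, rer, overshoot, noise_gain, snr):
    """Predicted NIIRS from the GIQE version 4 form (assumed version).

    gsd_inches : geometric-mean ground sample distance, in inches
    rer        : geometric-mean relative edge response
    overshoot  : geometric-mean edge overshoot (H)
    noise_gain : noise gain from image sharpening (G)
    snr        : signal-to-noise ratio
    """
    # The GSD and RER coefficients switch at an edge response of 0.9.
    if rer >= 0.9:
        a, b = 3.32, 1.559
    else:
        a, b = 3.16, 2.817
    return (10.251
            - a * math.log10(gsd_inches)
            + b * math.log10(rer)
            - 0.656 * overshoot
            - 0.344 * noise_gain / snr)


# Illustrative inputs only; these are not values from the report.
coarse = giqe4_niirs(gsd_inches=20.0, rer=0.95, overshoot=1.0, noise_gain=1.0, snr=50.0)
fine = giqe4_niirs(gsd_inches=10.0, rer=0.95, overshoot=1.0, noise_gain=1.0, snr=50.0)
print(f"NIIRS gain from halving GSD: {fine - coarse:.2f}")
```

In this form, halving the ground sample distance raises the predicted rating by about one NIIRS level, which reflects the human-interpretability scaling that the experiments found does not carry over to CNN performance.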

Federal agencies have expressed significant interest in this research, which has the potential to be expanded in both theoretical and practical directions. Additional theoretical work could include using our methods to develop a new version of the GIQE that computes a version of the NIIRS specifically for machine recognition. A possible practical application would be to apply our methods to a specific design problem, such as designing a satellite imaging system under a budget limit and recognition-capability requirements.

References

Dalal, N. and B. Triggs. 2005. "Histograms of Oriented Gradients for Human Detection." In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2005. Volume 1, pp. 886–893. IEEE.

Heinze, N., et al. 2008. "Automatic Image Exploitation System for Small UAVs." In Airborne Intelligence, Surveillance, Reconnaissance (ISR) Systems and Applications V, Volume 6946, p. 69460G. International Society for Optics and Photonics.

Krizhevsky, A., I. Sutskever, and G. E. Hinton. 2012. "ImageNet Classification with Deep Convolutional Neural Networks." In Advances in Neural Information Processing Systems, 1097–1105. NIPS.

LeCun, Y., et al. 1999. "Object Recognition with Gradient-Based Learning." In Shape, Contour and Grouping in Computer Vision, edited by D. A. Forsyth, et al. 319–345. Springer.

Moody, D. I., et al. 2016. "Building a Living Atlas of the Earth in the Cloud." In 2016 50th Asilomar Conference on Signals, Systems and Computers, 1273–1277. IEEE.

Ojala, T., M. Pietikainen, and T. Maenpaa. 2002. "Multiresolution Gray-Scale and Rotation Invariant Texture Classification with Local Binary Patterns." In IEEE Transactions on Pattern Analysis and Machine Intelligence 24(7), 971–987.

Viola, P. and M. Jones. 2001. "Rapid Object Detection Using a Boosted Cascade of Simple Features." In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2001. Volume 1, pp. I–I. IEEE.

Voigt, S., et al. 2007. "Satellite Image Analysis for Disaster and Crisis-Management Support." In IEEE Transactions on Geoscience and Remote Sensing 45(6), 1520–1528.

Warren, M. S., et al. 2015. "Seeing the Earth in the Cloud: Processing One Petabyte of Satellite Imagery in One Day." In IEEE Applied Imagery Pattern Recognition Workshop, 2015. pp. 1–12. IEEE.

Wulder, M. A. and N. C. Coops. 2014. "Make Earth Observations Open Access: Freely Available Satellite Imagery Will Improve Science and Environmental-Monitoring Products." Nature 513 (7516), 30–32.

Publications and Presentations

Jaffe, L. W., et al. 2017. "Relating Satellite Imaging to Data Separability." LLNL-PROP-742437.

Jaffe, L. W., M. E. Zelinski, and W. A. Sakla. 2018. "Remote Sensor Design for Automated Visual Recognition." LLNL Data Science Institute Workshop, Livermore, CA. LLNL-PRES-755028.