Chunhua Liao (12-ERD-026)

Abstract

We studied the feasibility of a programming model framework and demonstrated how it can be used to construct programming models for heterogeneous architectures that are tailored to different application requirements. The framework is written in C++. Its components, referred to as programming model building blocks, have been released iteratively with the ROSE compiler framework under a BSD-style open-source license, which gives users in both the research and application-developer communities maximum freedom. Users can also contribute new components, continually extending the functionality of the framework. Within this framework, we developed a wide range of reusable, customizable language, compiler, and run-time building blocks to address the performance, heterogeneity, and resilience challenges of modern high-performance computing node architectures. We further developed example programming models that support both single and multiple graphics processing units and demonstrated competitive performance with them. Evaluations using computational kernels and DOE mini-applications showed that the approach is feasible and beneficial. The resulting framework is an important contribution to the software foundations of high-performance computing, permitting software teams not only to write applications but also to design programming models tailored to them.

Background and Research Objectives

High-performance computing at the exascale will require node architectures that have thousands of cores, deep memory hierarchies, and heterogeneous components. This will significantly increase the complexity of designing and adopting programming models that map applications to these architectures. Coupled with the fact that standardized node-level programming models, such as OpenMP, often lag several years behind their target architectures, a significant risk exists that no model will be available for programming exascale architectures when the machines are finally deployed. We explored a novel approach to addressing the exascale programming model challenge: creating an open framework to assist users (both programming-model researchers and application developers) in building node-level programming models.

Programming models are categorized by the abstract machines they present to the application developer. Programming models for sequential machines can be expressed in common general-purpose languages (e.g., Fortran, C, or C++). Parallel programming models are usually expressed in these same general-purpose languages plus either library support (for example, the Message Passing Interface, MPI) or the combined support of language extensions, a compiler, and a run-time library (for example, OpenMP). This approach to building new programming models is well established and commonly used within LLNL.

Unfortunately, given the conflicting goals of performance, programmability, and portability, it is difficult to design or evolve programming models that can effectively represent fast-changing architecture designs and accommodate vastly diverse application domains. Creating a programming model is a complex task that requires a balanced allocation of responsibility and close collaboration among the layers of the software stack. Any single design goal (such as parallelism) can be achieved either by delegating it entirely to one level (such as a parallelizing compiler) or by relying on seamless collaboration among several levels (such as a combination of parallel language extensions, compiler transformations, and run-time library support). Future exascale machines are expected to have interconnected computation nodes with thousands of cores, deep memory hierarchies, and heterogeneous components (central processing units and graphics processing units). These machines will introduce even more challenges in parallelism, locality, heterogeneity, energy efficiency, and resilience, in addition to the challenge of standardized node-level programming models lagging behind their target architectures. Solving this looming problem requires the ability to explore the node-level design space of programming models efficiently. Application developers must also be able to easily tailor programming models to their own domain-specific needs.

Our objective was to create a framework that reduces the cost and complexity of designing, implementing, and adopting programming models. By enabling rapid prototyping of programming model designs, the framework helps computer scientists explore the node-level design space and makes challenging exascale issues more tractable. It also enables experienced application developers and multidisciplinary teams to build their own customized programming models to address exascale issues within their domains. A comprehensive framework that addresses all exascale architectures was beyond the scope of the project. Instead, we evaluated and demonstrated the concept within the narrower scope of mapping applications to heterogeneous architectures (that is, nodes with central processing units and graphics processing units).

Scientific Approach and Accomplishments

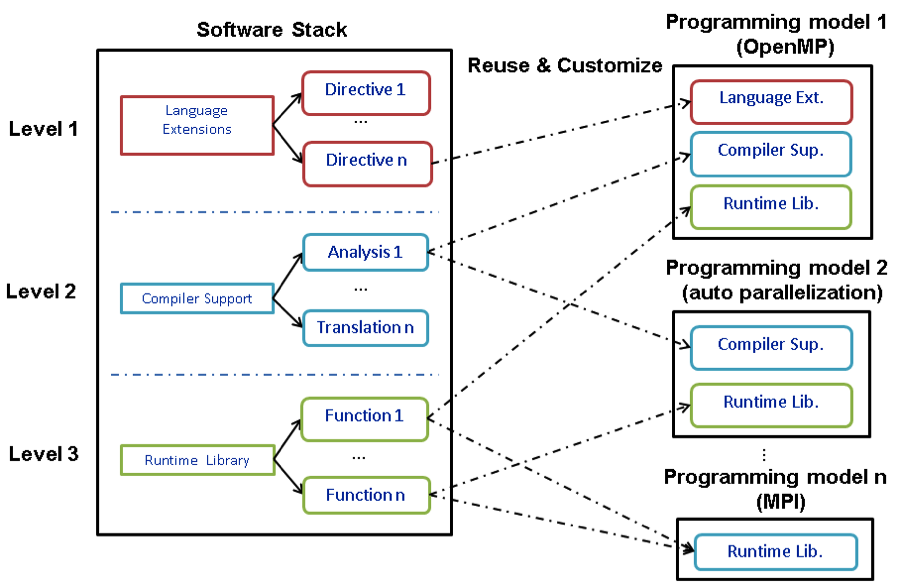

We took a building-block approach to the programming model challenge of exascale computing. All language, compiler, and run-time building blocks are organized in a programming model framework that facilitates the creation of new programming models. Our framework, X-GEN (shown in Figure 1), exposes three levels of the software stack (language, compiler, and run-time library) to its users, who include both programming model researchers and experienced application developers. At each of these levels, X-GEN provides fundamental building blocks common to all node-level programming models. The first level, language, consists of directives expressing semantics related to parallel computing, such as hardware devices, data distribution, loop scheduling, barriers, and synchronization. The second level, compiler, provides transformation and analysis building blocks, such as loop tiling, loop unrolling, side-effect analysis, and dependence analysis. The third level, run-time, provides building blocks that support thread management, loop scheduling, data management, and so on.
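
The sketch below makes one compiler-level building block concrete. It shows loop tiling applied by hand to a matrix-transpose loop nest. It is illustrative C++, not output from X-GEN or ROSE. The function names and the tile-size parameter `T` are assumptions. A tiling building block would perform the rewrite from the first function to the second automatically, once dependence analysis confirms that reordering the iterations is legal.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Original loop nest: out = transpose(in), where `in` is rows x cols, row-major,
// and `out` has already been sized to rows * cols elements.
void transpose_naive(const std::vector<double>& in, std::vector<double>& out,
                     std::size_t rows, std::size_t cols) {
  for (std::size_t i = 0; i < rows; ++i)
    for (std::size_t j = 0; j < cols; ++j)
      out[j * rows + i] = in[i * cols + j];
}

// The same nest after tiling both loops by T (an assumed tuning parameter).
// The outer pair of loops walks over T x T tiles; the inner pair stays within one
// tile, so the parts of `in` and `out` it touches are more likely to stay in cache.
void transpose_tiled(const std::vector<double>& in, std::vector<double>& out,
                     std::size_t rows, std::size_t cols, std::size_t T) {
  assert(T > 0);
  for (std::size_t ii = 0; ii < rows; ii += T)
    for (std::size_t jj = 0; jj < cols; jj += T)
      for (std::size_t i = ii; i < std::min(ii + T, rows); ++i)
        for (std::size_t j = jj; j < std::min(jj + T, cols); ++j)
          out[j * rows + i] = in[i * cols + j];
}
```

Both functions produce identical output for any T > 0. Only the iteration order changes, which is what makes tiling a semantics-preserving, performance-only transformation.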

With this framework, researchers and developers can combine selected building blocks to instantiate a new programming model. For example, a user could add building blocks to an existing programming model to address the data-locality challenge of exascale. As another example, a user designing a brand-new programming model for a heterogeneous node with central and graphics processing units could quickly build a work-queue threading strategy in which tasks are queued (on both types of processing units) and dispatched for execution when resources become idle. At the language level, the application is written in C++ and OpenCL and annotated with pragmas provided by the framework that mark tasks for either central or graphics processing units (#device) and the dependence relationships among them (#depend on). At the compiler level, analysis and translation tools from the framework generate the tasks and the data transfers between the processors. At the run-time level, a task scheduler selected from the run-time building blocks (taskSchedule()) issues tasks and synchronizes execution across the units. The application then consists of high-level code and a programming model, both specified by the user: the high-level code describes the algorithm, and the programming model describes its mapping to the heterogeneous architecture. To port the application to a new architecture, such as Blue Gene/Q, the expectation is that only the programming model would need to be modified.
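
The work-queue strategy can be sketched in a few lines of C++. Everything in the sketch is hypothetical. The `Device` enum stands in for what a `#device` pragma would select, and `depends_on` stands in for `#depend on`. `schedule_tasks()` is a simplified, sequential stand-in for a run-time scheduler such as taskSchedule(), whose real interface is not described here. In each step, every idle device takes the first queued task targeted at it whose prerequisites have finished.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <stdexcept>
#include <string>
#include <vector>

// What a "#device" annotation selects for a task (hypothetical encoding).
enum class Device { CPU, GPU };

struct Task {
  std::string name;
  Device device;
  std::vector<std::size_t> depends_on;  // "#depend on": indices of prerequisite tasks
};

// Hypothetical stand-in for a run-time scheduler such as taskSchedule().
// All tasks start in one shared queue. In each step, every idle device (one CPU
// worker and one GPU worker here) takes the first queued task targeted at it whose
// prerequisites have finished; tasks issued in a step finish at the end of that step.
// Returns, for each step, the names of the tasks issued in it.
std::vector<std::vector<std::string>> schedule_tasks(const std::vector<Task>& tasks) {
  std::vector<bool> done(tasks.size(), false);
  std::deque<std::size_t> queue;
  for (std::size_t t = 0; t < tasks.size(); ++t) queue.push_back(t);

  std::vector<std::vector<std::string>> steps;
  while (!queue.empty()) {
    std::vector<std::size_t> issued;
    for (Device d : {Device::CPU, Device::GPU}) {
      for (auto it = queue.begin(); it != queue.end(); ++it) {
        const Task& task = tasks[*it];
        bool ready = (task.device == d);
        for (std::size_t dep : task.depends_on) {
          assert(dep < tasks.size());
          ready = ready && done[dep];
        }
        if (ready) {
          issued.push_back(*it);
          queue.erase(it);  // safe: scanning stops right after the erase
          break;
        }
      }
    }
    if (issued.empty()) throw std::runtime_error("cyclic or unsatisfiable dependences");
    std::vector<std::string> names;
    for (std::size_t t : issued) {
      done[t] = true;
      names.push_back(tasks[t].name);
    }
    steps.push_back(names);
  }
  return steps;
}
```

Consider four tasks: a CPU task "load", a GPU task "stencil" that depends on it, an independent CPU task "io", and a CPU task "reduce" that depends on "stencil". The schedule takes three steps: {load}, {io, stencil}, {reduce}. The independent CPU task keeps the CPU busy while the GPU works, which is the point of queuing tasks for both kinds of units.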

This project began in the second quarter of FY12. During FY12, we focused on defining programming model building blocks that address baseline parallelism and data locality. Specifically, we studied representative applications and kernels, manually translated them to use graphics processing units, and developed three levels of building blocks: (1) language-level directives to express parallelism and data transfer for graphics processing units; (2) compiler-level building blocks for directive parsing, kernel extraction, and instrumentation support; and (3) run-time support for common programming tasks on graphics processing units, including memory allocation and data transfer. We also worked with student interns to explore compiler optimizations for power efficiency and a software-based redundancy transformation that addresses the resilience challenge of exascale computing.1,2
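
The run-time support for memory allocation and data transfer can be illustrated with a small device data environment. It maps host buffers to device buffers and skips transfers for data that is already resident. This is a hypothetical sketch with several assumptions. The class and method names are invented. Reference counting is an assumed design, mirroring how OpenMP accelerator runtimes track nested data regions. Device memory is simulated with host vectors so that the code runs without a GPU.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <utility>
#include <vector>

// Hypothetical sketch of a run-time data-management building block.
// "Device memory" is simulated with host vectors so the sketch runs anywhere;
// a GPU implementation would allocate and copy with the accelerator API instead.
class DeviceDataEnv {
 public:
  // Map `count` doubles starting at `host` onto the device. Allocation and the
  // host-to-device copy happen only if the buffer is not already present;
  // otherwise the existing device copy is reused and its reference count bumped.
  double* map_to(const double* host, std::size_t count) {
    auto it = table_.find(host);
    if (it != table_.end()) {
      ++it->second.refs;
      return it->second.dev.data();
    }
    Entry e{std::vector<double>(host, host + count), 1};  // allocate + copy in
    ++transfers_;
    return table_.emplace(host, std::move(e)).first->second.dev.data();
  }

  // Drop one reference. When the last one goes away, optionally copy the device
  // data back to `host`, then free the device buffer.
  void unmap(double* host, bool copy_back) {
    auto it = table_.find(host);
    assert(it != table_.end() && "buffer was never mapped");
    if (--it->second.refs > 0) return;
    if (copy_back) {
      std::copy(it->second.dev.begin(), it->second.dev.end(), host);  // device -> host
      ++transfers_;
    }
    table_.erase(it);
  }

  std::size_t transfers() const { return transfers_; }

 private:
  struct Entry {
    std::vector<double> dev;  // simulated device buffer
    std::size_t refs;         // number of enclosing data regions using it
  };
  std::map<const double*, Entry> table_;
  std::size_t transfers_ = 0;
};
```

If nested data regions map the same array twice, only one transfer in occurs. The copy back happens only when the outermost region releases the buffer.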

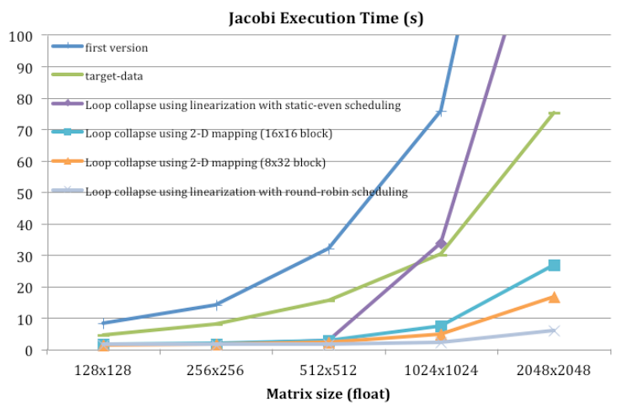

In FY13, we investigated advanced analysis and optimization building blocks to improve the performance and power efficiency of programming models built on the framework. Specifically, we (1) designed and implemented power-management building blocks for important computation kernels, (2) optimized data locality to make better use of the memory hierarchy of graphics processing units, (3) worked with student interns to explore resilience and correctness building blocks,3,4 and (4) released the first nontrivial programming model built with the framework, Heterogeneous OpenMP (HOMP).5 Figure 2 shows performance variants of the Jacobi kernel that use different building blocks designed to support NVIDIA graphics processing units.

HOMP automatically translates code annotated with OpenMP accelerator directives into CUDA (Compute Unified Device Architecture) code. Through its other major features, including CUDA kernel generation, data offloading, reduction, and data reuse, we demonstrated how building blocks can be used to address exascale challenges such as heterogeneity and energy efficiency.
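
The following Jacobi solver shows the kind of input HOMP accepts. It is annotated with OpenMP accelerator directives, the style of code such a translator turns into CUDA kernels plus host-side allocation and transfer calls. It is an illustrative sketch, not a HOMP test case. It uses OpenMP 4.5 combined-construct syntax, whereas HOMP targeted the OpenMP 4.0 accelerator model, whose supported clauses may differ. The grid layout and convergence test are also assumptions. The `target data` region corresponds to data offloading and reuse, and `reduction(max: err)` corresponds to the reduction support described above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Jacobi iteration for the 2-D Laplace equation on an n x n row-major grid whose
// boundary values stay fixed. Directives use OpenMP 4.5-style accelerator syntax;
// built without offload support (or without -fopenmp) the loops run on the host.
// Returns the number of sweeps performed.
int jacobi(std::vector<double>& grid, int n, double tol, int max_iters) {
  assert(n >= 3 && grid.size() == static_cast<std::size_t>(n) * n);
  std::vector<double> scratch(grid.size());
  double* u = grid.data();
  double* v = scratch.data();
  const int len = n * n;
  int iters = 0;
  double err = tol + 1.0;

  // Data offloading/reuse: u and v stay resident on the device for all sweeps,
  // so u is copied in and out once rather than once per sweep.
  #pragma omp target data map(tofrom: u[0:len]) map(alloc: v[0:len])
  while (err > tol && iters < max_iters) {
    err = 0.0;
    // Kernel generation plus reduction: the max-reduction on err is combined
    // across all device threads and copied back to the host each sweep.
    #pragma omp target teams distribute parallel for collapse(2) map(tofrom: err) reduction(max: err)
    for (int i = 1; i < n - 1; ++i)
      for (int j = 1; j < n - 1; ++j) {
        v[i * n + j] = 0.25 * (u[(i - 1) * n + j] + u[(i + 1) * n + j] +
                               u[i * n + j - 1] + u[i * n + j + 1]);
        err = std::max(err, std::fabs(v[i * n + j] - u[i * n + j]));
      }
    // Copy the new interior back into u (still on the device) for the next sweep.
    #pragma omp target teams distribute parallel for collapse(2)
    for (int i = 1; i < n - 1; ++i)
      for (int j = 1; j < n - 1; ++j)
        u[i * n + j] = v[i * n + j];
    ++iters;
  }
  return iters;
}
```

Compiled without OpenMP, the pragmas are ignored. Compiled with OpenMP but no offload device, the target regions fall back to the host. Either way, the same source can serve as a correctness reference for the offloaded version.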

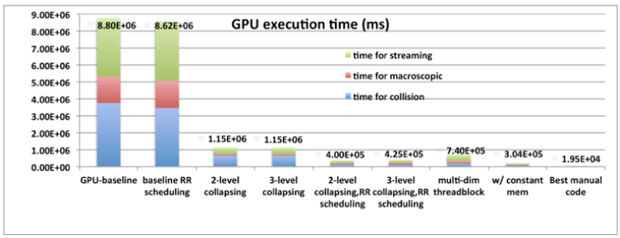

In FY14, we investigated new building blocks for node-level programming models and used them to explore the design of new programming models. In particular, we (1) added optimization building blocks for heterogeneous computing on NVIDIA graphics processing units, including compile-time loop collapsing to expose more parallelism and a run-time round-robin loop scheduler; (2) demonstrated the effectiveness of these building blocks by incorporating them into the OpenMP 4.0 accelerator programming model; (3) documented the overall concept of the project and how it enables customization and hybridization, using as a test application a miniature application derived from BoxLib, a real-world software library for writing parallel, block-structured adaptive mesh-refinement applications; and (4) evaluated OpenMP 4.0 directives using two representative DOE stencil mini-applications.6 Figure 3 shows one of the key results of this work: a set of building blocks is applied incrementally to improve the performance of a mini-application running on central- and graphics-processing-unit platforms.
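
The two FY14 optimization building blocks can be sketched together. Loop collapsing flattens a two-level nest into one loop, so the number of independent iterations available to the GPU is ni*nj rather than ni. OpenMP expresses the same transformation as `collapse(2)`. Round-robin scheduling then deals chunks of the flattened space to workers in turn. The function names, the chunk size, and the worker abstraction are assumptions. The workers run sequentially here only so that the sketch is portable.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Loop collapsing (done at compile time): the 2-level nest
//   for (i = 0; i < ni; ++i) for (j = 0; j < nj; ++j) body(i, j);
// is rewritten as a single loop over k in [0, ni*nj), with (i, j) recovered from k.
// This function runs the collapsed iterations k in [begin, end).
template <typename Body>
void collapsed_range(std::size_t nj, std::size_t begin, std::size_t end, Body&& body) {
  for (std::size_t k = begin; k < end; ++k) body(k / nj, k % nj);
}

// Round-robin loop scheduling (done at run time), as a hypothetical sketch:
// the collapsed iteration space is cut into chunks of `chunk` iterations, and
// chunk c is given to worker c % workers (a worker might be a GPU thread block).
// Workers are simulated one after another here; on a GPU they would run concurrently.
template <typename Body>
void round_robin(std::size_t ni, std::size_t nj, std::size_t chunk,
                 std::size_t workers, Body&& body) {
  assert(chunk > 0 && workers > 0);
  const std::size_t total = ni * nj;
  for (std::size_t w = 0; w < workers; ++w)
    for (std::size_t start = w * chunk; start < total; start += workers * chunk)
      collapsed_range(nj, start, std::min(start + chunk, total),
                      [&](std::size_t i, std::size_t j) { body(w, i, j); });
}
```

With ni = 5, nj = 7, chunks of 4, and 3 workers, every (i, j) pair runs exactly once. Flattened iterations 0, 4, 8, and 12 go to workers 0, 1, 2, and 0.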

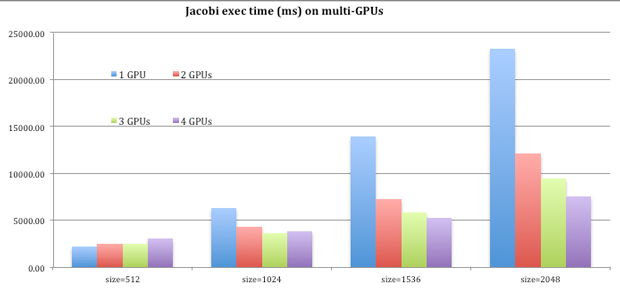

In FY15, we focused on new building blocks that exploit unique accelerator memory features and on scaling beyond a single accelerator. In particular, we (1) investigated building blocks that exploit the local scratch-pad memory of computational accelerators and proposed a new directive, cache(), to exploit the constant and shared memory of NVIDIA graphics processing units; (2) developed novel language directives (data_distribute()) to express semantics related to support for multiple graphics processing units, including data and computation distribution; and (3) designed and developed compiler and run-time support for multiple graphics processing units, including a two-stage translation strategy and a data-management run-time.7 Figure 4 shows published performance results for the Jacobi stencil computation using the multiple-graphics-processing-unit building blocks developed in X-GEN.7
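
The data-distribution semantics that a directive like data_distribute() expresses can be illustrated through the bookkeeping a compiler or run-time must do when a stencil grid is split by rows across several devices. The sketch assumes a BLOCK distribution with a fixed halo width. `Partition` and `block_distribute()` are hypothetical names, not the X-GEN interface, and the actual clauses of data_distribute() are not specified here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of what a BLOCK-style data distribution across `ndev`
// accelerators might compute for an n-row stencil grid: each device owns a
// contiguous band of rows and also needs `halo` neighbor rows on each side
// (clamped at the grid edges) that must be exchanged after every sweep.
struct Partition {
  std::size_t own_begin, own_end;    // rows this device computes: [own_begin, own_end)
  std::size_t data_begin, data_end;  // rows this device must hold, halo included
};

std::vector<Partition> block_distribute(std::size_t n, std::size_t ndev, std::size_t halo) {
  assert(ndev > 0);
  std::vector<Partition> parts;
  const std::size_t base = n / ndev, extra = n % ndev;  // first `extra` devices get one more row
  std::size_t begin = 0;
  for (std::size_t d = 0; d < ndev; ++d) {
    const std::size_t end = begin + base + (d < extra ? 1 : 0);
    parts.push_back({begin, end, begin >= halo ? begin - halo : 0, std::min(end + halo, n)});
    begin = end;
  }
  return parts;
}
```

Take 10 rows, 3 devices, and a 1-row halo. The owned bands are rows [0, 4), [4, 7), and [7, 10), and the devices hold rows [0, 5), [3, 8), and [6, 10), respectively. The overlapping rows are the ones that must be exchanged between devices after each sweep.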

Impact on Mission

Ensuring that applications work well on current and future high-performance computing architectures is essential to every mission at the Laboratory. As new architectures become available, programming models will need to be updated or even overhauled to adapt applications to them. Our project has developed in-house expertise in new programming models that will help the Laboratory design and use high-performance computers, in support of LLNL’s core competency in high-performance computing, simulation, and data science.

Conclusion

We developed in-house expertise for experimenting with new node-level programming models that help both hardware vendors design new machines and application developers use them. By providing a versatile framework for constructing future programming models, our work significantly shortens the time required to design and adopt the new programming models that must accompany exascale machines. The prototype implementation, HOMP, enabled us to be the first to thoroughly evaluate the OpenMP accelerator model for porting DOE stencil mini-applications to heterogeneous computers. Our framework extends the high-performance computing software stack, permitting software teams not only to write applications but also to design programming models tailored to those applications. The results of this project show that the building-block approach to designing exascale node-level programming models is feasible and effective. Focusing on the heterogeneity challenge of exascale node architectures, we demonstrated that a programming model framework with reusable building blocks can dramatically reduce the time needed to develop new programming models while achieving competitive performance. The programming models built with our framework also give advanced developers new opportunities to further customize the models to fit their specific application needs.

In the future, we are interested in extending the scope to distributed-memory systems with multiple computation nodes. We will explore new language extensions to our existing node-level programming model that express semantics related to single-program, multiple-data (SPMD) computations and guide compilers to automatically generate message passing interface (MPI) code for clusters. The resulting uniform programming model will open new opportunities to study the seamless integration of on-node and beyond-node parallelism for exascale computing.

References

- Lidman, J., et al., ROSE::FTTransform—A source-to-source translation framework for exascale fault-tolerance research. 2nd Intl. Workshop Fault-Tolerance for HPC at Extreme Scale (FTXS 2012), Boston, MA, June 25–28, 2012. LLNL-CONF-541631.

- Rahman, S., et al., Studying the impact of application-level optimizations on the power consumption of multi-core architectures. ACM Intl. Conf. Computing Frontiers, Cagliari, Italy, May 15–17, 2012. LLNL-CONF-599780.

- Lidman, J., et al., An automated performance-aware approach to reliability transformations. Euro-Par 2014 Parallel Processing 20th Intl. Conf., Porto, Portugal, Aug. 25–29, 2014. LLNL-CONF-658972.

- Ma, H., et al., Symbolic analysis of concurrency errors in OpenMP programs. 2013 Intl. Conf. Parallel Processing, 42nd Ann. Conf., Lyon, France, Oct. 1–4, 2013. LLNL-CONF-640048.

- Liao, C., et al., Early experiences with the OpenMP accelerator model. OpenMP in the Era of Low Power Devices and Accelerators, vol. 8122, p. 84. Springer, New York, NY (2013). LLNL-CONF-636479.

- Lin, P., et al., Experiences of using the OpenMP accelerator model to port DOE stencil applications. 11th Intl. Workshop OpenMP, Aachen, Germany, Oct. 1–2, 2015. LLNL-CONF-665282.

- Yan, Y., et al., Supporting multiple accelerators in high-level programming models. Proc. 6th Intl. Workshop Programming Models and Applications for Multicores and Manycores (PMAM '15), p. 170 (2015). LLNL-CONF-663596.

Publications and Presentations

- Liao, C., et al., Early experiences with the OpenMP accelerator model. OpenMP in the Era of Low Power Devices and Accelerators, vol. 8122, p. 84. Springer, New York, NY (2013). LLNL-CONF-636479.

- Lidman, J., et al., An automated performance-aware approach to reliability transformations. Euro-Par 2014 Parallel Processing 20th Intl. Conf., Porto, Portugal, Aug. 25–29, 2014. LLNL-CONF-658972.

- Lidman, J., et al., ROSE::FTTransform—A source-to-source translation framework for exascale fault-tolerance research. 2nd Intl. Workshop Fault-Tolerance for HPC at Extreme Scale (FTXS 2012), Boston, MA, June 25–28, 2012. LLNL-CONF-541631.

- Lin, P., et al., Experiences of using the OpenMP accelerator model to port DOE stencil applications. 11th Intl. Workshop OpenMP, Aachen, Germany, Oct. 1–2, 2015. LLNL-CONF-665282.

- Ma, H., et al., Symbolic analysis of concurrency errors in OpenMP programs. 2013 Intl. Conf. Parallel Processing, 42nd Ann. Conf., Lyon, France, Oct. 1–4, 2013. LLNL-CONF-640048.

- Rahman, S., et al., Studying the impact of application-level optimizations on the power consumption of multi-core architectures. ACM Intl. Conf. Computing Frontiers, Cagliari, Italy, May 15–17, 2012. LLNL-CONF-599780.

- Royuela, S., et al., Auto-scoping for OpenMP tasks. Intl. Workshop OpenMP (2012), Rome, Italy, June 11–13, 2012. LLNL-CONF-534493.

- Yan, Y., et al., Supporting multiple accelerators in high-level programming models. Proc. 6th Intl. Workshop Programming Models and Applications for Multicores and Manycores (PMAM '15), p. 170 (2015). LLNL-CONF-663596.