Maya Gokhale (13-ERD-025)

Abstract

Anticipating the unfolding revolution in memory technology, we pursued research to devise system software and memory hardware that optimize memory access for both data science and scientific applications. Key targets were (1) capacity: near-seamless out-of-core execution on large data sets spanning dynamic random-access memory (DRAM) and nonvolatile random-access memory (NVRAM); and (2) irregular, cache-unfriendly accesses, particularly indirect access through index arrays and other noncontiguous access patterns.

We prototyped and quantitatively evaluated a data-centric compute node memory architecture. Its large unified memory/storage subsystem combines DRAM and NVRAM, giving individual compute nodes access to multi-terabyte-scale memory at a fraction of the cost and power of present-day DRAM-only memory subsystems. Applications access the unified memory through the data-intensive memory map (DI-MMAP), a Linux operating system module developed in the project [1] that delivered up to a fourfold speedup over standard Linux on a metagenomics application [2]. The module is deployed on the LLNL Catalyst cluster as part of the standard software environment.

Using field-programmable gate arrays, we developed prototype active memory and storage controllers that accelerate memory-bound, data-intensive applications by up to 3.4x while reducing energy by up to 2.6x [3]. The active controller logic operates within the memory or storage subsystem, offloading application-specific computation and data access from the main central processing unit. The active memory controller hardware blocks were the basis of a record of invention and a subsequent patent application. Our work also won the Best Paper award at the 2015 International Symposium on Memory Systems in Washington, D.C. [4]

To validate the unified active memory, we developed a massively parallel, throughput-oriented, data-centric graph traversal framework and demonstrated superior performance that scales from a single NVRAM-augmented central processing unit up to 131,000 cores of an IBM BG/P supercomputer [5]. A streamline-tracing scientific data-analysis application that uses the DI-MMAP unified memory-access Linux module has enabled novel locality-preserving algorithms well suited to future exascale memory systems [6].

Background and Research Objectives

Recent trends in central processing unit architecture indicate that future processors will integrate many cores on a single die, with far less memory available to each core than in today's architectures. This drastic reduction in memory per core stems from the high cost of DRAM in both power and dollars. The looming limits on memory bandwidth and capacity will affect high-performance computing applications on exascale supercomputers. Data-intensive computing is characterized by very large application working sets and increasingly unstructured, irregular data access patterns, so data-centric applications are affected much more by memory latency, bandwidth, and capacity limitations than traditional high-performance computing applications. We proposed to design, prototype, and evaluate a data-centric node architecture consisting of a many-core central processing unit, a large memory that seamlessly combines DRAM and NVRAM, and an active storage controller based on a field-programmable gate array that can run data-intensive kernels accessing NVRAM.

Recent trends in computer system architecture suggest both roadblocks and new opportunities in memory and storage. DRAM capacity, bandwidth, and latency will become increasingly constrained by the limits of feature-size scaling and by power requirements. At the same time, new forms of NVRAM are emerging with density, latency, bandwidth, power, and endurance characteristics that may revolutionize memory system architectures.

These effects are particularly relevant to data-intensive computing. Over the past few years, it has become apparent that in the transition from multi-petascale to exascale computing, high-performance computing in the coming decade will face the same challenges that confront data-centric applications today. Without research into new memory system architectures and related software, the present architectural trends will severely impact LLNL's data science and future exascale applications.

Our objective was to propose, design, prototype, and evaluate a unified active memory/storage system that addresses these challenges, with the evaluation conducted on a set of representative data-centric problems.

Scientific Approach and Accomplishments

The project had three major thrusts: (1) DI-MMAP, which uses new caching algorithms to give applications memory-like latency to terabytes of compute-node-local solid-state disk storage; (2) application acceleration through processing near memory and storage (Minerva active storage and the data rearrangement engine); and (3) evaluation through continued development of a graph traversal framework (the highly asynchronous visitor queue graph traversal, or Havoq-GT) as a driving data-science application, and of streamline tracing as a scientific data-analysis application.

Data-intensive Memory Map (DI-MMAP)

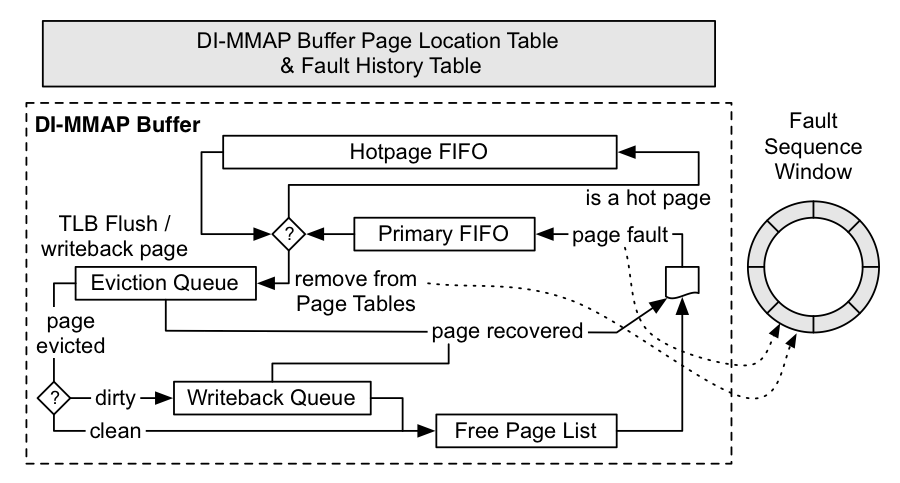

The DI-MMAP runtime module integrates NVRAM into the compute node's memory hierarchy to enable scalable computations on large out-of-core datasets. An external file in node-local NVRAM is mapped into the application's address space, so the application can access this out-of-core data transparently, as if it were in main-memory DRAM. The approach was optimized so that latency-tolerant applications can remain oblivious to transitions between DRAM and NVRAM when accessing out-of-core data. DI-MMAP's performance scales with increased thread-level concurrency and does not degrade under memory pressure. As shown in Figure 1, DI-MMAP features a fixed-size page buffer, minimal dynamic memory allocation, a simple first-in, first-out (FIFO) buffer replacement policy, preferential caching of frequently accessed NVRAM pages, and a mechanism that exposes page fault activity to running applications.
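The caching behavior described above can be illustrated with a small behavioral model. This is a minimal sketch, not the DI-MMAP kernel code: the class name `FifoPageBuffer`, the `load_page` callback, and the promotion rule (a page that was referenced again while resident is moved to a small "hot" list when the FIFO evicts it) are assumptions chosen to illustrate a fixed-size buffer with FIFO replacement, preferential caching of frequently used pages, and a page fault counter visible to the application.

```python
from collections import OrderedDict
from typing import Callable


class FifoPageBuffer:
    """Hypothetical behavioral model of a fixed-size page buffer (not DI-MMAP itself).

    - Resident pages are evicted in first-in, first-out order.
    - A page that was re-referenced while resident is promoted to a small
      "hot" list on eviction instead of being dropped (assumed policy).
    - `faults` counts buffer misses and can be read by the application.
    """

    def __init__(self, load_page: Callable[[int], bytes], capacity: int, hot_capacity: int):
        if capacity < 1:
            raise ValueError("capacity must be at least one page")
        self.load_page = load_page        # reads one page from the NVRAM-backed file
        self.capacity = capacity          # fixed number of FIFO slots
        self.hot_capacity = hot_capacity  # fixed number of slots for hot pages
        self.fifo = OrderedDict()         # page -> [data, re-referenced flag]
        self.hot = OrderedDict()          # page -> data
        self.faults = 0

    def read(self, page: int) -> bytes:
        if page in self.hot:              # hit in the preferential cache
            return self.hot[page]
        entry = self.fifo.get(page)
        if entry is not None:             # hit in the FIFO: mark as re-referenced
            entry[1] = True               # FIFO position is unchanged
            return entry[0]
        self.faults += 1                  # miss: fetch the page from backing storage
        if len(self.fifo) >= self.capacity:
            self._evict_oldest()
        data = self.load_page(page)
        self.fifo[page] = [data, False]
        return data

    def _evict_oldest(self) -> None:
        victim, (data, referenced) = self.fifo.popitem(last=False)
        if referenced and self.hot_capacity > 0:
            if len(self.hot) >= self.hot_capacity:
                self.hot.popitem(last=False)   # the hot list is also FIFO-ordered
            self.hot[victim] = data
```

Because every miss increments `faults`, an application could sample that counter to adapt its access pattern, which is the kind of page fault feedback the text describes; how DI-MMAP actually exposes this information is not modeled here.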

Active Storage and Memory: Minerva and Data Rearrangement Engine

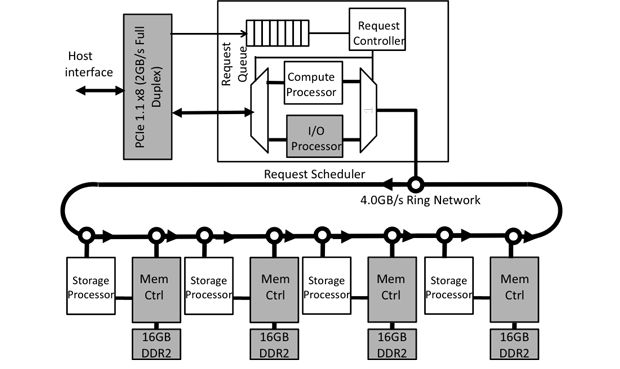

The Minerva active storage controller [7] extends one of the first phase-change memory storage array controllers, developed by the University of California, San Diego storage research group, by incorporating a storage processor module directly connected to each memory controller, as shown in Figure 2. Hardware infrastructure was implemented to schedule and dispatch compute kernels. The design uses a generic command/status mechanism to push computation to the storage controllers and retrieve results. A runtime library lets the programmer offload computations to the solid-state disk without dealing with many of the complications of the underlying architecture and inter-controller communication management. Minerva targets large-scale applications that, rather than reusing data, stream over large data structures or randomly access different locations. Such applications make poor use of existing memory hierarchies and perform poorly on conventional systems because of large input/output overhead. By processing data within the input/output subsystem, Minerva offloads input/output-intensive computation to the storage controller.
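The command/status offload pattern can be sketched in a few lines. This is a minimal, hypothetical model, not the Minerva runtime library: `StorageController`, the kernel table `KERNELS`, and `offload` are illustrative names, and threads stand in for the per-controller storage processors. What it shows is that the host sends only a short command to each controller and receives only small partial results, rather than pulling the raw data across the input/output interface.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

IDLE, BUSY, DONE = "IDLE", "BUSY", "DONE"

# Hypothetical kernels that a storage processor module could run on its local data.
KERNELS: Dict[str, Callable[[List[int], Any], Any]] = {
    "count_matches": lambda shard, key: sum(1 for rec in shard if rec == key),
    "sum_values": lambda shard, _arg: sum(shard),
}


@dataclass
class StorageController:
    shard: List[int]          # records stored behind this memory controller
    status: str = IDLE        # models the status register polled by the host
    result: Any = None        # models the result buffer read back by the host

    def execute(self, kernel: str, arg: Any) -> Any:
        """Run one offloaded command against the local shard."""
        self.status = BUSY
        self.result = KERNELS[kernel](self.shard, arg)
        self.status = DONE
        return self.result


def offload(controllers: List[StorageController], kernel: str, arg: Any,
            combine: Callable[[List[Any]], Any]) -> Any:
    """Push the same command to every controller in parallel, then combine partial results."""
    with ThreadPoolExecutor(max_workers=max(1, len(controllers))) as pool:
        partials = list(pool.map(lambda c: c.execute(kernel, arg), controllers))
    if any(c.status != DONE for c in controllers):
        raise RuntimeError("a controller did not report completion")
    return combine(partials)
```

For example, with three controllers holding `[1, 2, 3, 2]`, `[2, 2, 5]`, and `[]`, `offload(ctrls, "count_matches", 2, sum)` returns 4, and only three small integers cross the host interface.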

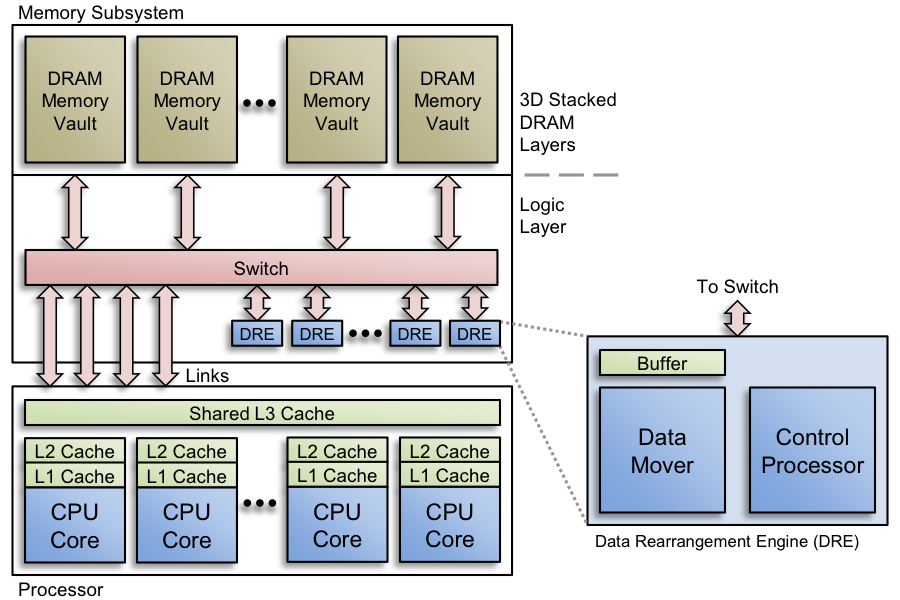

The data rearrangement engine also addresses the widening gap between compute power and memory bandwidth. A large, deep cache hierarchy benefits locality-friendly computation but offers limited improvement for irregular, data-intensive applications. The data rearrangement engine is a novel approach to accelerating irregular applications through in-memory data restructuring. Unlike other proposed processing-in-memory architectures, the rearrangement hardware performs data reduction, not compute offload. As shown in Figure 3, a data rearrangement engine resides in the memory subsystem. Its data mover uses direct memory access and gather/scatter units to collect and rearrange data from the memory vaults into a buffer for subsequent use by the application running on the central processing unit. The engine can also move data from the buffer back into the memory vaults after the central processing unit has updated the buffer.

Using a custom field-programmable gate array emulator, we quantitatively evaluated the performance and energy benefits of near-memory hardware structures that dynamically restructure in-memory data into a cache-friendly layout, minimizing wasted memory bandwidth. Results on representative irregular benchmarks using a Micron Hybrid Memory Cube memory model show speedup, bandwidth savings, and energy reduction in all cases. Application speedup ranges from 1.24x to 4.15x. The number of bytes transferred is reduced by up to 11.69x, reflecting the efficiency of data rearrangement. Energy improvement ranges from 1.49x to 2.7x, and memory access at 8-byte granularity makes an energy reduction of up to 7.84x possible.
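The gather/scatter idea and its effect on bytes transferred to the processor can be sketched as follows. This is a simplified, hypothetical model, not the engine's hardware design: memory is a Python list of 8-byte elements, `gather` and `scatter` stand in for the data mover, and the traffic estimate assumes 64-byte cache lines and a cache large enough that no line is fetched twice, which favors the conventional case. The engine's own 8-byte reads stay inside the memory subsystem and are not counted.

```python
from typing import List, Sequence

ELEM_BYTES = 8     # assumed element size (e.g., a 64-bit value)
LINE_BYTES = 64    # assumed cache-line size of the host processor


def gather(memory: List[float], index: Sequence[int]) -> List[float]:
    """Near-memory gather: pack memory[index[i]] into a contiguous buffer."""
    return [memory[i] for i in index]


def scatter(memory: List[float], index: Sequence[int], buffer: Sequence[float]) -> None:
    """Write updated buffer entries back to their original locations."""
    for i, value in zip(index, buffer):
        memory[i] = value


def host_bytes_direct(index: Sequence[int]) -> int:
    """Bytes crossing the memory interface if the host dereferences index itself.

    Every distinct cache line touched is fetched in full (optimistic: assumes no
    line is ever evicted and refetched).
    """
    lines = {(i * ELEM_BYTES) // LINE_BYTES for i in index}
    return len(lines) * LINE_BYTES


def host_bytes_rearranged(index: Sequence[int]) -> int:
    """Bytes crossing the interface when the host streams the packed buffer."""
    useful = len(index) * ELEM_BYTES
    return -(-useful // LINE_BYTES) * LINE_BYTES   # round up to whole cache lines
```

For an index array of 500 elements spaced 128 bytes apart (`range(0, 8000, 16)`), this model gives 32,000 bytes for direct access versus 4,032 bytes for the packed buffer, roughly an 8x reduction; the measured figures above also reflect effects the model ignores.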

Applications: Graph Traversal and Streamline Tracing

Processing large graphs is becoming increasingly important in many domains, such as social networks and bioinformatics. Unfortunately, many algorithms and implementations do not scale with increasing graph size. To improve scalability and performance on large social-network graphs, we created a novel asynchronous approach to computing graph traversals. The method shows excellent scalability and performance in shared memory, in NVRAM (using DI-MMAP), and in distributed memory. The highly parallel asynchronous approach hides data latency caused by both poor locality and delays in the underlying graph data storage. Experiments on synthetic and real-world datasets showed that the asynchronous approach overcomes data latencies and provides significant speedup over alternative approaches. Breadth-first search using the Havoq-GT framework ranked in the top ten by graph scale in several Graph500 competitions. The results showed excellent scalability on large scale-free graphs up to 131K cores of the IBM BG/P system and outperformed the best known Graph500 performance on BG/P Intrepid by 15%.
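A single-process sketch of the visitor-queue idea follows. It is an illustration under stated assumptions, not Havoq-GT code: `async_bfs`, the adjacency-dict graph format, and the use of Python's `heapq` as the priority visitor queue are assumptions. The key property is that breadth-first levels are computed by label correction, so visitors may be processed in any order without level-synchronous barriers; ordering them by level only reduces redundant work.

```python
import heapq
import itertools
import math
from typing import Dict, Hashable, Iterable, Mapping


def async_bfs(adj: Mapping[Hashable, Iterable[Hashable]], source: Hashable,
              prioritized: bool = True) -> Dict[Hashable, int]:
    """Label-correcting BFS driven by a queue of (level, seq, vertex) visitors.

    With prioritized=True the queue is a min-heap ordered by level, in the spirit of
    a priority visitor queue; with prioritized=False it is a LIFO stack, which mimics
    visitors arriving in an arbitrary order. Both return the same levels.
    """
    seq = itertools.count()              # tie-breaker so vertices need not be comparable
    level = {source: 0}
    queue = [(0, next(seq), source)]
    push = heapq.heappush if prioritized else list.append
    pop = heapq.heappop if prioritized else list.pop
    while queue:
        lvl, _, v = pop(queue)
        if lvl > level[v]:
            continue                      # stale visitor: v was reached by a shorter path
        for w in adj.get(v, ()):
            if lvl + 1 < level.get(w, math.inf):
                level[w] = lvl + 1        # accept the visitor and forward it to neighbors
                push(queue, (lvl + 1, next(seq), w))
    return level
```

In the unordered (LIFO) mode a vertex can first be labeled through a long path and later corrected when a shorter one arrives; the priority ordering avoids most such corrections, which is the motivation for prioritizing visitors when they are scattered across many processes.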

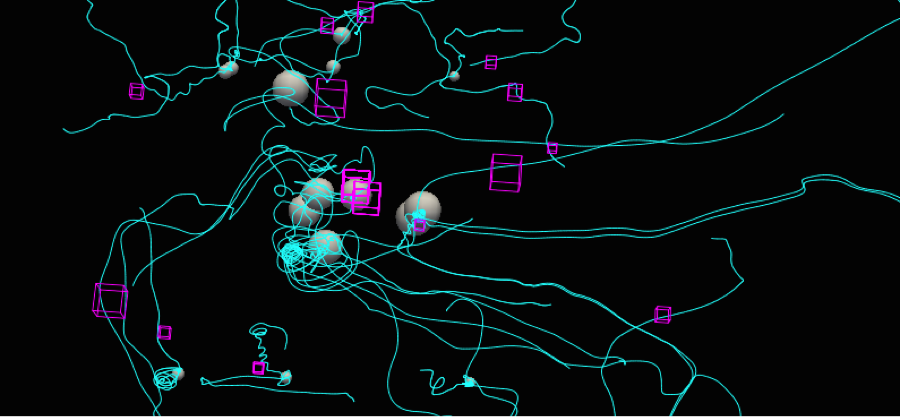

Streamline tracing is an important tool used in many scientific domains for visualizing and analyzing flow fields (Figure 4). A shared-memory, multithreaded approach to streamline tracing that targets emerging data-intensive architectures was developed [8]. The approach was optimized through a comprehensive evaluation of how a variety of parameters affect strong and weak scaling. For out-of-core streamline tracing, the kernel-managed DI-MMAP memory map was found to outperform an optimized user-managed cache.
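The core loop of streamline tracing can be sketched as follows. This is a minimal illustration, not the multithreaded tracer from the cited work: the analytic field, `rk4_step`, `trace_streamline`, `trace_all`, and the thread pool are assumptions. In an out-of-core setting, `field` would instead interpolate velocity from a gridded dataset in a memory-mapped file; because each sample location depends on the previous step, the resulting accesses are data dependent and irregular. Seeds are independent, which makes tracing many streamlines in parallel on a shared-memory node natural.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Field = Callable[[float, float], Point]


def rk4_step(field: Field, p: Point, h: float) -> Point:
    """Advance one fourth-order Runge-Kutta step of size h along the field."""
    x, y = p
    k1 = field(x, y)
    k2 = field(x + 0.5 * h * k1[0], y + 0.5 * h * k1[1])
    k3 = field(x + 0.5 * h * k2[0], y + 0.5 * h * k2[1])
    k4 = field(x + h * k3[0], y + h * k3[1])
    return (x + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
            y + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))


def trace_streamline(field: Field, seed: Point, h: float, max_steps: int,
                     in_domain: Callable[[float, float], bool]) -> List[Point]:
    """Integrate from seed until max_steps is reached or the path leaves the domain."""
    points = [seed]
    for _ in range(max_steps):
        nxt = rk4_step(field, points[-1], h)
        if not in_domain(*nxt):
            break
        points.append(nxt)
    return points


def trace_all(field: Field, seeds: Sequence[Point], h: float, max_steps: int,
              in_domain: Callable[[float, float], bool], workers: int = 4) -> List[List[Point]]:
    """Trace independent seeds concurrently; results are returned in seed order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(
            lambda s: trace_streamline(field, s, h, max_steps, in_domain), seeds))
```

With the rotational field `lambda x, y: (-y, x)`, streamlines are circles about the origin, so a convenient check is that the traced radius stays constant. In Python the thread pool only illustrates the structure; a production tracer would use native threads.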

Impact on Mission

Big data, which requires continuous processing and analysis of sensor, experimental, and simulation data, is a dominant Laboratory challenge that calls for scalable, flexible architectures matched to a wide range of applications and budgets. Our research addresses a critical mission need for data-centric computing and benefits data science applications in both informatics and simulation data analysis, in support of the Laboratory's core competency in high-performance computing, simulation, and data science. The project generated software and associated artifacts that are in current use and established a strong foundation for future research and development.

Conclusion

Our research anticipated the unfolding revolution in memory technology and produced system software and memory hardware that optimize memory access for both data science and scientific applications. Key targets were (1) capacity: near-seamless out-of-core execution on large data sets spanning DRAM and NVRAM; and (2) irregular, cache-unfriendly accesses, particularly indirect access through index arrays and other noncontiguous access patterns.

The DI-MMAP module is included in the Catalyst software environment and has influenced pre-exascale procurements. A DI-MMAP follow-on is a funded task in a DOE Office of Science Exascale OS/Runtime project. The DI-MMAP module is also used by the Livermore Metagenomics Analysis Toolkit, an open-source software package in use in the bioinformatics community [9]. Both DI-MMAP and Havoq-GT are available as open source. In addition, an external sponsor funded two new projects to conduct research on graph traversal and data rearrangement in active memory. Our active memory research is being investigated by FastForward2 vendors, and a patent is being pursued for the data rearrangement logic intellectual property.

References

- Van Essen, B., et al., "DI-MMAP—a scalable memory-map runtime for out-of-core data-intensive applications." Cluster Comput. 18, 15 (2013). LLNL-JRNL-612114. http://dx.doi.org/10.1007/s10586-013-0309-0

- Ames, S., et al., "Design and optimization of a metagenomics analysis workflow for NVRAM." IEEE Intl. Parallel Distributed Processing Symp. (IPDPS), Phoenix, AZ, May 19–23, 2014. LLNL-CONF-635882. http://dx.doi.org/10.1109/IPDPSW.2014.200

- Lloyd, S., and M. Gokhale, "In-memory data rearrangement for irregular, data-intensive computing." Computer 48(8), 18 (2015). LLNL-JRNL-666692.

- Gokhale, M., G. S. Lloyd, and C. W. Hajas, "Near memory data structure rearrangement." MEMSYS15 Intl. Symp. Memory Systems, Washington, D.C., Oct. 5–8, 2015. LLNL-PROC-675466. http://dx.doi.org/10.1145/2818950.2818986

- Pearce, R., M. Gokhale, and N. M. Amato, "Faster parallel traversal of scale free graphs at extreme scale with vertex delegates." Supercomputing 2014, New Orleans, LA, Nov. 16–21, 2014. LLNL-CONF-658291. http://dx.doi.org/10.1109/SC.2014.50

- Van Essen, B., et al., "Developing a framework for analyzing data movement within a memory management runtime for data-intensive applications." 6th Ann. Non-Volatile Memories Workshop, San Diego, CA, Mar. 1–3, 2015.

- De, A., et al., "Minerva: accelerating data analysis in next-generation SSDs." IEEE 21st Intl. Symp. Field-Programmable Custom Computing Machines (FCCM 2013), Seattle, WA, Apr. 28–30, 2013. http://dx.doi.org/10.1109/FCCM.2013.46

- Jiang, M., et al., "Multi-threaded streamline tracing on data-intensive architectures." IEEE Symp. Large Data Analysis and Visualization, Paris, France, Nov. 9–10, 2014. LLNL-CONF-645076. http://dx.doi.org/10.1109/LDAV.2014.7013199

- Ames, S. K., et al., "Scalable metagenomic taxonomy classification using a reference genome database." Bioinformatics 29(18), 2253 (2013). https://academic.oup.com/bioinformatics/article/29/18/2253/240111

Publications and Presentations

- Ames, S., et al., "Design and optimization of a metagenomics analysis workflow for NVRAM." IEEE Intl. Parallel Distributed Processing Symp. (IPDPS), Phoenix, AZ, May 19–23, 2014. LLNL-CONF-635882. http://dx.doi.org/10.1109/IPDPSW.2014.200

- Gokhale, M. B., and G. S. Lloyd, "Memory integrated computing." (2014). LLNL-POST-654365.

- Gokhale, M., G. S. Lloyd, and C. W. Hajas, "Near memory data structure rearrangement." MEMSYS15 Intl. Symp. Memory Systems, Washington, D.C., Oct. 5–8, 2015. LLNL-PROC-675466. http://dx.doi.org/10.1145/2818950.2818986

- Jiang, M., et al., "Multi-threaded streamline tracing on data-intensive architectures." IEEE Symp. Large Data Analysis and Visualization, Paris, France, Nov. 9–10, 2014. LLNL-CONF-645076. http://dx.doi.org/10.1109/LDAV.2014.7013199

- Lloyd, S., and M. Gokhale, "In-memory data rearrangement for irregular, data-intensive computing." Computer 48(8), 18 (2015). LLNL-JRNL-666692.

- Pearce, R., M. Gokhale, and N. M. Amato, "Faster parallel traversal of scale free graphs at extreme scale with vertex delegates." Supercomputing 2014, New Orleans, LA, Nov. 16–21, 2014. LLNL-CONF-658291. http://dx.doi.org/10.1109/SC.2014.50

- Van Essen, B., and M. Gokhale, "Real-time whole application simulation of heterogeneous, multi-level memory systems." Workshop on Modeling and Simulation of Systems and Applications, Seattle, WA, Aug. 13–14, 2014. LLNL-ABS-655897.

- Van Essen, B., et al., "DI-MMAP—a scalable memory-map runtime for out-of-core data-intensive applications." Cluster Comput. 18, 15 (2013). LLNL-JRNL-612114. http://dx.doi.org/10.1007/s10586-013-0309-0