Ming Jiang | 16-ERD-036

Overview

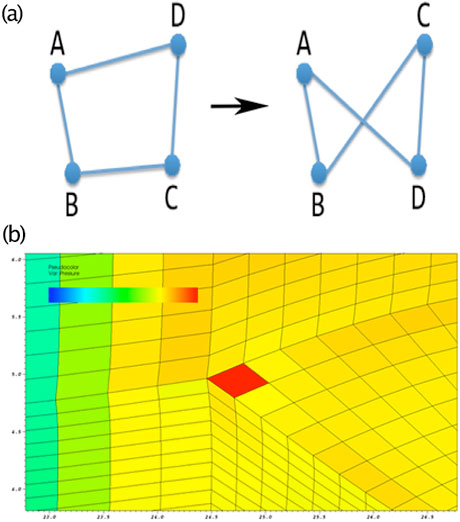

As high-performance computing (HPC) becomes ever more powerful, scientists can perform highly complex simulations that couple multiple physical processes at different scales. The price of this capability is that the simulations become much more difficult to use. Simulation workflows are highly complex and often require a manual tuning process that is a significant burden for users, and simulation failures are one of the main causes of that difficulty. A common example is found in hydrodynamics simulations that use the Arbitrary Lagrangian-Eulerian (ALE) method (Benson 1992; Donea et al. 2004; Zukas 2004), which requires users to adjust a set of parameters that control the mesh motion during the simulation. Finding the combination of parameter adjustments that avoids failures and lets the simulation run to completion is often a trial-and-error process. There is an urgent need to partially automate this parameter-adjustment process in simulation workflows in order to reduce user burden and improve productivity. To address this need, we developed novel predictive analytics for simulations and an in situ infrastructure for integrating them into simulation workflows. Our goal was to predict simulation failures and proactively avoid them as much as possible.

Our research objectives for developing predictive analytics for simulations were (1) to derive statistical models of the simulation-state conditions that lead to failures and of how to adjust parameters to avoid those conditions and (2) to develop novel machine-learning algorithms (supervised and online) specifically for simulation workflows. Our research objectives for the in situ integration infrastructure were (1) to design a first-of-a-kind in situ infrastructure that integrates traditional HPC simulations with big data analytics (Reed and Dongarra 2015) and (2) to develop new visual analytics to explore a high-dimensional learning-feature space and explain machine-learning model performance. More broadly, HPC simulations and big data analytics are on a converging path. As the scientific community prepares for next-generation extreme-scale computing, big data analytics are becoming an essential part of the scientific process for insights and discoveries (Reed and Dongarra 2015). Effectively integrating big data analytics into HPC simulations remains a daunting challenge (da Silva et al. 2017) that requires new workflow technologies to reduce user burden and improve efficiency.

For the predictive analytics, we developed a supervised learning framework (Jiang et al. 2016) that predicts simulation failures by using the simulation state as learning features. The framework uses the Random Forest learning algorithm and can be integrated into production-quality ALE codes. We presented a novel learning representation for ALE simulations, along with procedures for generating labeled data and training a predictive model. To integrate the predictive analytics, we developed an in situ infrastructure that connects the inference capabilities to the running simulation so that parameters can be adjusted dynamically. This infrastructure is coupled with Pegasus, a workflow management system (da Silva et al. 2017) that includes components for data collection and a user interface.
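The report does not publish the framework's code or its learning representation, so the following is only a minimal, hypothetical sketch of the two ideas in the paragraph above: training a Random Forest on labeled simulation-state data, then querying it in situ to adjust a parameter. It is written in plain Python (no scikit-learn) so that it runs anywhere. The per-zone features (`aspect_ratio` plus two noise columns), the toy failure rule (aspect ratio above 4), the relaxation weight `relax`, and every function name here are assumptions for illustration, not the project's actual method.

```python
import math
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a non-empty list of 0/1 labels."""
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)


def majority(labels):
    """Most common label (ties broken by first occurrence)."""
    return Counter(labels).most_common(1)[0][0]


def build_tree(X, y, depth, max_depth, n_feats, rng):
    """Grow a depth-limited tree; each split considers a random feature subset."""
    if depth == max_depth or len(set(y)) == 1:
        return majority(y)  # leaf: stores a 0/1 label
    best = None  # (weighted Gini impurity, feature index, threshold)
    for f in rng.sample(range(len(X[0])), n_feats):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if left and right:
                score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
                if best is None or score < best[0]:
                    best = (score, f, t)
    if best is None:  # no valid split on the sampled features
        return majority(y)
    _, f, t = best

    def grow(idx):
        return build_tree([X[i] for i in idx], [y[i] for i in idx],
                          depth + 1, max_depth, n_feats, rng)

    left_idx = [i for i, row in enumerate(X) if row[f] <= t]
    right_idx = [i for i, row in enumerate(X) if row[f] > t]
    return (f, t, grow(left_idx), grow(right_idx))


def predict_tree(node, row):
    """Walk internal (feature, threshold, left, right) tuples down to a leaf label."""
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if row[f] <= t else right
    return node


class RandomForest:
    """Bootstrap-aggregated trees with per-split random feature subsets."""

    def __init__(self, n_trees=15, max_depth=3, max_features=None, seed=0):
        self.n_trees = n_trees
        self.max_depth = max_depth
        self.max_features = max_features  # default: sqrt(number of features)
        self.rng = random.Random(seed)
        self.trees = []

    def fit(self, X, y):
        n_feats = self.max_features or max(1, int(math.sqrt(len(X[0]))))
        for _ in range(self.n_trees):
            idx = [self.rng.randrange(len(X)) for _ in X]  # bootstrap sample
            self.trees.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                         0, self.max_depth, n_feats, self.rng))
        return self

    def predict_proba(self, row):
        """Fraction of trees voting 'failure' (label 1)."""
        return sum(predict_tree(tree, row) for tree in self.trees) / len(self.trees)


def make_synthetic_zones(n, rng):
    """Hypothetical toy data: rows are [aspect_ratio, noise_a, noise_b];
    a zone is labeled as failing (1) if its aspect ratio exceeds 4."""
    X = [[rng.uniform(1.0, 8.0), rng.random(), rng.random()] for _ in range(n)]
    y = [1 if row[0] > 4.0 else 0 for row in X]
    return X, y


def in_situ_step(model, zones, relax, threshold=0.5, boost=0.1):
    """Hypothetical in situ hook: raise a mesh-relaxation weight when any zone
    is predicted likely to fail; return the (possibly updated) weight and risk."""
    risk = max(model.predict_proba(z) for z in zones)
    if risk > threshold:
        relax = min(1.0, relax + boost)
    return relax, risk
```

For example, after fitting on `make_synthetic_zones(150, random.Random(1))`, calling `in_situ_step(model, zones, relax=0.2)` raises the weight to 0.3 when some zone's predicted failure probability exceeds 0.5 and leaves it at 0.2 otherwise. A production setting would more likely use a tuned library implementation and the framework's own features; the pure-Python forest is chosen here only to keep the sketch self-contained.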

Recently (Do et al. 2018), we developed a node-local approach that leverages non-volatile random-access memory to enable data-analytics workflows. We evaluated this approach by comparing it to a setup on the Lustre file system, in which data is stored and accessed globally. In this approach, the data analysis starts only after the simulation has completed (post-processing). To reduce the cost of storing large datasets collected over many time steps, the analytics are performed on partial data generated by a few iterations. As future work, we plan to explore the node-local technique for analyzing simulation data in situ, using event-based triggers to launch workflows.
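As a rough, hypothetical illustration of the post-processing pattern described above, the following Python sketch has a toy simulation write one snapshot per iteration to a node-local directory, keep only the most recent few iterations to bound storage, and run the analysis after the run completes. A temporary directory stands in for the NVRAM-backed node-local storage, and the JSON snapshot format, the `energy` field, the sliding-window retention policy, and the function names are all assumptions; the report does not say which iterations were retained.

```python
import json
import os
import tempfile


def run_simulation(n_steps, out_dir, keep_last=3):
    """Toy 'simulation' (hypothetical): writes one JSON snapshot per step to
    node-local storage, keeping only the newest `keep_last` snapshots."""
    for step in range(n_steps):
        state = {"step": step, "energy": 100.0 - step}  # hypothetical state
        with open(os.path.join(out_dir, f"step_{step:05d}.json"), "w") as fh:
            json.dump(state, fh)
        old = step - keep_last
        if old >= 0:  # evict the oldest snapshot to bound storage use
            os.remove(os.path.join(out_dir, f"step_{old:05d}.json"))


def post_process(out_dir):
    """Runs after the simulation completes: analyze the retained snapshots."""
    snaps = []
    for name in sorted(os.listdir(out_dir)):
        with open(os.path.join(out_dir, name)) as fh:
            snaps.append(json.load(fh))
    mean_energy = sum(s["energy"] for s in snaps) / len(snaps)
    return [s["step"] for s in snaps], mean_energy
```

For example, running 10 steps with `keep_last=3` inside `tempfile.TemporaryDirectory()` leaves only steps 7, 8, and 9 on disk, and `post_process` reports a mean energy of 92.0. Swapping the temporary directory for a path on a globally shared file system such as Lustre would reproduce the comparison setup without changing the code.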

Impact on Mission

Because ALE-based simulations are vital components of many applications developed at Lawrence Livermore National Laboratory, this research has broad application across the NNSA and Laboratory mission space, particularly stockpile stewardship. This research also ties directly into the next-generation code-development effort to improve general simulation workflow management at the Laboratory. Our research in predictive analytics and in situ simulation infrastructure enhances the Laboratory's core competencies in high-performance computing, simulation, and data science.

Conclusion

In this project, we addressed the urgent need to automate some aspects of the simulation-workflow development process. Our goal was to predict simulation failures ahead of time and proactively avoid them as much as possible. We developed novel predictive analytics based on a supervised-learning framework that derives classifiers able to predict simulation failures from the simulation state. We also developed an in situ infrastructure for integration into simulations; using the Pegasus workflow management system, this infrastructure can run the predictive analytics alongside the simulation.

References

Benson, D. 1992. "Computational Methods in Lagrangian and Eulerian Hydrocodes." Computer Methods in Applied Mechanics and Engineering 99(2): 235–394. doi: 10.1016/0045-7825(92)90042-I.

Do, T., et al. 2018. "Enabling Data Analytics Workflows using Node-Local Storage." International Conference for High Performance Computing, Networking, Storage, and Analysis (SC18), Dallas, TX, November 2018.

Donea, J., et al. 2004. "Arbitrary Lagrangian-Eulerian Methods." In Encyclopedia of Computational Mechanics, Volume 1: Fundamentals, edited by Erwin Stein, R. de Borst, and Thomas Hughes. John Wiley & Sons.

Jiang, M., et al. 2016. "A Supervised Learning Framework for Arbitrary Lagrangian-Eulerian Simulations." IEEE International Conference on Machine Learning and Applications, Anaheim, CA, December 2016. doi: 10.1109/ICMLA.2016.0176.

Reed, D. A. and J. Dongarra. 2015. "Exascale Computing and Big Data." Communications of the ACM 58(7): 56–68. doi: 10.1145/2699414.

da Silva, R., et al. 2017. "A Characterization of Workflow Management Systems for Extreme-Scale Applications." Future Generation Computer Systems 75: 228–238. doi: 10.1016/j.future.2017.02.026. LLNL-JRNL-706700.

Zukas, J., ed. 2004. "Introduction to Hydrocodes." In Studies in Applied Mechanics 49: 1–313. New York, Elsevier.

Publications and Presentations

Ayzman, Y. 2016. "MEAD: Multidimensional Exploration of ALE Data." LLNL Summer Student Poster Session, Livermore, CA, August 2016. LLNL-POST-699122.

Burrows, B. 2017. "An Ensemble Based Approach to Quantifying the Numerical Accuracy of Arbitrary Lagrangian-Eulerian Simulations." LLNL Summer Student Poster Session, Livermore, CA, August 2017. LLNL-POST-736057.

Jiang, M. 2016. "A Supervised Learning Framework for Arbitrary Lagrangian-Eulerian Simulations." 15th IEEE International Conference on Machine Learning and Applications, Anaheim, CA, December 2016. LLNL-POST-714918.

———. 2017. "Machine Learning for Semi-Automating Mesh Management in ALE Simulations." LLNL Computation External Review Committee, 2017. LLNL-PRES-730390.

Jiang, M., et al. 2016. "A Supervised Learning Framework for Arbitrary Lagrangian-Eulerian Simulations." IEEE International Conference on Machine Learning and Applications, Anaheim, CA, December 2016. LLNL-CONF-698953 and LLNL-POST-714918.

Riewski, E. 2018. "Iterative Learning for Node Relaxation in NIF Simulations." LLNL Summer Student Poster Session, Livermore, CA, August 2018. LLNL-POST-755909.

da Silva, R., et al. 2017. "A Characterization of Workflow Management Systems for Extreme-Scale Applications." Future Generation Computer Systems 75: 228–238. LLNL-JRNL-706700.

Stern, N. 2018. "Feature Reduction for Machine Learning of Mesh Relaxation in Kull Multi-Physics Code." LLNL Summer Student Poster Session, Livermore, CA, August 2018. LLNL-POST-755706.

Swischuk, R. 2016. "Predicting Simulation Failures using Classification Ensembles." LLNL Summer Student Poster Session, Livermore, CA, August 2016. LLNL-POST-699266.