Alan Kaplan (16-ERD-034)

Project Description

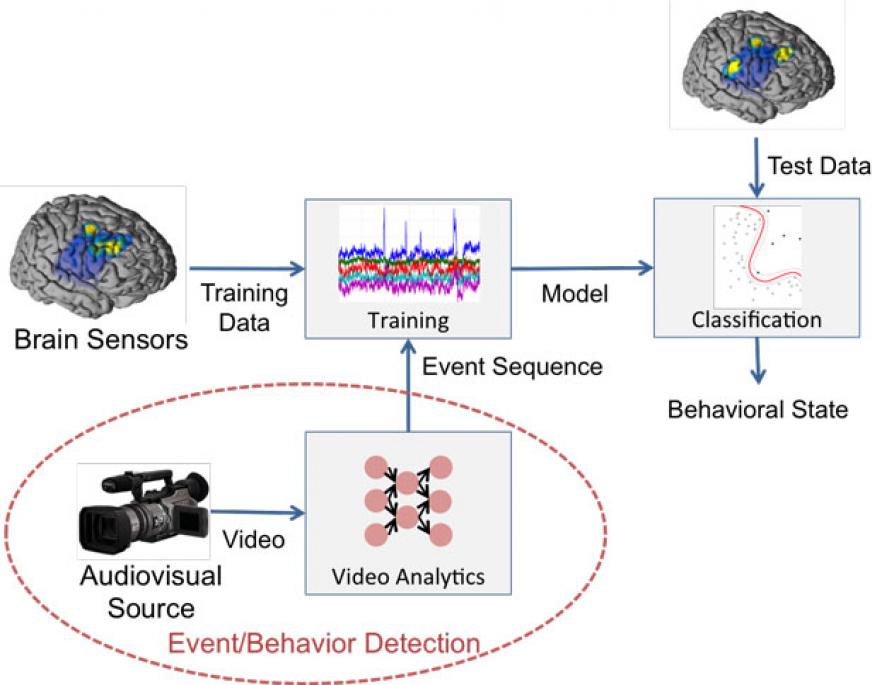

The President's BRAIN Initiative aims to revolutionize our knowledge of the human brain and to advance the treatment and prevention of brain disorders. Researchers involved with this initiative work with biomedical "big data" and require algorithmic expertise, statistical methods, and high-performance computing to fully exploit these heterogeneous data sets at scale. To help meet these requirements, we have partnered with neuroscientists from the University of California, San Francisco (UCSF) in a project that lies at the intersection of neuroscience, statistics, large-scale data analytics, and machine learning. We intend to develop novel analytic approaches for large-scale, multi-dimensional data acquired from patients monitored for clinical assessment. The data set comes from a large population of patients who have been surgically implanted with intracranial sensors that record electrical activity from the cerebral cortex and who are monitored 24 hours per day for weeks. The resulting data is best suited to characterizing aggregate brain function and phenomena related to longer-developing brain states. We will collect and work with this data set, which specifically targets the relationship between neurological signals and patient behavior, and use it to model relationships between brain activity and natural, uninstructed behavioral and emotional states. In addition, synchronized, high-definition video with audio will be collected to record unstructured (i.e., natural) patient behavior. By correlating neural signals with events captured on video, we will be able to relate brain function to observable behavior.

Using UCSF's one-of-a-kind data set, the combined expertise of its neuroscience investigators, and Livermore's statistical and machine-learning capabilities and world-class computational resources, we expect to develop novel approaches in neural signal analysis and multimodal modeling to determine the neural patterns underlying human activities observed in video. Expected results will contribute to the areas of brain-signal characterization, video analytics, and joint multimodal modeling. In brain-signal characterization, we will develop novel sparse-signal representations to isolate brain function. In video analytics, we will leverage state-of-the-art deep-learning algorithms to extract relevant features and isolate time segments with potential signals of interest. Finally, we will model the data at both short and long timescales to build hierarchical, temporal representations of underlying processes that jointly describe brain signals and human behavior. In addition, given that the data set for each patient is on the order of terabytes in size, the algorithms we develop will extend the Laboratory's distributed computing libraries, augment existing software infrastructure, and build additional deep-learning capabilities for graphics-processor-based computational systems. Results from this project have the potential to yield new insights about basic and clinically relevant functions of the human brain, as well as about more practical aspects (e.g., information content) of these relatively new data sources.

Mission Relevance

Our research objective of developing analytic approaches for large-scale, multi-dimensional, biomedical big data directly addresses the Laboratory's core competencies in high-performance computing, simulation, and data science and in bioscience and bioengineering.

FY16 Accomplishments and Results

In FY16 we (1) developed models for both neurological and video data to be used in classifying human behavior; (2) developed and tested methods for distinguishing motor movements from human brain local-field-potential data; (3) implemented a method for characterizing the effects of emotional affect on brain states; (4) implemented, for modeling behavior in video, a facial-expression detection algorithm that achieves near state-of-the-art performance on a benchmark data set; (5) began developing a method to detect fine-scale human subject motion in video; and (6) secured an agreement with UCSF and began receiving data on electrical activity from the cerebral cortex, including hand annotations of patient behavior.

Publications and Presentations

- Kaplan, A. D., et al., Data driven neurological pattern discovery. (2016). LLNL-PRES-695740.

- Kaplan, A. D., M. B. Mayhew, and A. Sales, Dynamic models for electroencephalography. (2016). LLNL-PRES-692179.