Jayaraman Jayaraman Thiagarajan (17-ERD-009)

Executive Summary

We are exploring advances in sampling design for computational modeling that produce the maximal amount of information about unknown processes with the minimal number of samples. Applications include uncertainty quantification for stockpile stewardship, improved weather and climate ensembles, optimization of the energy grid, and improved sensitivity analysis in materials science and high-energy physics.

Project Description

Computational modeling of complex dynamical systems is prevalent in applications ranging from high-energy-density physics to climate science. Such simulations are routinely used to interpret experimental data, explore the impacts of different models, and inform design decisions. Because these systems are generally too complex to understand fully, they are treated as black boxes that take a set of input parameters and produce various quantities of interest as outputs. Choosing these parameters, and understanding how variable and sensitive the solutions are to changing inputs, is rapidly becoming one of the primary challenges in many application areas. Common examples are uncertainty quantification, inverse problems, and various optimization approaches. In such applications, the first step is to create an initial, uniform random sampling of the parameter space to develop a baseline of knowledge. The simulation is then evaluated at these sample points, and this baseline is used to initialize the rest of the workflow. This workflow is pervasive, and its applications are wide-ranging. In its most generic form, the goal of sampling is to produce the maximal amount of information about some unknown process with the minimal number of samples. However, although it influences the performance of the entire workflow, the quality of the initial sample design is rarely analyzed or optimized; in practice, simple solutions that are known to produce inferior results are used instead. We intend to develop a new theory and algorithms that create highly optimized sample designs in high dimensions, fundamentally advancing sample design by generalizing the relevant sampling theory to arbitrary dimensions, developing scalable synthesis algorithms, and designing constrained sampling techniques, for example, to create multi-resolution, adaptive, or nested samples.
Optimized sample design is a crucial first step in many mission-critical workflows, and preliminary results have shown an order-of-magnitude improvement over existing approaches. We will develop and deploy simple-to-use tools that exploit recent theoretical advances to create high-dimensional sample designs. In particular, we will combine the direct link between the spatial and spectral characteristics of a point sample with new optimization approaches to create new types of sample designs.
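As a minimal sketch of the spectral side of this link, assuming the samples live on the periodic unit hypercube [0, 1)^d, the following code estimates the power spectrum (periodogram) of a point set at an integer frequency vector k as P(k) = |Σ_j exp(−2πi k·x_j)|² / N. In the spectral sampling literature, P(k) is commonly related to the spatial pair correlation function g(r) of a stationary point process with density ρ by P(k) = 1 + ρ ∫ (g(r) − 1) exp(−2πi k·r) dr, which is one way to state the spatial-spectral link. The function name `periodogram` is a hypothetical, illustrative choice and is not part of the project's tools.

```python
import cmath
import math
import random


def periodogram(points, k):
    """Estimate P(k) = |sum_j exp(-2*pi*i * k.x_j)|^2 / N for one frequency.

    Points are assumed to lie on the periodic unit hypercube [0, 1)^d, so k
    should be an integer frequency vector with the same dimension d.
    """
    total = 0j
    for x in points:
        phase = -2.0 * math.pi * sum(ki * xi for ki, xi in zip(k, x))
        total += cmath.exp(1j * phase)
    return abs(total) ** 2 / len(points)


if __name__ == "__main__":
    rng = random.Random(0)
    white = [[rng.random(), rng.random()] for _ in range(500)]
    # For independent uniform (white noise) points, P(k) fluctuates around 1
    # at every nonzero k; a blue noise design would push P(k) toward 0 at low
    # nonzero frequencies while staying near 1 at high frequencies.
    for k in ([1, 0], [3, 4], [20, 0]):
        print(k, round(periodogram(white, k), 3))
```

Averaging P(k) over shells of equal |k| gives the radially averaged power spectrum that is typically plotted when comparing such designs.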

Spectral sampling, in which sample designs are optimized by simultaneously controlling their spatial and spectral properties, has long been the preferred solution for low-dimensional image reconstruction problems, especially in computer graphics. However, no theoretical generalization or scalable algorithms exist for applying spectral sampling beyond two-dimensional images. We expect to fundamentally advance sample design methodologies by developing a new theoretical framework for analyzing spectral sampling distributions and for constructing families of spectral sampling patterns tailored to different applications, scalable synthesis algorithms for sample creation, and constrained variants of sample optimization that create multi-resolution, adaptive, and nested samples. We will develop a set of tools to create optimized, high-dimensional sampling patterns that will lead to new sample designs, new sample synthesis algorithms, and new insight into the characteristics of sampling approaches in general. A successful project will result in simple-to-use yet powerful tools to create these sample designs for a wide range of applications. We will install these tools on Livermore systems and integrate them into existing tool chains such as PSUADE (Problem Solving Environment for Uncertainty Analysis and Design Exploration, a software toolkit designed to facilitate uncertainty quantification tasks) and the UQ pipeline (a workflow system used to orchestrate large uncertainty quantification studies). This will allow a wide variety of Laboratory applications to directly use the most advanced techniques, which we expect to produce results of the same quality with an order of magnitude fewer samples or, alternatively, much more insight for the same effort. This research has the potential to significantly reduce the time to insight in many projects and to save hundreds of thousands of computing hours.
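On the spatial side, the pair correlation function g(r) measures how often pairs of samples occur at distance r relative to a uniform random point set. The sketch below assumes a two-dimensional design on the periodic unit square and uses a simple histogram estimator, an illustrative choice that is not necessarily the estimator used in this project. Each distance-bin count is divided by the count expected for uniform random points, (N(N − 1)/2) · 2πr·dr, so g(r) ≈ 1 for white noise and g(r) ≈ 0 at distances a design avoids. The function names are hypothetical.

```python
import math
import random


def torus_distance(a, b):
    """Euclidean distance on the periodic unit square, wrapping each axis."""
    total = 0.0
    for ai, bi in zip(a, b):
        d = abs(ai - bi)
        d = min(d, 1.0 - d)
        total += d * d
    return math.sqrt(total)


def pair_correlation_2d(points, r_max=0.25, bins=25):
    """Histogram estimate of g(r) for points on the 2-D unit torus.

    Each bin count is divided by the count expected for uniform random points,
    (N(N-1)/2) * 2*pi*r_mid*dr, which is exact for annuli when r_max <= 0.5.
    """
    if r_max > 0.5:
        raise ValueError("r_max must be <= 0.5 on the unit torus")
    n = len(points)
    dr = r_max / bins
    counts = [0] * bins
    for i in range(n):
        for j in range(i + 1, n):
            r = torus_distance(points[i], points[j])
            if r < r_max:
                # Clamp guards against rounding r / dr up to exactly `bins`.
                counts[min(int(r / dr), bins - 1)] += 1
    pairs = n * (n - 1) / 2
    g = []
    for b, c in enumerate(counts):
        r_mid = (b + 0.5) * dr
        g.append(c / (pairs * 2.0 * math.pi * r_mid * dr))
    return g


if __name__ == "__main__":
    rng = random.Random(3)
    white = [[rng.random(), rng.random()] for _ in range(300)]
    r_max, bins = 0.25, 25
    # Uniform random points give g(r) near 1 at all r (noisier at small r,
    # where few pairs fall in each bin); a Poisson disk design with radius
    # r_min would give g(r) = 0 for r < r_min.
    for b, value in enumerate(pair_correlation_2d(white, r_max, bins)):
        print(f"r~{(b + 0.5) * r_max / bins:.3f}: g={value:.2f}")
```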
Whether the application is uncertainty quantification for stockpile stewardship or weapons design, better weather or climate ensembles, optimization of the energy grid, or sensitivity analysis and inverse modeling in materials science and high-energy physics, the advanced sampling strategies we intend to develop promise high-quality results using significantly fewer resources.

Mission Relevance

By developing new theories and algorithms useful for a broad range of predictive sciences, our research is directly aligned with the high-performance computing, simulation, and data science core competency. Because of its fundamental nature, this research has the potential to impact many Livermore mission research challenge areas, and it is relevant to DOE goals in science, energy, and nuclear security.

FY17 Accomplishments and Results

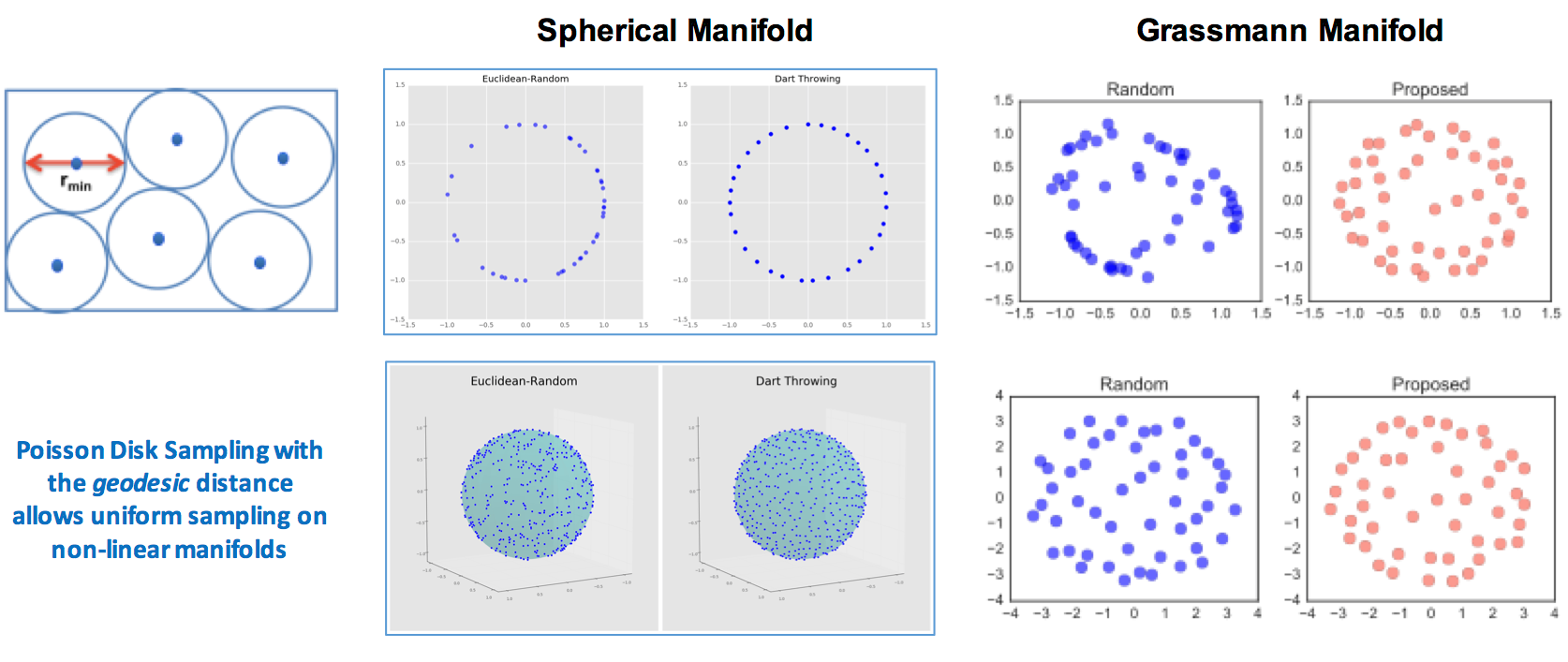

In FY17 we (1) designed two sampling distributions: Poisson disk sampling, for applications that need improved spatial properties (e.g., surrogate modeling), and Stair blue noise sampling (sampling patterns with a blue noise power spectrum, which can prevent discernible artifacts by replacing them with incoherent noise), for applications that need improved spectral properties (e.g., image reconstruction and Monte Carlo integration); (2) developed a new sample synthesis algorithm, with a C++ implementation, that produces high-quality spectral samples in moderate dimensions and was tested in two to ten dimensions; and (3) evaluated the proposed samples in surrogate modeling with different simulation codes and in image reconstruction.
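The synthesis algorithm itself is not described in this summary. To illustrate only the Poisson disk constraint (no two samples closer than a radius r), the sketch below swaps in classic dart throwing in the unit hypercube [0, 1)^d. This is a well-known, brute-force baseline that does not scale to large designs and is not the project's method. The sketch compares the smallest pairwise spacing dart throwing achieves against a uniform random design of the same size. All function names and parameter values are illustrative assumptions.

```python
import math
import random


def dart_throwing(r, dim, max_attempts, seed=0):
    """Classic dart throwing in the unit hypercube [0, 1)^dim.

    A uniform random candidate is accepted only if it lies at least r from
    every previously accepted sample. The check is brute force, so the cost
    grows as O(max_attempts * number_of_samples).
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(max_attempts):
        candidate = [rng.random() for _ in range(dim)]
        if all(math.dist(candidate, s) >= r for s in samples):
            samples.append(candidate)
    return samples


def uniform_random(n, dim, seed=0):
    """n independent uniform random points in [0, 1)^dim (the usual baseline)."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(dim)] for _ in range(n)]


def min_spacing(points):
    """Smallest Euclidean distance between any two points (needs >= 2 points)."""
    return min(
        math.dist(points[i], points[j])
        for i in range(len(points))
        for j in range(i + 1, len(points))
    )


if __name__ == "__main__":
    for dim, r in ((2, 0.1), (5, 0.5), (10, 1.0)):
        disk = dart_throwing(r, dim, max_attempts=2000, seed=dim)
        rand = uniform_random(len(disk), dim, seed=dim)
        # By construction the dart-throwing design never has spacing below r;
        # a random design of equal size usually falls short of it.
        print(f"dim={dim}: n={len(disk)}, "
              f"poisson disk min spacing={min_spacing(disk):.3f}, "
              f"uniform random min spacing={min_spacing(rand):.3f}")
```

Dart throwing is used here only because it makes the Poisson disk definition explicit; its acceptance rate collapses as the domain fills, which is one reason scalable synthesis algorithms are needed in higher dimensions.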

Publications and Presentations

Anirudh, R., et al. 2017. "Poisson Disk Sampling on the Grassmannian: Applications in Subspace Optimization." IEEE CVPR. July 21–26, 2017. Honolulu, HI. LLNL-POST-735098.

Kailkhura, B., et al. 2016. "Theoretical Guarantees for Poisson-Disk Sampling Using Pair Correlation Function." IEEE ICASSP. March 21–25, 2016. Shanghai, China. LLNL-CONF-682297.

——— 2017. "Stair Blue Noise Sampling." ACM T. Graphic. 35. <http://dx.doi.org/10.1145/2980179.2982435> LLNL-JRNL-703039.

Thiagarajan, J., B. Kailkhura, and P.-T. Bremer. 2016. "Spectral Sampling in High Dimensional Parameter Spaces." SIAM UQ. April 5–8, 2016. Lausanne, Switzerland. LLNL-PRES-729358.