Tomas Oppelstrup (14-ERD-094)

Abstract

Kinetic Monte-Carlo simulation is a widely used tool within a wide range of research disciplines. In particular, it has been used to study microstructure evolution within the materials science and physics communities. These simulations are almost exclusively carried out in serial, and so far the only ways to run them in parallel have required a compromise between accuracy and parallel efficiency. Our goal was to develop the first massively parallel kinetic Monte-Carlo code that is not only efficient but also exact in the sense that parallel execution precisely reproduces the corresponding sequential simulation. To demonstrate the capability of the new code, we applied it to two benchmark applications: the evolution and growth of crystal grains in a solid metal, and thin-film growth from vapor or deposition. We have implemented a highly scalable kinetic Monte-Carlo code, SPOCK (Scalable Parallel Optimistic Crystal Kinetics). It can scale well up to 400,000 cores and has performed record-breaking simulations in crystal grain growth in two and three dimensions, with simulation sizes up to 200-billion lattice sites, which is two to three orders of magnitude larger than those found in the literature. These simulations also constitute the largest known discrete-event simulations, with over 300-trillion processed events. These simulations allowed grain growth over two orders magnitude of diameter in three dimensions and three orders of magnitude in two dimensions, and can confirm that the grain size distribution follows the assumed shape and scales with time in the predicted manner. In addition, we have also carried out a few other types of crystal growth simulations at smaller scales, and have support to adapt the code to handle solidification problems. We are pursuing support to develop the SPOCK into a more capable multiphysics code including long-range fields to handle, for example, electrostatic and stress effects and address problems within electrophoretic deposition and batteries.

Background and Research Objectives

Kinetic Monte Carlo is a simulation method where material evolution is simulated through a collection of stochastic events, such as an atom hopping to a neighboring lattice site. It has been used to successfully simulate crystal and thin-film growth, dopant migration in semiconductors, and microstructure evolution because of radiation damage. In all these cases, relevant timescales (such as those set by realistic deposition rates or nuclear reactor life times) can be reached. The main problem with kinetic Monte Carlo is that the simulation progresses by executing a sequence of interdependent discrete stochastic events, which makes it hard to parallelize the code. For this reason, accurate kinetic Monte-Carlo simulations are at present excluded from using current supercomputers, and cannot reach relevant mesoscopic length-scales.

The main goal of this project was to create a parallel and exact kinetic Monte-Carlo simulator (SPOCK),1 exploiting the fact that kinetic Monte Carlo is a discrete event process and using the Time Warp parallelization paradigm developed for discrete-event simulations. In particular, we would build the new simulator on the ROSS parallel discrete-event simulation framework (a massively parallel discrete-event simulation tool for the modeling of very-large-scale systems).2 The major benefit is that with a parallel code, kinetic Monte-Carlo simulations can be scaled up to the size that fits in memory of the biggest supercomputer, as opposed to what fits in memory of a single node. Of course, an efficient parallel code also allows simulations to complete faster than a serial code. To demonstrate the capability of the new parallel kinetic Monte-Carlo simulator, we identified two test problems: crystal grain growth, and film growth.

Scientific Approach and Accomplishments

A fundamental objective of the project was to construct a parallel kinetic Monte-Carlo simulation code, where running in parallel does not compromise the quality or correctness of the simulation, and which seems to have never been performed before. Our resulting code, SPOCK (Scalable Parallel Optimistic Crystal Kinetics), can run efficiently on 400-thousand processors (Figure 1), and we have simulated problems involving hundreds of billions of particles on the LLNL supercomputer Vulcan.1 Extrapolating from these simulations, we can simulate over one-trillion particles on Sequoia. This demonstrates that the code is scalable to today's largest supercomputers. In terms of size, we have performed the largest grain-growth simulations by two orders of magnitude in both two- and three dimensions. These simulations also constitute the biggest discrete-event simulations to date.

The fundamental idea to expose parallelism in the formally sequential kinetic Monte-Carlo method was to employ the Time Warp paradigm. Time Warp is an optimistic algorithm, in which each processor speculatively executes the events that it is aware of. In the case of a causal conflict, such as a message informing about a dependency on a previously unknown past event generated by another processor, the speculatively executed events are undone back to the time of the conflict. Then forward simulation is resumed, incorporating the received dependencies. Once an event-based model has been decomposed into logical processes (e.g., spatial domains) communicating with each other through messages, the Time Warp mechanism will find and exploit parallelism embedded in the global sequence of events. The Time Warp algorithm can be proven to exactly reproduce a sequential execution of the simulation, and has theoretical efficiency benefits over conservative parallelization mechanisms, despite the complicated bookkeeping and potential undoing and redoing of computations.

We decided to build SPOCK on the ROSS parallel discrete-event simulation framework,2 a public domain code that implements Time Warp and has shown great scalability on benchmark problems.3 During the course of the project, it became apparent that the ROSS discrete-event simulator framework was lacking some necessary features needed for the kinetic Monte Carlo. In particular, there was no mechanism for retraction of scheduled events, and there was no support for writing regular restart files of large simulations. Both of these features are crucial for our large-scale kinetic Monte-Carlo simulations. In a kinetic Monte-Carlo simulation, a putative next event for an atom (for example), is sampled from a probability distribution depending on the neighboring atomic configuration. If that configuration changes between the issuing and the execution of that putative event, it is retracted, and a new putative next event is calculated from the new configuration. In fact, it is typical that the majority of all scheduled events are retracted (this is the main source of discrepancy between the green and red lines in Figure 1).

In large simulations, it is customary to save regular restart files so that the simulation trajectory can be analyzed afterwards, and so that the simulation can be continued in the event that the time limit of a single job is not long enough or the computation crashes because of a hardware failure. These restart files need to be written in parallel for them not to require an exorbitant amount of run time. Time Warp is a completely asynchronous simulation paradigm, and care must be taken to obtain a consistent snapshot of the simulation configuration that can serve as restart data.

We implemented necessary mechanisms to support event retraction and to recycle the memory occupied by retracted and thus no-longer-needed events. We also implemented a way to read and write simulation configuration data in parallel, to allow restarting a partially completed simulation, and to regularly output consistent configuration data. The missing features in ROSS and our implementation solution were shared with the ROSS team, and are likely to become available in future versions of ROSS, and thus benefit a wider discrete event-simulation community. In particular, the retraction mechanism and a parallel data-reduction strategy was shared with a Livermore team examining virus evolution.

The implementation of efficient and memory-lean Time Warp models requires the writing of reverse code—that is, code that can undo the effect of a given already-executed event. This is to allow rollback in the case of a causality error resulting from the speculative execution nature of the algorithm. Writing reverse code can be tricky, and even minute errors often lead to bugs that are difficult to debug. Another LDRD project at Livermore (14-ERD-062, "Planetary-Scale Agent Simulations") has been developing Backstroke, a tool for automatically generating reverse code. This is very valuable, because the reverse code will be error-free and save much implementation, debugging, and maintenance time in the development of parallel discrete-event models. A simplified version of the SPOCK parallel kinetic Monte-Carlo code has been used extensively as a benchmark application for Backstroke, and as a guide for Backstroke development to fulfill likely requirements of real-world discrete-event simulations.4,5 Our work was presented at the MMM2014 (Multiscale Materials Modeling) and PADS2016 (Principles of Advanced Discrete Simulation) conferences, as well as the XPDES (eXtreme scale Parallel Discrete Event Simulation) workshops in 2015 and 2016.

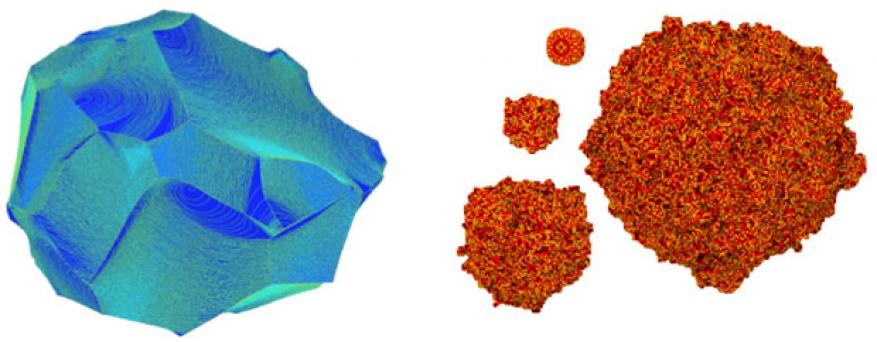

In our original research proposal, we identified crystal film growth as one of two test applications. This was delayed because of unanticipated work to ready ROSS for the large-scale simulations we intended to perform. Ultimately, therefore, we decided to focus on simulations of solidification, in particular, a crystal nucleus growing from the melt. We have run preliminary simulations of this type of solidification (Figure 2). While important heat-transfer physics required for quantitatively good results is missing, the preliminary simulations indicate the relative efficiency of the parallel kinetic Monte-Carlo approach. The SPOCK code is able to complete a solidification simulation with 33-million atoms in about 5 minutes or on a dozen compute nodes, while an equivalent molecular dynamics simulation takes several days on 2-thousand nodes. This extrapolates to being able to run much larger simulation sizes using kinetic Monte Carlo compared to molecular dynamics, and also at lower under-coolings where the molecular dynamics becomes comparatively even at a slower pace.

Impact on Mission

Development of mesoscale atomistic simulation will enable LLNL to meet important mission needs for stockpile stewardship and energy security with growth of thick beryllium films for fusion ignition capsules, grain boundary engineering for enhanced radiation tolerance, fabrication of unconventional material architectures by additive manufacturing, and highly ramified (branch-like) material interfaces for energy storage. In addition, understanding material behavior at the mesoscale through advanced simulations on high-performance supercomputers supports the Laboratory's core competencies in advanced materials and manufacturing, and high-performance computing, simulation, and data science, and was identified as a national priority in a recent Department of Energy Basic Energy Sciences advisory committee report, From Quanta to the Continuum: Opportunities for Mesoscale Science.

The direct mission impact of this project so far has been the identification of a potential new method for efficiently treating solidification problems enabled by the impressive scaling of the SPOCK code. Some preliminary simulations show that the efficiency of SPOCK allows a kinetic Monte-Carlo simulation of solidification of a particular 33-million-atom system to be carried out in about 5 minutes on a dozen compute nodes. In comparison, running an equivalent simulation with molecular dynamics takes several days using 2-thousand nodes. Furthermore, the kinetic Monte-Carlo simulation takes about the same time regardless of the degree of under-cooling, while the molecular dynamics simulation takes increasingly longer the closer to the interesting small under-cooling limit one gets. Presently, SPOCK lacks support for treating heat transfer, crucial to obtaining accurate solidification results. Our preliminary simulations have resulted in ongoing support from the Laboratory's Advanced Simulation and Computing, Physics and Engineering Models program to adapt the code to handle this type of problem, including implementing the necessary heat-transfer capability. Indirect impacts have been the dissemination of parallel discrete-event simulation knowledge and experiences to other Livermore projects: specifically a virus evolution simulator and the Backstroke reverse-code generator.

Conclusion

We have created a parallel kinetic Monte-Carlo code SPOCK, and demonstrated that it can run efficiently on the largest supercomputers and handle simulations of record-breaking size. The code can run on-lattice particle models as well as spin lattice problems, both with arbitrary short-range interactions—for example, several particle or lattice spacings. Next steps for research can be divided into two main categories. The first is developing more interaction potentials for simulating crystal evolution under different conditions and of different types of particles or molecules. The second is to integrate more advanced physics into the code, such as having a co-evolving heat field to properly handle solidification problems, and long-range electrostatic fields to enable simulation of, for example, electrophoretic deposition and batteries. We have obtained support from NNSA’s Advanced Simulation and Computing, Physics and Engineering Models program to adapt SPOCK and develop the necessary heat-transfer physics to enable high-fidelity solidification simulations, including implementing a temperature field co-evolving with particle configuration.

References

- Oppelstrup, T., et al., SPOCK: exact parallel kinetic Monte-Carlo on 15 million tasks. ACM SIGSIM Conf. Principles of Advanced Discrete Simulation (PADS), Banff, Canada, May 15–18, 2016.

- Carothers, C. D., K. S. Perumalla, and R. M. Fujimoto, “Efficient optimistic parallel simulations using reverse computation.” ACM TOMACS 9, 224 (1999).

- Barnes Jr., P. D., et al., Warp speed: executing time warp on 1,966,080 cores. ACM SIGSIM-PADS ’13, Montreal, Canada, May 19–23. 2013.

- Schordan, M., et al., Automatic generation of reversible C++ and its performance in a scalable kinetic Monte-Carlo application. ACM SIGSIM Conf. Principles of Advanced Discrete Simulation (PADS), Banff, Canada, May 15–18, 2016.

- Schordan, M., et al., “Reverse code generation for parallel discrete event simulation.” Lecture Notes in Computer Science, vol. 9138, p. 95. Springer International Publishing, New York (2015). LLNL-CONF-667678. https://doi.org//10.1007/978-3-319-20860-2_6

Publications and Presentations

- Oppelstrup, T., et al., SPOCK: Exact parallel kinetic Monte-Carlo on 1.5 million tasks. ACM SIGSIM Conf. Principles of Advanced Discrete Simulation (PADS), Banff, Canada, May 15–18, 2016. LLNL-CONF-681474

- Schordan, M., et al., Automatic generation of reversible C++ and its performance in a scalable kinetic Monte-Carlo application. ACM SIGSIM Conf. Principles of Advanced Discrete Simulation (PADS), Banff, Canada, May 15–18, 2016. LLNL-CONF-681318.

- Schordan, M., et al., “Reverse code generation for parallel discrete event simulation.” Lecture Notes in Computer Science, vol. 9138, p. 95. Springer International Publishing, New York (2015). LLNL-CONF-667678. https://doi.org//10.1007/978-3-319-20860-2_6