Maya Gokhale (16-ERD-005)

Project Description

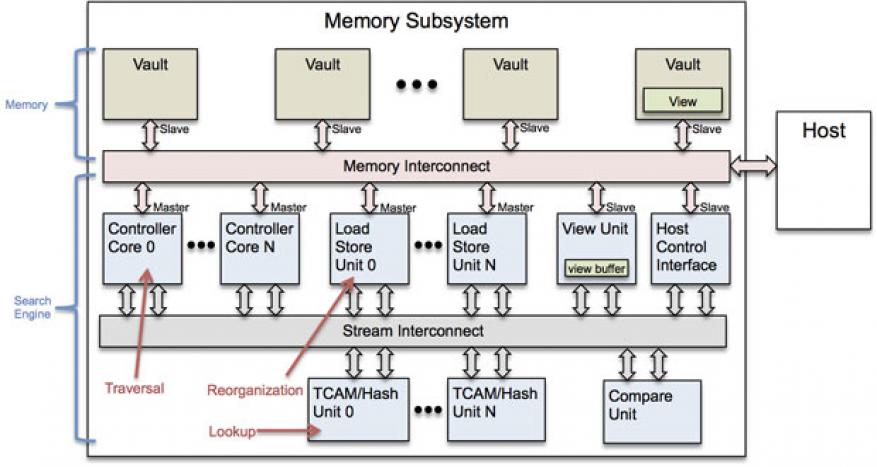

The memory wall—the growing disparity of speed between a central processing unit and the memory outside the unit's chip—is perhaps the most prominent obstacle to processing expanding data volumes. While improvements continue to increase the number of processing cores on a chip, improvements to memory bandwidth and latency at the equivalent scale are not forthcoming, resulting in teraflop compute cores that must sit idle waiting on data. New three-dimensional memories, such as the hybrid memory cube with its very-high-speed serial interface, offer greatly improved bandwidth over double-data-rate memory at the expense of latency. However, it is a continuing challenge to keep the central processing unit cores doing useful work because memory bandwidth improvements mainly benefit regular, streaming access patterns. We are addressing the issues of memory latency, bandwidth, and power by incorporating associative indexing functionality into the memory itself. Commonly employed in data science and simulations, particularly in scientific computing that incorporates sparse data structures, associative indexing encompasses search methods in which data is accessed by content, pattern, or key rather than directly by the memory address at which the data is stored. We will design, prototype, and evaluate a collection of associative-indexing hardware modules optimized for in-memory search. Incorporating logic with memory has become feasible with the emergence of three-dimensional memory stacks that put a memory controller in a base logic layer. In contrast to other methods that place central processing units in the memory, our novel approach offloads data access tasks to the memory, allowing the high-performance central processing unit to compute on the actual data stream. Achieving the ultimate goal of this work—accelerating data lookup and pattern search while reducing energy use—will result in very significant improvements in performance and energy for a wide range of data-centric workloads.

Data-intensive workloads often analyze large, complex, inter-related data structures and exhibit irregular, data-dependent memory-access patterns that can only use a fraction of available bandwidth. Such access patterns result in wasted memory bandwidth and power and limited performance. In our approach, an in-memory indexing accelerator will create, update, and search sparse data structures using index arrays, linked lists, trees, and hash tables (tables used to map keys to values). Our experimental evaluation will use a field-programmable gate-array emulator to quantitatively assess the performance and power usage of alternative hardware implementations. By exploring specialized acceleration functions for scalable compute-node performance improvement, we are seeking solutions to the memory-access bottleneck that is a major challenge in the design of future architectures for exascale computing. We expect to conduct (1) a performance and energy study of hash (associative arrays) and linked data structures in memory, (2) an evaluation of sparse matrix and graph operations for performance and energy, and (3) an evaluation of key–value stores, which is a computer program for storing, retrieving, and managing associative arrays. These records are stored and retrieved using a key that uniquely identifies the record, and is used to quickly find data within a database.

Mission Relevance

This project advances the Laboratory's core competency of high-performance computing, simulation, and data science through its efforts to accelerate data lookup and pattern search while reducing energy use. The architecture foundations established by this work will have an impact on future exascale architectures, smaller-scale clusters, and single-node configurations. This project will also support the Laboratory's mission focus on cyber security, space, and intelligence by meeting the massive data-processing needs common to the applications in those fields.

FY16 Accomplishments and Results

In FY16 we (1) designed a switch and network infrastructure to which indexing modules connect and over which they communicate; (2) analyzed alternative configurations of indexing modules on the switch infrastructure and created a prototype in the emulator; (3) completed software benchmarks to exercise the hardware modules and completed the design and software implementation of the library interface, including bulk operations; (4) implemented two key–value stores; (5) created an open-source limited version of the emulator with trace capture and delay units; (6) filed a patent application for a hardware design of the switch and network infrastructure; and (7) provided data on timing details of the hybrid memory cube to our external collaborator, ARM, Ltd., which develops microprocessor technology for a variety of digital electronics devices.

Publications and Presentations

- Gokhale, M., S. Lloyd, and C. Hajas, "Near memory data structure rearrangement." Proc. 2015 Intl. Symp. Memory Systems. (2015). LLNL-PROC-675466. http://dx.doi.org/10.1145/2818950.2818986

- Gokhale, M., S. Lloyd, and C. Macaraeg, Hybrid memory cube performance characterization on data-centric workloads. IA3 2015: Workshop on Irregular Applications: Architectures and Algorithms, Austin, TX, Nov. 15, 2015. LLNL-CONF-676776.

- Lloyd, S., R. Pearce, and M. Gokhale, Emulator, Version: 1.0. (2016). LLNL-CODE-681658.