Eric Wang (15-ERD-050)

Abstract

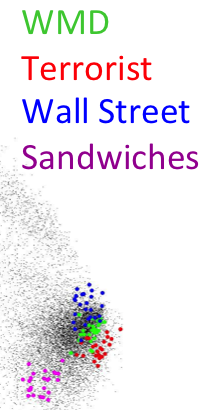

The vast amount of digital information from social networks, e-mail, real-time chat, and blogs offers enormous opportunities for analysts to gain insight into patterns of activity and to detect national security threats. However, systems must be developed to analyze not only the enormous quantity of data, but also the diverse forms in which it arrives. We propose to improve analysts' abilities to discover illicit production of weapons of mass destruction by automatically matching intelligence to process templates created by Lawrence Livermore subject matter experts. A large, robust, and unified semantic vector space will be created from large collections of documents. (A semantic space is a mathematical vector structure in which related concepts or words lie close together, as shown in the figure.) Additionally, novel algorithms will be developed to map non-text data such as imagery, graphs, process templates, and video into the semantic space. We will integrate all technologies developed in this project into existing LLNL computing assets. If successful, this will give analysts a powerful automated tool for analysis across enormous data sets. We intend to assemble a diverse team of in-house machine-learning and high-performance computing experts, and to build upon existing algorithms to develop the initial version of our system.
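As a rough illustration of what "proximate" means in a shared semantic space, the sketch below ranks items from different modalities by cosine similarity to a query vector. It is not the project's implementation: the three-dimensional vectors, the item labels, and the helper names `cosine_similarity` and `nearest` are all invented for illustration, and real embeddings would be learned from large document collections and have far more dimensions.

```python
import math


def cosine_similarity(u, v):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen here: a zero vector is similar to nothing
    return dot / (norm_u * norm_v)


def nearest(query, space, k=2):
    """Return the labels of the k items in `space` most similar to `query`."""
    ranked = sorted(
        space.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [label for label, _ in ranked[:k]]


# Hypothetical toy embeddings; labels carry a made-up modality prefix.
TOY_SPACE = {
    "text:report_a": [0.9, 0.1, 0.0],
    "image:photo_a": [0.8, 0.2, 0.1],
    "text:report_b": [0.0, 0.1, 0.9],
}

# A query near the first axis retrieves both a document and an image.
print(nearest([1.0, 0.0, 0.0], TOY_SPACE, k=2))
```

Because text and image items live in the same space, a single similarity function serves both, which is the property that makes cross-modal search possible.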

We expect our proposed system to provide analysts with a powerful, highly automated, and unified tool for quickly identifying, comparing, and even anticipating threats of illicit production of weapons of mass destruction in an ever-shifting global intelligence landscape. The system will be scalable to various data sets and will incorporate many different data modalities, including text, entity graphs, process templates, images, and video. All data modalities will share a common, robust semantic space trained on vast real-world data collections, enabling cross-modal, context-aware searching, analysis, and prediction of fast-emerging threats. We will also employ a variety of metrics to measure the efficacy of our system, both at the individual component level and at the threat-detection level. Individual components will be measured by community-accepted benchmarks such as the probability distribution of text and the search precision and recall for documents, imagery, and video, as well as by new metrics for cross-modal transfer. System-level performance metrics will be developed to measure the improvement in analyst productivity when using our tools compared to a baseline. The system will be designed to integrate into the existing LLNL computing infrastructure.
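Search precision and recall, named above as component-level benchmarks, have standard definitions that a short sketch can make concrete. The function name `precision_recall` and the document identifiers are hypothetical, and the code assumes set-based (unranked) retrieval rather than any particular evaluation protocol used in the project.

```python
def precision_recall(retrieved, relevant):
    """Compute set-based precision and recall for one query.

    precision = relevant items retrieved / all items retrieved
    recall    = relevant items retrieved / all relevant items
    """
    retrieved_set = set(retrieved)
    relevant_set = set(relevant)
    hits = len(retrieved_set & relevant_set)
    # An empty denominator yields 0.0 here; this is a convention, not a standard.
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(relevant_set) if relevant_set else 0.0
    return precision, recall


# Hypothetical query: four results returned, three documents truly relevant.
p, r = precision_recall(["d1", "d2", "d3", "d4"], ["d1", "d3", "d7"])
print(f"precision={p:.2f} recall={r:.2f}")
```

The same computation applies whether the retrieved items are documents, images, or video segments, as long as relevance judgments exist for the query.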

Mission Relevance

By shifting the burden of integrating the pieces from the analyst to the machine, a successful project will free analysts to perform high-level decision-making and analysis tasks rather than spend significant time in the weeds. We will create an improved computational capability to provide decision makers with early warning of threats in a fast-moving, ever-changing intelligence and threat landscape. Developing this capability is well aligned with the strategic focus area of cyber security, space, and intelligence. Our approach will result in better awareness of threats facing the nation and will support the Laboratory's core competency in high-performance computing, simulation, and data science.

FY15 Accomplishments and Results

In FY15 we (1) brought the semantic embedding tools in-house onto our Spark cluster-computing platform; (2) successfully demonstrated early image-to-semantic embedding linkage, allowing cross-modal search and retrieval of images and documents; (3) obtained preliminary results on entity extraction and on mapping graphs onto the semantic space; and (4) continued our integration into the existing LLNL data-analysis framework.