Barry Rountree (14-ERD-065)

Abstract

The field of high-performance computing faces a profound change as we move towards exascale computation with one quintillion floating point operations per second. For the first time, users will have to optimize codes in the presence of limited and variable electrical power. Exascale computing presents a new performance problem of how to best get the most science out of each watt, rather than out of each node. Any code run at scale will have to address this issue. While it may be initially possible to deal with these limitations by over-provisioning and under-utilizing scarce power resources, we decided to demonstrate how addressing these limitations can lead to full utilization of power resources and an order-of-magnitude improvement in throughput. Our ultimate goal was to influence the design of the first several generations of exascale systems and their software ecosystems to maximize performance per watt of power. We expect to influence the design of exascale systems, having demonstrated that power-aware approaches will reliably result in significant performance improvements. Our project focused on solving three problems in power-constrained performance optimization: (1) how to mathematically bound potential performance under a power constraint; (2) how to approach this bound using a runtime system; and (3) how to create a power-aware job scheduler. By meeting these three objectives, we had an impact far beyond our initial expectations and have successfully transitioned this research to multiple ongoing funding streams.

Background and Research Objectives

In 2012, a substantial number of academic efforts focused on making high-performance computing more energy-efficient.1–5 Most of these efforts attempted to trade off performance for energy savings, and while substantial energy savings were demonstrated repeatedly, the performance loss prevented the work from being developed or deployed further in large supercomputing centers. In particular, DOE centers at the time prioritized “performance-at-all-costs” in order to maximize the science done over the lifetime of a given machine. That year also marked a growing awareness that power (measured in watts), not energy (measured in watt-hours), would be a first-order design constraint in future exascale systems. Exascale computing presented a new performance problem of how to best get the most science out of each watt, rather than out of each node. Any code run at scale will have to address this issue, and if solutions are not ready for code teams when the first power-limited systems are delivered, the result will be unnecessarily poor performance.

The year 2012 also marked the first public documentation of Intel’s running average power limit (RAPL) technology, which for the first time allows a hardware-enforced, user-specified processor power bound. In the first paper published applying this technology to high-performance computing, we provided a proof-of-concept that RAPL could indeed be effective in a DOE environment.6 This LDRD project set out to define a new research direction away from energy savings, focusing instead on performance optimization under a power bound. RAPL provided a mechanism for experimenting with power bounds, exascale gave us the rationale, and our early work provided a template—create performance models and translate them into runtime and scheduling systems.7–9

Scientific Approach and Accomplishments

Through this project, we (1) developed a mathematical optimization approach for maximizing performance under a power bound that did not rely on integer programming, (2) advanced the state of the art in runtime power management, and (3) created ways to handle job scheduling on a power-limited high-performance computing system.10–12 These accomplishments were the result of close collaboration with Prof. David Lowenthal at the University of Arizona in Tucson.

Performance Optimization

Our first accomplishment was a solution to the problem of how to optimize performance under a power bound.10 Execution of an MPI+OpenMP program (that is, a hybrid of a message passing interface with open multiprocessing capability) was represented as a directed acyclic graph in which edges represented computation and communication dependencies and nodes represented the boundaries of “tasks” (computation that occurred between two MPI communication calls). In this formulation, the length of the critical path through the graph equals the execution time. Each task had a measured execution time and power level for each possible combination of discrete central processing unit (CPU) clock frequency and number of active OpenMP threads. The problem was to choose a schedule of per-task frequencies and OpenMP thread counts that minimized execution time (the critical path) while staying under a given power bound. As formulated, the potential clock frequencies are discrete, as are the resulting potential execution times, and tasks are only partially ordered across processors (although they are fully ordered on any given processor). The result is an integer programming formulation that cannot scale beyond a handful of tasks.
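The formulation above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the two-task graph, the configuration names, the timing and power numbers, and the simplification that tasks sharing both endpoints run concurrently. It is not the project's model; it only shows how execution time follows from the critical path and why exhaustive search over discrete configurations grows exponentially with the number of tasks.

```python
from itertools import product

# Hypothetical toy instance: two MPI ranks each run one task between the same
# pair of task boundaries. An edge is (source_node, destination_node, task).
EDGES = [("start", "sync", "rank0_task"), ("start", "sync", "rank1_task")]

# Invented measurements: configuration -> (seconds, watts) for each task.
CONFIGS = {
    "rank0_task": {"fast": (4.0, 60.0), "slow": (6.0, 40.0)},
    "rank1_task": {"fast": (8.0, 60.0), "slow": (12.0, 40.0)},
}


def critical_path(edges, seconds):
    """Length of the longest path through the DAG, i.e. the execution time."""
    preds = {}
    nodes = set()
    for src, dst, task in edges:
        nodes.update((src, dst))
        preds.setdefault(dst, []).append((src, task))
    finish = {}

    def earliest(node):
        # Earliest time a boundary is reached: the slowest incoming task decides.
        if node not in finish:
            finish[node] = max(
                (earliest(src) + seconds[task] for src, task in preds.get(node, [])),
                default=0.0,
            )
        return finish[node]

    return max(earliest(node) for node in nodes)


def peak_power(edges, watts):
    """Assumption: tasks sharing both endpoints run concurrently, so power adds."""
    phases = {}
    for src, dst, task in edges:
        phases[(src, dst)] = phases.get((src, dst), 0.0) + watts[task]
    return max(phases.values())


def best_schedule(edges, configs, power_bound_w):
    """Exhaustive search over per-task configurations (exponential in tasks)."""
    tasks = sorted(configs)
    best = None
    for choice in product(*(configs[t] for t in tasks)):
        seconds = {t: configs[t][c][0] for t, c in zip(tasks, choice)}
        watts = {t: configs[t][c][1] for t, c in zip(tasks, choice)}
        if peak_power(edges, watts) > power_bound_w:
            continue  # violates the power bound
        length = critical_path(edges, seconds)
        if best is None or length < best[0]:
            best = (length, dict(zip(tasks, choice)))
    return best


result = best_schedule(EDGES, CONFIGS, power_bound_w=100.0)
print(result)
```

In this toy case the bound rules out running both tasks fast, and the search slows the shorter task so that the longer one, which sits on the critical path, can keep its fast configuration.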

Our primary contribution was two simplifications. In the first, the order of tasks relative to each other was fixed so as to reach reasonable solutions. In the second, CPU clock frequencies were treated as a continuous value and rounded down to the nearest discrete value during postprocessing. These two simplifications allowed the power bound to be evaluated whenever a new task began. The results showed that static power assignment could lead to performance up to 41% worse than the theoretical optimum, which motivated our continuing work in power-constrained performance optimization. (See Figure 1.)
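The rounding step in the second simplification can be sketched as follows. The frequency table and the function name are hypothetical, and the clamping behavior for values below the lowest step is an assumption of this sketch rather than something the report specifies. Rounding down rather than to the nearest step is plausibly chosen because a lower frequency should not draw more power than the relaxed solution allowed.

```python
from bisect import bisect_right


def round_down_frequency(freq_ghz, available_ghz):
    """Map a relaxed (real-valued) frequency to the nearest lower discrete step."""
    steps = sorted(available_ghz)
    index = bisect_right(steps, freq_ghz)
    if index == 0:
        # Assumption: below the lowest step we clamp to it, although this
        # could exceed the power the relaxed solution budgeted for the task.
        return steps[0]
    return steps[index - 1]


# Hypothetical set of discrete CPU clock frequencies, in GHz.
STEPS_GHZ = [1.2, 1.5, 1.8, 2.1, 2.4, 2.6]

print(round_down_frequency(2.37, STEPS_GHZ))  # a value between two steps
print(round_down_frequency(2.4, STEPS_GHZ))   # a value exactly on a step
```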

Runtime Power Management

We took the lessons learned from our performance optimization work to create the Conductor runtime system.12 Instead of relying on the exhaustive generation of traces to determine ideal configurations, Conductor explores the central processing unit clock frequency and OpenMP thread count spaces during the initial timesteps of the application. Tasks that block in message passing interface communication calls are by definition off the critical path, and a configuration is chosen to slow the task to the point where idle time is minimized. Tasks that do not block may be on the critical path and the system chooses the configuration that allows as-fast-as-possible execution.
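The selection rule described above can be sketched in a few lines. This is an illustrative reading of the paragraph, not Conductor's implementation: the `Config` type, the sample measurements, and the assumption that slack is measured while the task runs its fastest configuration are all hypothetical.

```python
from typing import NamedTuple


class Config(NamedTuple):
    freq_ghz: float
    threads: int
    time_s: float   # measured task time under this configuration
    power_w: float  # measured task power under this configuration


def choose_config(configs, slack_s):
    """Pick a configuration for one task given its observed blocking time."""
    fastest = min(configs, key=lambda c: c.time_s)
    if slack_s <= 0:
        # The task never blocks, so it may be on the critical path: run flat out.
        return fastest
    # The task blocks, so it is off the critical path. Slow it as far as
    # possible without finishing later than it would have stopped waiting.
    deadline = fastest.time_s + slack_s
    fitting = [c for c in configs if c.time_s <= deadline]
    return max(fitting, key=lambda c: c.time_s)


# Invented exploration results for a single task.
EXPLORED = [
    Config(2.6, 8, 4.0, 80.0),
    Config(2.0, 8, 5.0, 60.0),
    Config(1.5, 8, 6.5, 45.0),
    Config(1.2, 4, 9.0, 30.0),
]

print(choose_config(EXPLORED, slack_s=3.0))
print(choose_config(EXPLORED, slack_s=0.0))
```

With 3 seconds of slack the task can be stretched from 4.0 to 6.5 seconds, which in this invented data frees 35 watts for other processors.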

After the initial configuration iterations, power is reallocated among processors such that the total power of all processors meets the job power bound. Power is rebalanced continuously at timestep boundaries throughout the rest of the run. Evaluation of the algorithm on the Cab cluster at Livermore showed significant improvements over static power allocations, but most of these improvements came from the configuration exploration rather than dynamic reallocation of power. Execution time improvement over several benchmarks and production codes averaged 19.1%, with a best-case improvement of 30%.
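One simple way to picture the reallocation step is sketched below. The policy shown (scale everything down if over the bound, otherwise hand surplus power evenly to processors believed to be on the critical path, up to a per-processor ceiling) is an assumption chosen for illustration and is not claimed to be Conductor's algorithm. All names and numbers are hypothetical.

```python
def rebalance(used_w, critical, job_bound_w, max_w):
    """Return a per-processor power allocation that respects the job bound."""
    total = sum(used_w.values())
    if total > job_bound_w:
        # Over budget: scale every processor down proportionally.
        scale = job_bound_w / total
        return {proc: watts * scale for proc, watts in used_w.items()}

    alloc = dict(used_w)
    surplus = job_bound_w - total
    targets = [p for p in critical if alloc[p] < max_w]
    while surplus > 1e-9 and targets:
        share = surplus / len(targets)
        for proc in targets:
            grant = min(share, max_w - alloc[proc])
            alloc[proc] += grant
            surplus -= grant
        # Processors that hit their ceiling drop out of the next round.
        targets = [p for p in targets if max_w - alloc[p] > 1e-9]
    return alloc


# Invented measurements taken at a timestep boundary, in watts.
USED_W = {"p0": 40.0, "p1": 40.0, "p2": 60.0, "p3": 60.0}

allocation = rebalance(USED_W, critical=["p2", "p3"], job_bound_w=240.0, max_w=80.0)
print(allocation)
```

Calling such a function at every timestep boundary would correspond to the continuous rebalancing described above.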

Job Scheduling

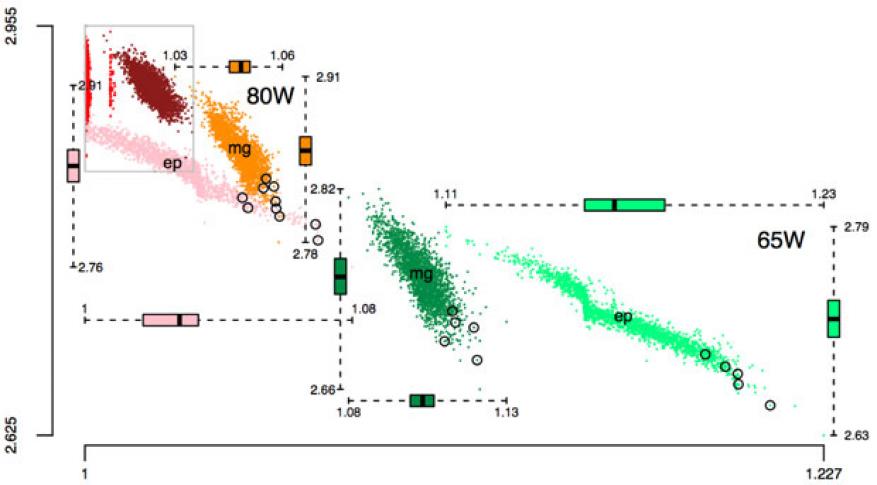

We also moved up the software stack to explore the impact of limited power on job scheduling.11 A power-limited system by definition is hardware-overprovisioned: There is more hardware than can be used at maximum power simultaneously. For jobs that run on the entire machine, a runtime system is sufficient for approaching optimal performance. Where multiple jobs are running, a job scheduler must allocate power among jobs, giving each job its own individual power bound. Where runtime systems attempt to minimize execution time, schedulers are evaluated on their ability to minimize submission-to-execution-completion time (“end-to-end” time) or to maximize throughput and/or utilization.

Our research resulted in the Resource Manager for Power (RMAP) algorithm, which is implemented as a plug-in to the simulator that is part of the Simple Linux Utility for Resource Management (SLURM) job scheduling system used at Livermore.11 The initial algorithm is straightforward: Jobs are submitted with a request for a certain amount of time and power and a certain number of nodes. Jobs are scheduled on a first-in, first-out basis. This simple algorithm tends to leave “holes” in the schedule: Two large jobs in succession may have to run serially with the result that smaller jobs submitted later are delayed until both jobs are complete. This problem can be mitigated by “backfilling,” whereby smaller jobs that arrive later can be placed into the holes caused by scheduling larger jobs. The innovation in our work was in understanding that while the suggested number of nodes for a job will likely give optimal job execution time, that particular configuration may not be optimal for end-to-end performance, throughput, or utilization. By modifying the backfilling algorithm to account for both nodes and power, and by allowing jobs to be given a different number of nodes than requested, we were able to realize up to 31% (19% on average) and up to 54% (36% on average) faster average turnaround time when compared to worst-case provisioning and naive overprovisioning, respectively.
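The idea of backfilling on both nodes and power, with a job allowed to run in a shape other than the one it requested, can be sketched as follows. This is a simplified, single-decision illustration and not the RMAP algorithm: the `Shape` and `Job` types, the notion of a "shadow time" before which a backfilled job must finish so that the head job is not delayed, and all numbers are assumptions.

```python
from typing import List, NamedTuple, Optional, Tuple


class Shape(NamedTuple):
    nodes: int
    power_w: float
    runtime_s: float  # estimated runtime in this shape


class Job(NamedTuple):
    name: str
    shapes: List[Shape]  # first entry is the requested shape, rest are alternatives


def pick_backfill(
    queue: List[Job], free_nodes: int, free_power_w: float, shadow_s: float
) -> Optional[Tuple[str, Shape]]:
    """Find a job behind the queue head that fits the current hole."""
    for job in queue[1:]:  # queue[0] is the blocked head job
        for shape in job.shapes:
            fits_nodes = shape.nodes <= free_nodes
            fits_power = shape.power_w <= free_power_w
            # Must finish before the head job's reserved start time.
            fits_time = shape.runtime_s <= shadow_s
            if fits_nodes and fits_power and fits_time:
                return job.name, shape
    return None


# Invented queue: the head job is waiting for 16 nodes to become free.
QUEUE = [
    Job("big", [Shape(16, 1280.0, 900.0)]),
    Job("mid", [Shape(8, 640.0, 300.0), Shape(4, 280.0, 550.0)]),
    Job("small", [Shape(2, 160.0, 200.0)]),
]

choice = pick_backfill(QUEUE, free_nodes=4, free_power_w=300.0, shadow_s=600.0)
print(choice)
```

Here the job named "mid" cannot run in its requested 8-node shape, but its 4-node alternative fits the hole in nodes, power, and time, so it starts earlier than it would under a nodes-only backfill of the requested shape.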

Other Accomplishments

Power research requires low-level machine measurement and configuration. This kind of work is not appropriate for production clusters, and when we first proposed our research, we did not have facilities onsite that could support such research. This project allowed us to purchase equipment for use in Livermore's Center for Applied Scientific Computing Power Lab, including four machines on an isolated network that gave Laboratory staff, students, and visiting faculty the ability to run invasive experiments. The Power Lab supported the evaluation of multiple approaches to power measurement, ranging from external power meters to baseboard controllers on the motherboard to meters on the processor itself. The Power Lab also allowed development, testing, and validation of system-level software that uses these meters. The two most important of these software projects were libmsr and msr-safe.13,14 The latter provides a whitelisted, batch-oriented method of reading and writing model-specific registers on the processor. These registers handle, among other things, power capping and measurement, thermal control, and hardware performance counters. As a userspace library, libmsr provides a “friendlier” user interface to these registers, allowing users to specify watts and degrees Celsius, instead of scaled bit strings. The libmsr and msr-safe projects have been incorporated into the Tri-lab Operating System Stack and the Performance Application Programming Interface, and are now a requirement for Intel’s Global Energy Optimization software.
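To make "scaled bit strings" concrete, the sketch below converts between watts and the raw package power-limit field. It is pure arithmetic on example values and does not touch hardware or use libmsr; the function names are hypothetical. The bit layout assumed here (power-unit exponent in bits 3:0 of the RAPL unit register, power limit in bits 14:0 of the package limit register) follows Intel's published register descriptions as we understand them and should be checked against the manual for a given processor.

```python
POWER_UNIT_MASK = 0xF      # assumed: bits 3:0 of the RAPL power-unit register
POWER_LIMIT_MASK = 0x7FFF  # assumed: bits 14:0 of the package power-limit register


def power_unit_watts(unit_register_raw):
    """Watts represented by one count of a power field: 1 / 2**exponent."""
    exponent = unit_register_raw & POWER_UNIT_MASK
    return 1.0 / (1 << exponent)


def decode_limit_watts(limit_register_raw, unit_register_raw):
    """Translate the raw power-limit field into watts."""
    counts = limit_register_raw & POWER_LIMIT_MASK
    return counts * power_unit_watts(unit_register_raw)


def encode_limit_field(watts, unit_register_raw):
    """Translate watts into the raw field value, rounding down to whole counts."""
    counts = int(watts / power_unit_watts(unit_register_raw))
    if not 0 <= counts <= POWER_LIMIT_MASK:
        raise ValueError("power limit does not fit in the 15-bit field")
    return counts


# Example raw values (illustrative, not read from a real machine).
UNIT_RAW = 0x000A0E03  # low nibble 3 -> one count is 1/8 watt
LIMIT_RAW = 0x2F8      # 760 counts

print(power_unit_watts(UNIT_RAW))
print(decode_limit_watts(LIMIT_RAW, UNIT_RAW))
print(hex(encode_limit_field(95.0, UNIT_RAW)))
```

A userspace library that hides this scaling lets a user ask for a bound in watts without knowing the unit exponent of the particular processor.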

Impact on Mission

Many core missions in national and energy security at the Laboratory are dependent on the predictive simulation capability of large-scale computers, which are moving into the exascale realm. Exascale systems will be intrinsically power limited. Our research focused on enabling optimized software in a new era of power-constrained supercomputing, in support of Livermore's core competency in high-performance computing, simulation, and data science. Our success included new capabilities, new work brought into the Lab, and new staff.

Tangible Power Savings on Existing Systems

Our investigation of the discrepancy between processor power (reported via Intel’s running average power limit technology) and power measured at the power supply (measured via the OpenIPMI project’s Intelligent Platform Management Interface sensors tool) resulted in not only isolating 100 watts per node to system fan use, but also the observation that the fan power consumption was independent of load. Changing the fan settings in the basic input/output system so they would be load-responsive saved (and continues to save) 15 kilowatts on the Catalyst cluster, and has caused Livermore to begin evaluating default system fan settings. This work was made possible by the porting work on our msr-safe kernel module and libmsr library that allowed us to take the relevant measurements on Catalyst.

Research Productized in CRADA

The addition of whitelisting individual model-specific registers and batch access (which provides dramatic performance improvement over the stock Linux kernel approach) has led Intel to require the use of msr-safe in its Global Energy Optimization runtime system.15 This runtime system, in turn, is being installed on machines purchased under the “Collaboration Oak Ridge Argonne Livermore” procurements and is expected to be used on future systems as well. The Global Energy Optimization runtime system also relies on algorithms developed as part of the creation of our research prototype Conductor runtime system.12 Conductor continues to be considered best-in-class among power-balancing runtime systems, and the effectiveness of the Global Energy Optimization runtime system (and future runtime systems) will be measured against it.

Incorporation of Research into Flux

Our power-aware scheduling work has had an increasing influence on the design of Flux, a resource manager at the Laboratory.11 Flux aims to treat all shared resources—including disk bandwidth, network bandwidth, and power—as allocatable and schedulable. This research demonstrated that power-aware job scheduling leads to significant performance improvement in power-limited systems.

Research Used in Several Other Projects

Our research has been the basis of several further efforts: the Gremlins project, which is part of the Extreme Materials at Exascale Co-Design Center; the Argo project, which is part of DOE's research into exascale operating systems; and most recently, two funded projects on operating systems and runtime power control that are under the umbrella of the DOE’s Exascale Computing Project.16,17 While libmsr and msr-safe are the most visible of our results in these projects, our work has allowed the Laboratory to develop a reputation for power management as a core competency within the Center for Applied Scientific Computing.

Project-Funded Students Continue to Contribute

Finally, our project funded 14 students for summer internships. Out of this group, Kathleen Shoga was hired by Livermore Computing immediately after completing her undergraduate work and Tapasya Patki was hired immediately after defending her Ph.D. dissertation.

Conclusion

Our project essentially solved the most important problems in power-constrained performance optimization for “classical” multicore computing: a tractable performance model, a working runtime system, a working job scheduler, and system software to validate both. But even as these solutions are being incorporated into production software, hardware continues to evolve. Future work might include:

Custom/customizable firmware. Future processors may soon offer much finer-grained power control. While that level of granularity may not be appropriate for control at the system software level, we need to make sure the firmware that makes power decisions is tuned for high-performance computing workloads. Gaining this assurance will require having influence over, and possibly access to, processor firmware. Ultimately, we may reach a point where tuning firmware per application will result in sufficient performance gains to justify the substantial overhead in expertise that it would require.

Power control and modeling of exotic architectures. The DOE Exascale Computing Project has put a great deal of emphasis on exploring “novel” architectures. Initial results show great promise for the power efficiency of general-purpose graphics processing units, but even greater efficiencies may be available with field-programmable gate arrays or ARM-brand processors.

Performance reproducibility and power. Our existing programming model relies on the assumption that experiments are reproducible. Active power management constantly reconfigures the machine while an experiment is running. While this should not affect the output of the application, this constant variation does pose a problem for the science of performance optimization. How can we tell whether or not a particular change resulted in improved performance when variation in execution time from run to run can exceed 20%? Given enough runs, we can establish some statistical confidence in the improvement, but for optimization at scale that is probably not an option. Are there other metrics besides execution time that are less affected by active power management and that correlate well with statistically significant performance improvement? Resource-constrained performance optimization could be one. Also, power is not the only schedulable resource. While projects such as Livermore's Flux have focused on shared schedulable resources such as bandwidth and power, there are other tradeoffs to be made within the job. Reducing computational accuracy can result in lower power, as can a reduction in visualization fidelity. Future work could explore how we can take advantage of these tradeoffs (and express them to the users of the applications) so that scarce resources such as power are being spent on results that matter to the scientists using our systems.

Finally, our work has, in large part, defined the field of power-constrained performance optimization. We have established lasting, national collaborations with the University of Arizona; University of Georgia in Athens; James Madison University in Harrisonburg, Virginia; North Carolina State University in Raleigh; University of Oregon in Eugene; University of Texas at El Paso; Intel; and the Oak Ridge and Los Alamos national laboratories. We have also established collaborations internationally, with the Tokyo Institute of Technology in Japan and the Leibniz Supercomputing Centre of the Bavarian Academy of Sciences and Humanities in Garching, Germany. In terms of impact, the idea that we can no longer write codes for a fixed machine architecture may eventually prove to be as profoundly disruptive as multicore programming. In its commitment to this research, in part through this project, Livermore is positioned to be a leader in the field.

References

1. Hsu, C., and W. Feng, “A power-aware run-time system for high-performance computing.” Proc. 2005 ACM/IEEE Conf. Supercomputing. (2005). http://dx.doi.org/10.1109/SC.2005.3

2. Ge, R., et al., CPU MISER: A performance-directed, run-time system for power-aware clusters. 2007 Int. Conf. Parallel Processing. (2007). http://dx.doi.org/10.1109/ICPP.2007.29

3. Curtis-Maury, M., et al., “Prediction models for multi-dimensional power-performance optimization on many cores,” PACT '08: Proc. 17th Intl. Conf. Parallel Architectures and Compilation Techniques. (2008). http://dx.doi.org/10.1145/1454115.1454151

4. Freeh, V., et al., “Just-in-time dynamic voltage scaling: Exploiting inter-node slack to save energy in MPI programs.” J. Parallel Distr. Comput. 69(9), 1175 (2008). http://dx.doi.org/10.1016/j.jpdc.2008.04.007

5. Etinski, M., et al., “Parallel job scheduling for power constrained HPC systems.” Parallel Comput. 38(12), 615 (2012). http://dx.doi.org/10.1016/j.parco.2012.08.001

6. Rountree, B., et al., “Beyond DVFS: A first look at performance under a hardware-enforced power bound.” Proc. 2012 IEEE 26th Intl. Parallel and Distributed Processing Symp. Workshops and PhD Forum. (2012). http://dx.doi.org/10.1109/IPDPSW.2012.116

7. Rountree, B., et al., “Bounding energy consumption in large-scale MPI programs.” Proc. 2007 ACM/IEEE Conf. Supercomputing (2007).

8. Rountree, B., et al., “Practical performance prediction under dynamic voltage frequency scaling.” Proc. 2011 Intl. Green Computing Conf. and Workshops (2011). http://dx.doi.org/10.1109/IGCC.2011.6008553

9. Rountree, B., et al., “Adagio: Making DVS practical for complex HPC applications.” Proc. 23rd Intl. Conf. Supercomputing. (2009). http://dx.doi.org/10.1145/1542275.1542340

10. Bailey, P., et al., “Finding the limits of power-constrained application performance.” SC '15: Proc. Intl. Conf. High Performance Computing, Networking, Storage and Analysis (2015). http://dx.doi.org/10.1145/2807591.2807637

11. Patki, T., et al., “Practical resource management in power-constrained, high performance computing.” Proc. 24th Intl. Symp. High-Performance Parallel and Distributed Computing. (2015). LLNL-CONF-669277. http://dx.doi.org/10.1145/2749246.2749262

12. Marathe, A., et al., “A run-time system for power-constrained HPC applications.” Proc. High Performance Computing: 30th Intl. Conf., ISC High Performance 2015, Frankfurt, Germany, July 12–16, 2015. LLNL-CONF-667408. http://dx.doi.org/10.1007/978-3-319-20119-1_28

13. Shoga, K., et al., Whitelisting MSRs with MSR-Safe. 3rd Workshop on Extreme-Scale Programming Tools, New Orleans, LA, Nov. 17, 2014. LLNL-PRES-663879.

14. Walker, S., and M. McFadden, Best practices for scalable power measurement and control. 2016 IEEE Intl. Parallel and Distributed Processing Symp. Workshops (2016). http://dx.doi.org/10.1109/IPDPSW.2016.91

15. Eastep, J., et al., Global extensible open power manager: A vehicle for HPC community collaboration toward co-designed energy management solutions. 7th Intl. Workshop in Performance Modeling, Benchmarking and Simulation of High Performance Computer Systems, Salt Lake City, UT, Nov. 14, 2016.

16. Maiterth, M., et al., Power balancing in an emulated exascale environment. 2016 IEEE Intl. Parallel and Distributed Processing Symp. Workshops (2016). http://dx.doi.org/10.1109/IPDPSW.2016.142

17. Ellsworth, D., et al., Systemwide power management with Argo. 2016 IEEE Intl. Parallel and Distributed Processing Symp. Workshops (2016). http://dx.doi.org/10.1109/IPDPSW.2016.81

Publications and Presentations

- Bailey, P., et al., Adaptive configuration selection for power-constrained heterogeneous systems. 43rd Intl. Conf. Parallel Processing (ICPP-2015), Beijing, China, Sept. 1–4, 2015. LLNL-CONF-662222.

- Marathe, A., et al., “A run-time system for power-constrained HPC applications.” Proc. High Performance Computing: 30th Intl. Conf., ISC High Performance 2015, Frankfurt, Germany, July 12–16, 2015. LLNL-CONF-667408. http://dx.doi.org/10.1007/978-3-319-20119-1_28

- Patki, T., et al., “Practical resource management in power-constrained, high performance computing,” Proc. 24th Intl. Symp. High-Performance Parallel and Distributed Computing (2015). LLNL-CONF-669277. http://dx.doi.org/10.1145/2749246.2749262