Barry Chen (14-ERD-100)

Abstract

The ability to automatically detect patterns in massive sets of unlabeled data is becoming increasingly important as new, advanced types of sensors come into use. However, the biggest obstacle to applying machine learning to this and other areas of national security data science is the sheer volume of unsorted and unexploited raw data that must be analyzed. Organizations that can collect and make sense of large datasets, and so make better decisions more rapidly, will have a competitive advantage in the information era. Machine learning technologies play a critical role in automating the data understanding process. To be maximally effective, however, they require useful intermediate representations of the data. These representations, or “features,” are transformations of the raw data into a form where patterns are more easily recognized. Deep learning is a computational approach for identifying patterns in such unlabeled data, and it has already been shown to often outperform traditional systems built on hand-engineered features. Our project focused on two things: developing and extending deep learning algorithms for learning features from vast amounts of unlabeled data, and developing a high-performance computing platform for training massive neural network models. We succeeded in developing new unsupervised feature learning algorithms for images and video and created a scalable neural network training toolkit for high-performance computing. Additionally, we helped create the world’s largest freely available image and video dataset supporting open multimedia research, and we used this dataset to train our deep neural networks. This research helped Livermore capture several externally funded projects, attract new talent, and establish collaborations with leading academic and commercial partners. 
Finally, this project demonstrated the successful training of the largest unsupervised image neural network using high-performance computing resources and helped establish the Laboratory's leadership at the intersection of machine learning and high-performance computing research.

Background and Research Objectives

As vast amounts of data in the form of images, video, audio, and text are collected, the need for computer-assisted analysis of such data grows. This data deluge presents both daunting challenges and remarkable opportunities. For machine learning researchers, the excitement comes from the tantalizing potential for improved system performance from training larger, more expressive models on vast amounts of data. Learning discriminative features directly from massive datasets, rather than hand-engineering feature representations, is one of the primary drivers of the “super-human” image recognition results achieved by deep convolutional neural networks on the ImageNet Large Scale Visual Recognition Challenge.1–4 These results depend on the availability not only of big data but also of big labels. Unfortunately, the volume and variety of data collection far outpace human annotation abilities. Crowd-sourcing may be a viable approach for generating more labels. However, many datasets are unsuitable for crowd-sourcing, either because they are sensitive or because generating high-quality labels requires technical expertise. As scientists and engineers continue to develop new sensors and measurement devices that collect new forms of data, unsupervised feature learning becomes paramount.

Our goal was to research and develop new high-performance-computing-enabled deep learning algorithms for the unsupervised learning of transferable feature representations of images and video from massive unlabeled datasets. This goal breaks down into three objectives:

(1) developing scalable neural network training algorithms and software that take advantage of high-performance computing architectures;
(2) creating new unsupervised feature learning algorithms that learn image and video features with high-quality transfer performance on new tasks; and
(3) curating datasets for training and for quantifying algorithm performance, and evaluating our algorithms on these datasets.

We achieved all of these objectives. In the next section, we describe our accomplishments for each objective in more detail.

Scientific Approach and Accomplishments

To support research in large-scale deep learning algorithms and scalable training frameworks, we worked with collaborators at the International Computer Science Institute in Berkeley, California, and at Yahoo! in Sunnyvale, California. Together we created a massive dataset for model training and evaluation, called the Yahoo! Flickr Creative Commons 100M (YFCC100M).5 It became the world’s largest open, no-strings-attached image and video dataset. “No-strings-attached” refers to the dataset's unrestrictive terms, which allow researchers to freely publish findings obtained with the data. YFCC100M consists of about 99.2 million images and 0.8 million videos uploaded to Flickr between 2004 and 2014 under Creative Commons licenses. Most of the images and videos also come with associated metadata, including content keywords, time/date stamps, locations, and camera types. To expand this metadata, our subcontractors at the International Computer Science Institute also spearheaded an annotation effort that produced event labels for videos in YFCC100M. The resulting multimedia event-detection dataset, called YLI Multimedia Event Detection (YLI-MED), is also freely available to the research community for analysis and publication.6 Together, YFCC100M and YLI-MED provided the large-scale data required for testing the scalability and performance of our deep feature learning algorithms.

In addition to being a great source of training data, YFCC100M also embodies one of the primary big data challenges: how does one find all the data associated with a desired event or concept of interest? Even optimistically, it would take a person over 9,000 hours to look at all of the images and videos in YFCC100M to find the data relevant to a single query. Our work supported some of the International Computer Science Institute’s initial research in automatic event detection on web-scale datasets. The institute developed the Evento 360 system, which automatically detects events in images and videos by clustering data based on their timestamps and keyword tags.7 Evento 360 demonstrated the utility of simple machine learning algorithms for helping users organize and query YFCC100M. It was the winning system in the Association for Computing Machinery's Multimedia 2015 Grand Challenge on Event Detection and Summarization.
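The “optimistic” qualifier can be made concrete with a quick calculation. The Python sketch below uses only the figures given above: about 99.2 million images, 0.8 million videos, and 9,000 hours of review time. It shows that the estimate allows roughly a third of a second per item, videos included, or about a year of nonstop viewing.

```python
# Back-of-the-envelope check of the "over 9,000 hours" estimate for YFCC100M.
# All input figures come from the text above.
NUM_IMAGES = 99_200_000
NUM_VIDEOS = 800_000
REVIEW_HOURS = 9_000

total_items = NUM_IMAGES + NUM_VIDEOS           # 100 million items in total
total_seconds = REVIEW_HOURS * 3600             # 32.4 million seconds of viewing
seconds_per_item = total_seconds / total_items  # implied time budget per image or video
days_nonstop = REVIEW_HOURS / 24                # viewing around the clock, no breaks

print(f"{total_items:,} items, {seconds_per_item:.3f} s per item, {days_nonstop:.0f} days nonstop")
```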

The Evento 360 system is an important first step toward a generalized solution for finding relevant content in large image and video datasets. However, its reliance on keyword tags limits its performance to well-tagged data. Ultimately, to enable machine-automated search of massive datasets like YFCC100M, we need to build feature representations for images and video and associate them with semantic information about their contents. The first step toward realizing this vision is building universal feature representations for images and video, that is, representations that transfer from one data genre to another. This work was the core technical contribution of our project. In particular, we developed deep learning algorithms and architectures for learning transferable feature representations of images and video. We also developed the high-performance computing algorithms that enable training large models on massive amounts of data.

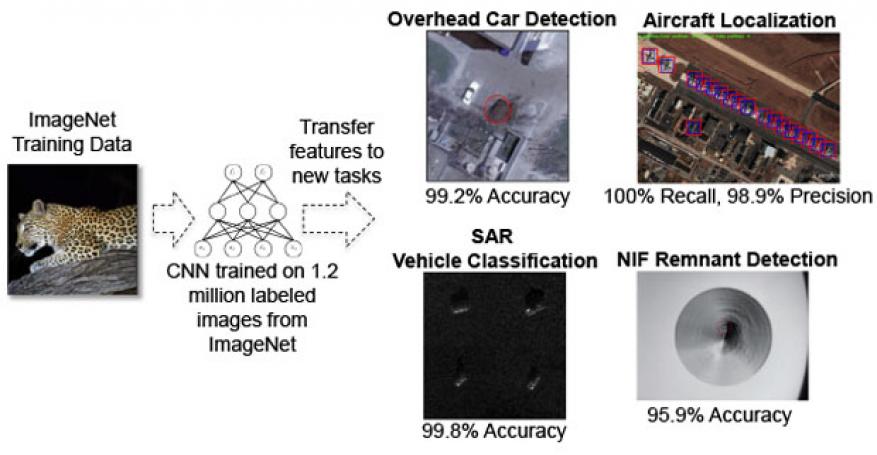

We were motivated by the tremendous success of deep convolutional neural networks (CNNs) on image recognition tasks such as ImageNet. CNNs are a type of feed-forward artificial neural network in which the connectivity pattern between neurons is inspired by the organization of the animal visual cortex. We therefore investigated the transferability of CNN image features learned via supervised training on ImageNet.1–4,8 Our general approach had three steps:

1. Specify various neural network architectures.
2. Train the network weights to optimize object classification performance on ImageNet.
3. Use the outputs of various CNN layers as features for other image recognition tasks. We excluded the last layer, which was tuned specifically for ImageNet classification.

One of our main findings was that features learned this way from consumer-generated photos generalize effectively to many different image types and applications.
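The transfer recipe above can be sketched in a few lines of NumPy: train on ImageNet, discard the ImageNet-specific final layer, and reuse an intermediate layer's outputs as features. This is a minimal illustration under stated assumptions, not the project's code. A small fully connected network with random weights stands in for a pretrained CNN. The new task is a synthetic regression target, in the spirit of car counting, fitted with a linear least-squares head while the backbone stays frozen.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a network pretrained on ImageNet: two hidden layers
# plus a final 1000-way classification layer. Real CNNs use convolutional layers;
# dense layers keep this sketch short.
W1 = rng.normal(size=(64, 128)) / np.sqrt(64)
W2 = rng.normal(size=(128, 32)) / np.sqrt(128)
W_cls = rng.normal(size=(32, 1000)) / np.sqrt(32)  # ImageNet-specific head, discarded below

def relu(x):
    return np.maximum(x, 0.0)

def extract_features(x):
    """Return penultimate-layer activations; the ImageNet head W_cls is never applied."""
    return relu(relu(x @ W1) @ W2)

# New task (e.g., regressing a car count): only a small linear head is fitted,
# while the pretrained weights W1 and W2 stay frozen.
x_new = rng.normal(size=(200, 64))   # synthetic inputs for the new task
features = extract_features(x_new)   # shape (200, 32)
true_head = rng.normal(size=32)
y_new = features @ true_head         # synthetic targets, linear in the features by construction

head, *_ = np.linalg.lstsq(features, y_new, rcond=None)
predictions = features @ head
```

Only `head` is learned for the new task. When more labeled data is available, some or all backbone layers can also be fine-tuned.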

In particular, we demonstrated that CNNs trained on ImageNet's consumer-generated photos could be transferred to several other tasks (Figure 1):9

- accurately detecting and counting cars in overhead imagery;
- detecting and localizing specific aircraft;
- classifying various vehicle types in synthetic aperture radar (SAR) images; and
- detecting remnant damage sites in images of National Ignition Facility (NIF) optics.

We also investigated the transferability of these features to image tagging by extending the popular TagProp algorithm to use CNN features. CNN image features improved tagging metrics over hand-engineered features by between 8.1% and 16.1% on the benchmark image tagging datasets IAPR-TC12 and ESP Games.10–13
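TagProp predicts an image's tags from the tags of its visually nearest training images, weighted by distance. The sketch below is a simplified, hypothetical NumPy version of that idea. It uses a fixed softmax over negative Euclidean distances to the k nearest neighbors, whereas TagProp itself learns the neighbor weighting and a per-tag calibration from data. In the extension described above, the feature vectors would be CNN features rather than hand-engineered ones.

```python
import numpy as np

def propagate_tags(query_feats, train_feats, train_tags, k=3, sigma=1.0):
    """Score tags for each query as a distance-weighted vote of its k nearest neighbors.

    query_feats: (q, d); train_feats: (n, d); train_tags: (n, t) binary matrix.
    Returns (q, t) tag relevance scores in [0, 1].
    """
    # Pairwise Euclidean distances between query and training feature vectors.
    dists = np.linalg.norm(query_feats[:, None, :] - train_feats[None, :, :], axis=-1)
    nn_idx = np.argsort(dists, axis=1)[:, :k]                 # k nearest neighbors per query
    nn_dists = np.take_along_axis(dists, nn_idx, axis=1)
    # Softmax over negative distances: closer neighbors get larger weights summing to 1.
    logits = -nn_dists / sigma
    weights = np.exp(logits - logits.max(axis=1, keepdims=True))
    weights /= weights.sum(axis=1, keepdims=True)
    # Weighted average of the neighbors' binary tag vectors.
    return np.einsum("qk,qkt->qt", weights, train_tags[nn_idx])

# Toy usage: two clusters of "images", tag 0 = "car", tag 1 = "sky".
train_feats = np.array([[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]])
train_tags = np.array([[1, 0], [1, 0], [0, 1], [0, 1]])
scores = propagate_tags(np.array([[0.05, 0.0]]), train_feats, train_tags, k=2)
scores_far = propagate_tags(np.array([[5.05, 5.0]]), train_feats, train_tags, k=4)
```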

We also explored various CNN architectures, varying the size and depth of network layers. We then developed a hybrid architecture that blends the best features of two industry-leading CNNs: the multiresolution convolutional kernels of Google’s Inception network and the residual shortcut connections of Microsoft’s ResNet.3,4 By combining these two designs, our ResCeption architecture can learn image features at varying scales and supports rich hierarchies of deeply stacked layers.9 On Livermore’s overhead car counting dataset, ResCeption networks achieved a large performance improvement over the AlexNet architecture and a modest one over Inception (19.3% and 0.89% relative gain, respectively).2,9
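The following is a forward-pass sketch of a block in the spirit of ResCeption. Parallel Inception-style branches with different kernel sizes see the same input. Their outputs are concatenated, projected back to the input width with a 1x1 convolution, and added to a ResNet-style identity shortcut. The branch kernel sizes (1, 3, 5), branch widths, random weights, and function names are illustrative assumptions, not the published ResCeption configuration. Training code is omitted.

```python
import numpy as np

def conv2d_same(x, w):
    """Stride-1, zero-padded 2D cross-correlation, as used in CNN layers.

    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W).
    """
    c_out, _, k, _ = w.shape
    p = k // 2
    _, h, width = x.shape
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))  # zero padding keeps the spatial size
    out = np.zeros((c_out, h, width))
    for dy in range(k):
        for dx in range(k):
            patch = xp[:, dy:dy + h, dx:dx + width]
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], patch)
    return out

def rescept_block(x, branch_weights, proj_weight):
    """Inception-style multi-kernel branches wrapped in a ResNet-style identity shortcut."""
    # Each branch sees the same input with a different receptive-field size.
    branches = [np.maximum(conv2d_same(x, w), 0.0) for w in branch_weights]
    merged = np.concatenate(branches, axis=0)   # stack branch outputs along channels
    # 1x1 projection back to the input channel count so the shortcut can be added.
    residual = np.einsum("oc,chw->ohw", proj_weight, merged)
    return np.maximum(x + residual, 0.0)

rng = np.random.default_rng(0)
channels, branch_width = 8, 4
x = rng.normal(size=(channels, 16, 16))
branch_weights = [0.1 * rng.normal(size=(branch_width, channels, k, k)) for k in (1, 3, 5)]
proj_weight = 0.1 * rng.normal(size=(channels, 3 * branch_width))
y = rescept_block(x, branch_weights, proj_weight)
```

The shortcut means that a block whose branches contribute nothing reduces to passing its input through. This is what lets many such blocks be stacked deeply.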

Our next significant accomplishment was the investigation and development of new unsupervised feature learning algorithms able to learn useful feature representations without the need for labeled data. This project investigated three different approaches for unsupervised feature learning: (1) extensions of autoencoder networks, (2) self-supervised context prediction networks, and (3) generative adversarial networks.

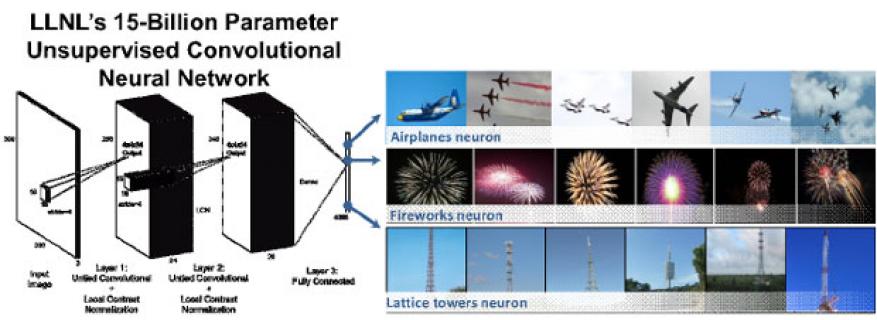

The standard deep learning approach to unsupervised feature learning is the autoencoder. It projects input data into intermediate feature representations from which the output layer can accurately reconstruct the original input.14 Working with our collaborators at Stanford University in Palo Alto, California, we extended their work on the Google Brain project. That project famously learned image concepts such as human faces, silhouettes of people, and cat faces from 1 million unlabeled YouTube images.15 We adapted Stanford’s parallel deep learning training software to our graphics processing unit (GPU) supercomputing cluster and trained an autoencoder network similar to Stanford's.15 However, our autoencoder network had 15 times more network parameters and was trained on 100 times more data (from YFCC100M), yet it used only 100 GPU compute nodes.16 The resulting autoencoder was the largest unsupervised neural network for images trained to date. It too learned interesting concepts, such as airplanes, fireworks, latticed towers, and pictures with text, all without labeled image data (Figure 2). We were able to train this massive network in eight days. However, we realized that our existing codebase would not scale beyond 100 nodes because of two bottlenecks:

- communication overhead from the serial staging of input data; and
- a diminishing amount of work per node, resulting from a limited form of parallelization tied to input image sizes.

This led us to develop the Livermore Big Artificial Neural Network training toolkit.
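The autoencoder objective can be illustrated with a deliberately small version: a single hidden layer trained by full-batch gradient descent on mean squared reconstruction error. This NumPy sketch is a simplified stand-in. The Stanford/Google Brain model and our YFCC100M network were far larger, locally connected, and trained in parallel on GPU clusters. The data, layer sizes, and learning rate here are assumptions. The hidden activations play the role of the learned features.

```python
import numpy as np

def train_autoencoder(x, hidden=16, lr=0.2, steps=1000, seed=0):
    """Fit encoder/decoder weights by gradient descent on mean squared reconstruction error."""
    rng = np.random.default_rng(seed)
    _, d = x.shape
    W_enc = rng.normal(scale=0.1, size=(d, hidden))
    b_enc = np.zeros(hidden)
    W_dec = rng.normal(scale=0.1, size=(hidden, d))
    b_dec = np.zeros(d)
    losses = []
    for _ in range(steps):
        h = np.tanh(x @ W_enc + b_enc)   # encoder: intermediate feature representation
        x_hat = h @ W_dec + b_dec        # decoder: reconstruct the input from the features
        err = x_hat - x
        losses.append(np.mean(err ** 2))
        # Backpropagate the mean squared error through decoder and encoder.
        g_out = 2.0 * err / err.size
        g_W_dec = h.T @ g_out
        g_b_dec = g_out.sum(axis=0)
        g_pre = (g_out @ W_dec.T) * (1.0 - h ** 2)   # chain rule through tanh
        g_W_enc = x.T @ g_pre
        g_b_enc = g_pre.sum(axis=0)
        W_enc -= lr * g_W_enc
        b_enc -= lr * g_b_enc
        W_dec -= lr * g_W_dec
        b_dec -= lr * g_b_dec
    return (W_enc, b_enc), losses

# Synthetic unlabeled data with low-dimensional structure (no labels are used).
rng = np.random.default_rng(1)
x = rng.normal(size=(256, 4)) @ rng.normal(scale=0.5, size=(4, 32))
(W_enc, b_enc), losses = train_autoencoder(x)
features = np.tanh(x @ W_enc + b_enc)   # learned features for downstream tasks
```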

The Livermore Big Artificial Neural Network addresses these scaling challenges by providing staged and distributed data ingest. It also uses the Elemental library to distribute both the model parameters and the data matrices.17 We focused on optimizing the toolkit for massive, deep, fully connected neural networks and achieved efficient weak and strong scaling performance.18 The toolkit achieves this through two forms of parallelism. In model parallelism, individual compute nodes are responsible for different subsets of the model. In data parallelism, different nodes compute gradients for different mini-batches of data. The Livermore Big Artificial Neural Network efficiently distributes the gradient descent computation at the heart of neural network training. However, its data parallelism is tantamount to computing a gradient on a very large batch of data very quickly. Unfortunately, large batch sizes do not necessarily lead to faster overall convergence during training. Developing data-parallel training algorithms that converge faster remains an open research topic.
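The two forms of parallelism described above can be simulated on a single machine to show why data parallelism amounts to computing one large-batch gradient. This NumPy sketch is a simplification: LBANN distributes matrices across nodes with Elemental over MPI rather than splitting arrays within one process. For model parallelism, a single fully connected layer is split by output columns across four hypothetical nodes. For data parallelism, the batch is split into shards. Averaging the shard gradients, weighted by shard size, reproduces the full-batch gradient exactly.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(512, 64))   # one large batch of inputs
Y = rng.normal(size=(512, 10))   # regression targets
W = rng.normal(size=(64, 10))    # weights of a single fully connected layer
num_nodes = 4                    # hypothetical number of compute nodes

def mse_grad(x, y, w):
    """Gradient of 0.5 * mean_i ||x_i w - y_i||^2 with respect to w."""
    return x.T @ (x @ w - y) / x.shape[0]

# Model parallelism: each simulated node owns a block of output columns of W
# and computes only its slice of the layer output.
w_blocks = np.array_split(W, num_nodes, axis=1)
out_model_parallel = np.concatenate([X @ w_b for w_b in w_blocks], axis=1)

# Data parallelism: each simulated node computes a gradient on its own shard of
# the batch; the size-weighted average of the shard gradients equals the
# gradient of the whole batch.
x_shards = np.array_split(X, num_nodes)
y_shards = np.array_split(Y, num_nodes)
shard_grads = [mse_grad(xs, ys, W) * len(xs) for xs, ys in zip(x_shards, y_shards)]
grad_data_parallel = sum(shard_grads) / len(X)
grad_full_batch = mse_grad(X, Y, W)
```

Because the combined gradient is identical to the large-batch gradient, adding nodes this way speeds up each step but does not reduce the number of steps needed. This is the convergence limitation noted above.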

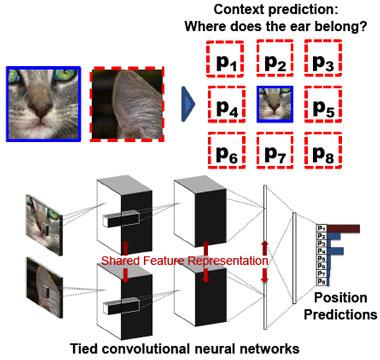

The second major approach to unsupervised feature learning is self-supervised context prediction.19 The idea is to train neural networks to predict how different parts of the input data co-occur. For images, an example context prediction task is to classify the location of a randomly selected image patch relative to another randomly selected patch. Figure 3 illustrates an example: the network's job is to learn that the ear patch is northwest of the nose patch. To perform this task well, the network must learn feature representations that capture the inherent structure of the data. The hypothesis is that these features generalize to other image recognition tasks. The key benefit of context prediction is that it is self-supervised: no human-generated labels are required, because the computer controls which contexts to present for training and knows how they relate.
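The following sketch shows that generating training examples for the relative-position task requires no annotation. Following the eight-neighbor setup of Doersch et al., it samples a center patch and one of its eight neighbors, and it returns the neighbor's position as the class label. The patch size, gap, and function and variable names are illustrative assumptions. Refinements used in practice, such as random jitter between patches, are omitted.

```python
import numpy as np

# The eight neighbor positions around a center patch, in row-major order.
OFFSETS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]
NAMES = ["NW", "N", "NE", "W", "E", "SW", "S", "SE"]

def sample_context_pair(image, patch=16, gap=4, rng=None):
    """Return (center_patch, neighbor_patch, label) for a relative-position task.

    The label indexes OFFSETS/NAMES and is known by construction, so no human
    annotation is needed.
    """
    if rng is None:
        rng = np.random.default_rng()
    step = patch + gap
    h, w = image.shape[:2]
    # Choose the center so that every one of the eight neighbors lies inside the image.
    r = rng.integers(step, h - step - patch + 1)
    c = rng.integers(step, w - step - patch + 1)
    label = int(rng.integers(len(OFFSETS)))
    dy, dx = OFFSETS[label]
    nr, nc = r + dy * step, c + dx * step
    center = image[r:r + patch, c:c + patch]
    neighbor = image[nr:nr + patch, nc:nc + patch]
    return center, neighbor, label
```

In the Figure 3 example, the nose patch would be the center and the ear patch would carry label 0 ("NW"). The network sees only the two patches and must infer that label.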

In our project, we extended the work of Doersch et al.19 In doing so, we explored new network architectures, such as our ResCeption network. We also explored new context prediction tasks, such as classifying the orientation of three image patches instead of two. One drawback of two-patch contexts is that both randomly selected patches often come from uninformative regions of the image, e.g., two patches of sky. Our triple patches strike a good balance between computational complexity and informative patch selection.

We compared the transferability of the features learned via context prediction against that of gold-standard supervised CNN features. The context prediction features achieved impressive transfer performance. For example, we trained the features on ImageNet data and fine-tuned the networks on the CompCars classification dataset. Our triple-patch features outperformed Doersch’s double-patch features (87.2% vs. 84.8%). This result is only 3.2 percentage points below that of the supervised CNN features, which is remarkable given that our triple-patch features are learned without costly hand labels. We also performed initial experiments training on the much larger set of unlabeled YFCC100M images. Increasing the amount of training data led to some improvement in classification performance. Unfortunately, time constraints prevented us from fully testing the gains from also increasing model size. Follow-on work will explore this avenue, build an understanding of what makes a context prediction task more transferable to other image recognition tasks, and generalize this method to video data.

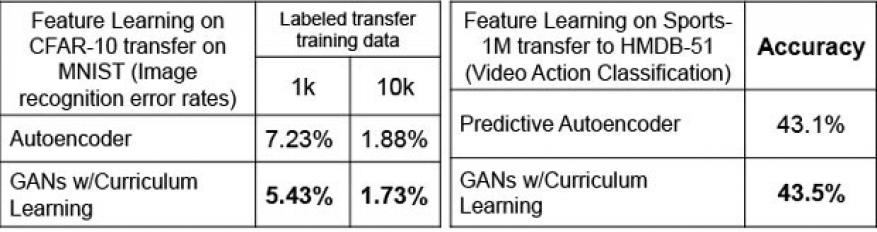

The final approach to unsupervised feature learning that we extended was generative adversarial network (GAN) training. GANs turn network training into a game played by two separate neural networks: one learns feature representations useful for generating realistic fake data, while the other learns features for catching forgeries.20 Like the other unsupervised feature learning techniques, GANs take advantage of large amounts of unlabeled data. Furthermore, the game can be played almost endlessly until the two networks reach an equilibrium at which each wins as often as it loses. Reaching this equilibrium can be difficult when one network overpowers the other, which leaves both with suboptimal features. We developed new adversarial training algorithms that stage training as a series of successively harder games, so that neither network can dominate. We demonstrated that this “curriculum learning” approach leads to dramatically more stable learning that converges more quickly to the equilibrium point. We applied our curriculum learning approach to learning features for both images and video. Features from our GANs outperformed standard autoencoder-based features (Figure 4). GAN features also excelled when labeled data for the subsequent task adaptation were limited. This is a particularly important characteristic for unsupervised feature learning approaches, given the cost of obtaining labeled data.
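For reference, the two-player game described above is usually written as the minimax objective of Goodfellow et al.20 Here D is the forgery-catching discriminator, G is the generator, p_data is the data distribution, p_z is the generator's noise prior, and p_g is the distribution of generated samples. For a fixed G, the optimal discriminator is D*_G. At the global equilibrium, p_g = p_data and D*_G outputs 1/2 everywhere, which is the formal version of each network winning as often as it loses. The notation is the standard one from that paper rather than this report's. The curriculum staging developed in this project is not captured by the objective itself.

```latex
\begin{align*}
\min_G \max_D V(D, G) &= \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big] \\
D^*_G(x) &= \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)} \\
p_g = p_{\text{data}} \;&\Longrightarrow\; D^*_G(x) = \tfrac{1}{2}
\end{align*}
```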

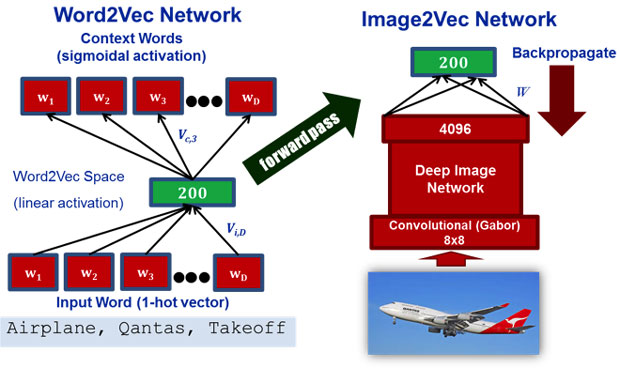

Our final significant scientific accomplishment on this project was a new learning algorithm that maps image and text features into a shared feature space, in which images and text about related concepts lie close together. Such a shared feature space enables image tagging and image search from either keywords or query images. Our bimodal learning algorithms start from separate text and image neural networks, pretrained in the various ways described earlier:

- Text network: we pretrained Word2Vec (a neural network that learns word embeddings) and GloVe (global vectors, a word embedding method based on global co-occurrence statistics) on 20 years of New York Times articles.
- Image network: a standard CNN such as VGG16, trained on ImageNet.21–23

Finally, we trained several mapping layers from the penultimate layer of VGG16 to the Word2Vec feature space, using a smaller image tagging dataset such as ESP Games or IAPR-TC12. This bimodal learning system, called Image2Vec, maps images and text into a shared semantic feature space (Figure 5).24 We benchmarked Image2Vec on the image tagging problem. Its performance was only slightly worse than that of the baseline TagProp systems, but unlike TagProp, Image2Vec was able to perform zero-shot learning.11 In our context, zero-shot learning means that the system assigns correct tags to images without ever having seen examples of those tags during training. For example, Image2Vec was able to tag images of “missiles” despite never having seen images tagged with “missile” in the training data. These developments lay the groundwork for future research on multimodal feature space learning, which unifies the features of images, text, video, and other modalities to enable generalized multimodal search.
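The zero-shot mechanism can be illustrated with a toy linear version of the mapping idea. A map from image features into the word-vector space is learned using only seen tags. A new image is then tagged by ranking every word in the vocabulary by cosine similarity to its mapped vector. Everything here is synthetic and hypothetical:

- tiny random “word vectors” stand in for Word2Vec/GloVe embeddings;
- an 8-dimensional vector stands in for VGG16's penultimate layer;
- image features are generated by a made-up linear relationship; and
- a single least-squares layer replaces Image2Vec's several trained mapping layers.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = ["car", "boat", "sky", "tree", "missile"]
# Hypothetical stand-ins: 4-d "word vectors" (real Word2Vec/GloVe vectors have
# hundreds of dimensions) and 8-d "image features" (VGG16's penultimate layer is 4096-d).
word_vecs = {word: rng.normal(size=4) for word in vocab}
M = rng.normal(size=(8, 4))  # made-up linear link between a tag's meaning and its images

def image_features(tag):
    """Synthetic image features for a picture of `tag`."""
    return M @ word_vecs[tag]

# Fit the image-to-word mapping using only tags seen in the tagging dataset;
# "missile" is deliberately held out.
seen = ["car", "boat", "sky", "tree"]
X = np.stack([image_features(t) for t in seen])   # (4, 8) image features
T = np.stack([word_vecs[t] for t in seen])        # (4, 4) target word vectors
W_map, *_ = np.linalg.lstsq(X, T, rcond=None)     # single linear mapping layer, (8, 4)

def tag_image(feat, top_k=1):
    """Map image features into word space and rank all vocabulary words by cosine similarity."""
    z = feat @ W_map
    V = np.stack([word_vecs[w] for w in vocab])
    sims = V @ z / (np.linalg.norm(V, axis=1) * np.linalg.norm(z))
    return [vocab[i] for i in np.argsort(-sims)[:top_k]]

print(tag_image(image_features("missile")))  # "missile" never appeared during fitting
```

The held-out word still has a vector in the text space, so the mapped image lands on it even though no “missile” image was used to fit `W_map`. In this noiseless toy, the match is exact. With real, noisy features it would only be approximate.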

Impact on Mission

Overall, the project is well aligned with Livermore's core competency in high-performance computing, simulation, and data science. By advancing the specific applications of classifying audio and video content, detecting anomalies in wide-area surveillance video, and modeling computer network behavior, our research is also relevant to the Laboratory's strategic focus area in cyber security, space, and intelligence. This project supports the Laboratory's national security mission by developing technologies that are broadly applicable to other mission-relevant applications, including general threat detection, Livermore's National Ignition Facility system monitoring and prediction, and the validation of advanced manufacturing parts.

Conclusion

This project, which sits at the very exciting intersection of high-performance computing and machine learning, successfully developed and demonstrated several major new capabilities: (1) a scalable neural network training toolkit for fitting multi-billion-parameter neural networks to massive datasets; (2) several unsupervised deep learning algorithms for images and video, demonstrating high-quality transferability of these features to new tasks; (3) a bimodal feature learning algorithm that successfully mapped image and text features into a single feature space enabling semantic image search and tagging; and (4) the largest open image and video dataset for multimedia research. These capabilities lay the foundation for further research on learning universal multimodal feature representations at massive scales and support the development of a new generation of situational awareness, nuclear nonproliferation, and counter-weapons-of-mass-destruction programs.

This project was also instrumental in recruiting seven new research staff members, including a world leader in multimedia retrieval research, and in attracting over half a dozen summer researchers. Additionally, this project helped Livermore establish new strategic partnerships with academia and industry, including Stanford University; the University of California, Berkeley; the International Computer Science Institute; In-Q-Tel in Arlington, Virginia; IBM, headquartered in Armonk, New York; and NVIDIA Corporation in Santa Clara, California.

References

- Russakovsky, O., et al., “ImageNet large scale visual recognition challenge.” CoRR, arXiv:1409.0575 (2014). https://arxiv.org/abs/1409.0575

- Krizhevsky, A., I. Sutskever, and G. Hinton, “ImageNet classification with deep convolutional neural networks.” Advances in Neural Information Processing Systems. The MIT Press, Cambridge, MA (2012).

- Szegedy, C., et al., “Going deeper with convolutions.” IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognition (2015). http://dx.doi.org/10.1109/CVPR.2015.7298594

- He, K., et al., “Deep residual learning for image recognition.” CoRR, arXiv:1512.03385 (2015). https://arxiv.org/abs/1512.03385

- Thomee, B., et al., “YFCC100M: The new data and new challenges in multimedia research.” Comm. ACM 59(2), 64 (2016). http://dx.doi.org/

- Bernd, J., et al., “The YLI-MED corpus: Characteristics, procedures, and plans.” CoRR, arXiv:1503.04250 (2015). https://arxiv.org/abs/1503.04250

- Choi, J., et al., “The placing task: A large-scale geo-estimation challenge for social-media videos and images.” Proc. Workshop on Geotagging and Its Applications in Multimedia. ACM, New York, NY (2014). LLNL-TR-663455. http://dx.doi.org/10.1145/2661118.2661125

- LeCun, Y., et al., “Gradient-based learning applied to document recognition.” Proc. IEEE 86(11), 2278 (1998). http://dx.doi.org/10.1109/5.726791

- Mundhenk, T., et al., “A large contextual dataset for classification, detection and counting of cars with deep learning.” Computer Vision—ECCV 2016. Springer International Publishing AG, Switzerland (2016). http://dx.doi.org/10.1007/978-3-319-46487-9_48

- Makadia, A., V. Pavlovic, and S. Kumar, “A new baseline for image annotation.” Computer Vision—ECCV 2008. Springer, Berlin, Heidelberg (2008). http://dx.doi.org/10.1007/978-3-540-88690-7_24

- Mayhew, A., B. Chen, and K. Ni, “Assessing semantic information in convolutional neural network representations of images via image annotation.” 2016 IEEE Intl. Conf. Image Processing (ICIP). The Institute of Electrical and Electronics Engineers, Inc., Piscataway, NJ (2016). LLNL-CONF-670551. http://dx.doi.org/10.1109/ICIP.2016.7532762

- Grubinger, M., et al., “The IAPR benchmark: A new evaluation resource for visual information systems.” Intl. Conf. Language Resources and Evaluation. European Language Resources Association, Paris, France (2006).

- von Ahn, L., and L. Dabbish, “Labeling images with a computer game.” Proc. SIGCHI Conf. Human Factors in Computing Systems (CHI). ACM, New York, NY (2004). http://dx.doi.org/10.1145/985692.985733

- Rumelhart, D., G. Hinton, and R. Williams, “Learning internal representations by error propagation.” Parallel distributed processing: Explorations in the microstructure of cognition, vol. 1. MIT Press, Cambridge, MA (1986).

- Le, Q., et al., “Building high-level features using large scale unsupervised learning.” Proc. 29th Intl. Conf. Machine Learning (2012). http://dx.doi.org/10.1109/ICASSP.2013.6639343

- Ni, K., et al., "Large-scale deep learning on the YFCC100M dataset." (2015). LLNL-CONF-661841. http://arxiv.org/abs/1502.03409

- Poulson, J., et al., “Elemental: A new framework for distributed memory dense matrix computations.” ACM Trans. Math. Software 39(2), 13:1 (2013). http://dx.doi.org/10.1145/2427023.2427030

- Van Essen, B., et al., “LBANN: Livermore big artificial neural network HPC toolkit.” Proc. Workshop on Machine Learning in High-Performance Computing Environments, ACM, New York, NY (2015). LLNL-CONF-677443B. http://dx.doi.org/10.1145/2834892.2834897

- Doersch, C., A. Gupta, and A. Efros, “Unsupervised visual representation learning by context prediction.” Proc. 2015 IEEE Intl. Conf. Computer Vision. The Institute of Electrical and Electronics Engineers, Inc., Piscataway, NJ (2015).

- Goodfellow, I., et al., “Generative adversarial nets.” Advances in Neural Information Processing Systems 27 (2014). https://arxiv.org/abs/1406.2661v1

- Mikolov, T., et al., “Distributed representations of words and phrases and their compositionality.” Advances in Neural Information Processing Systems 26 (2013). https://arxiv.org/pdf/1310.4546

- Pennington, J., R. Socher, and C. D. Manning, “GloVe: Global vectors for word representation.” Empirical Methods in Natural Language Processing (2014). http://dx.doi.org/10.3115/v1/D14-1162

- Simonyan, K., and A. Zisserman, “Very deep convolutional networks for large-scale image recognition.” Proc. Intl. Conf. Learning Representations. (2015). https://arxiv.org/abs/1409.1556

- Boakye, K., et al., A deep learning framework for multimodal pattern discovery. (2016). LLNL-TR-683037.

Publications and Presentations

- Boakye, K., et al., A deep learning framework for multimodal pattern discovery. (2016). LLNL-TR-683037.

- Boakye, K., et al., HPC enabled massive deep learning networks for big data. ASCR Machine Learning Workshop, Rockville, MD, Jan. 5–7, 2015. LLNL-ABS-662780.

- Choi, J., et al., “The placing task: A large-scale geo-estimation challenge for social-media videos and images.” Proc. Workshop on Geotagging and Its Applications in Multimedia. ACM, New York, NY (2014). LLNL-TR-663455. http://dx.doi.org/10.1145/2661118.2661125

- Mayhew, A., B. Chen, and K. Ni, “Assessing semantic information in convolutional neural network representations of images via image annotation.” 2016 IEEE Intl. Conf. Image Processing (ICIP). The Institute of Electrical and Electronics Engineers, Inc., Piscataway, NJ (2016). LLNL-CONF-670551. http://dx.doi.org/10.1109/ICIP.2016.7532762

- Narihira, T., et al., “Mapping images to sentiment adjective noun pairs with factorized neural net.” CoRR, arXiv:1511.06838 (2015). LLNL-PROC-679026. https://arxiv.org/abs/1511.06838

- Ni, K., et al., Large-scale deep learning on the YFCC100M dataset. (2015). LLNL-CONF-661841. http://arxiv.org/abs/1502.03409

- Sawada, J., et al., TrueNorth ecosystem for brain-inspired computing: Scalable systems, software, and applications. Supercomputing 2016, Salt Lake City, UT, Nov. 13–18, 2016. LLNL-CONF-712782

- Van Essen, B., et al., “LBANN: Livermore big artificial neural network HPC toolkit.” Proc. Workshop on Machine Learning in High-Performance Computing Environments, ACM, New York, NY (2015). LLNL-CONF-677443. http://dx.doi.org/10.1145/2834892.2834897