Jovana Helms (16-FS-042)

Abstract

In this study we developed a computational methodology for finding potential points of power-grid vulnerability that, if compromised, could cause consequences to the targeted facility or equipment. We first investigated the types of vulnerabilities and consequences that we could simulate computationally, namely those that the GridDyn power-grid simulator can model. We then translated the problem into a mathematical inverse problem and posed it concretely as an optimization problem, which we solved using multi-start optimization techniques. Although we use optimization techniques, we do not need to find a global optimum: we are only looking for sets of solutions that are “good enough.”

We developed the Squirrel software package to accomplish these tasks. Squirrel provides abstract interfaces that allow one to plug in different types of vulnerabilities, consequences, and even simulations of interest. It also allows one to experiment with a wide range of sampling and optimization methods to create different multi-start optimization solvers. The abstract nature of Squirrel's interface is powerful in that it allows one to plug in different types of simulations, so it can be used even outside the domain of power grids.

Background and Research Objectives

Potential adversaries ranging from sophisticated nation-states to hobbyist hackers have demonstrated the intent―and in some cases, the capability―to cause damage to the country's energy sector through cyber attacks on control systems. In an effort to reduce the risk to critical infrastructure from cyber attacks, the National Institute of Standards and Technology was charged (under Executive Order 13636) with creating a voluntary framework to reduce cyber risks to critical infrastructure. This framework consists of standards and best practices to help critical infrastructure owners mitigate their cyber risks. While useful, a best-practices or compliance-based risk-mitigation approach has limitations. Without being able to quantify risk, it is not possible to defensibly prioritize security investments or evaluate trade-offs between security and functionality. The purpose of this feasibility study is to explore the development of a quantitative framework for detecting vulnerabilities in the power grid.

Traditional quantitative risk-assessment methodologies (such as the probabilistic risk-assessment approach used by NASA and the Nuclear Regulatory Commission) define risk as the product of threat, vulnerability, and consequence. These approaches assume that a system is well-characterized and events can be treated probabilistically. The introduction of an intelligent adversary who does not necessarily behave in a predictable, probabilistic manner renders these assumptions invalid, severely limiting the utility of traditional risk-assessment methodologies in this area.

A difficulty-based risk assessment methodology was recently developed to understand risk to nuclear weapons security from physical attacks. Rather than working forward through an event tree, this approach starts by defining consequence tiers of interest to a stakeholder, creating a limited number of representative scenarios that could result in consequences in those tiers, and then assessing the difficulty of executing each scenario. The approach is adversary agnostic―it makes no assumptions about adversary capabilities or intent―and does not require the characterization of every potential scenario perturbation. We believe that the fundamental concepts of this approach can be leveraged to develop a quantitative risk-assessment methodology that would be applicable to cyber risks to energy infrastructure.

The current state of the art in power-grid contingency analysis is N-1, N-1-1, and N-2 contingency analysis. N-1 contingency analysis systematically simulates the failure of a single power-grid component. N-1-1 and N-2 contingency analyses both systematically simulate the failure of two power-grid components, the former simulating consecutive failures and the latter simulating simultaneous failures. These contingency analyses are essentially brute-force methods that work through every combination of failures. Because the number of combinations grows combinatorially, they are very limited in the number of failures for which they can test.
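To make the combinatorial growth concrete, the following sketch counts the cases a brute-force study would have to simulate. The 1,000-component grid is a hypothetical size chosen for illustration, and treating consecutive failures as ordered sequences (so that an N-1-1 study counts permutations) is an assumption of this sketch.

```python
from math import comb, perm


def contingency_counts(n_components: int, k: int) -> dict:
    """Number of cases a brute-force study of k failures must simulate."""
    return {
        # Unordered sets of k components failing at once (N-2 style).
        "simultaneous": comb(n_components, k),
        # Ordered sequences of k failures (N-1-1 style), assuming order matters.
        "consecutive": perm(n_components, k),
    }


if __name__ == "__main__":
    n = 1000  # hypothetical number of grid components
    for k in (1, 2, 3, 4):
        print(k, contingency_counts(n, k))
```

Even under these simple assumptions, each additional simultaneous failure multiplies the number of simulations by roughly the number of components divided by the failure count, which is why exhaustive enumeration stops being practical after two or three failures.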

This feasibility study had two primary objectives. First, we sought to define several tiers of consequences, such that the consequences within each tier were of roughly the same severity. Then, with consequence tiers defined, we sought to develop a methodology for finding critical failures in a power-grid model that would lead to these consequences occurring. For this latter task, we used the GridDyn power-grid transmission simulator developed at Lawrence Livermore National Laboratory.

The task of defining consequence tiers was found to be very customer-specific. We were able to describe five tiers of consequences by leveraging relationships with electric utilities through the California Energy Systems for the 21st Century (CES-21) project. We found that some of the consequences of interest to the electric utilities are not representable in GridDyn; in particular, GridDyn does not simulate the behavior or responses of humans, such as operator decision-making or work-crew travel times. However, most of the consequences were found to be compatible with the data generated from a GridDyn simulation, such as measuring load loss or voltage changes.

Once we had an understanding of the consequences, we focused on the critical-failure-analysis methodology. We were able to pose the problem in the framework of inverse problems, a popular field in applied mathematics. This enabled us to develop a software framework centered around multi-start optimization methodologies that couple random sampling techniques with numerical optimization methods to quickly and efficiently find critical failures. This methodology proved quite successful for the test problems within the scope of this feasibility study, and we intend to expand the methodology to larger, more realistic power-grid models in the future.

Scientific Approach and Accomplishments

The purpose of this feasibility study is to develop a proof-of-concept computational methodology for finding potential points of infrastructure vulnerability (termed “critical failures”) that could cause a known set of consequences of interest. We developed both the mathematical framework and the software framework necessary to investigate this question, demonstrating our approach’s utility for proof-of-concept investigations and laying the groundwork for more sophisticated mathematical and software developments in the future.

The first challenge was to identify consequences of interest to the power-grid community to guide our investigation. To accomplish this, we worked with utility partners with whom we have relationships through the CES-21 project to understand the consequences that they view as most important and the classification criteria they use. Examples from one public utility include the following:

1. electrical system islanding (a condition in which a distributed generator continues to power a location even though electrical-grid power is no longer available)

2. uncontrolled loss of at least 300 MW for more than 15 minutes

3. emergency load-shedding (i.e., switching off parts of the network to prevent system instability) of at least 100 MW

4. system-wide voltage reduction of at least 3 percent

5. loss of service to at least 50,000 customers for at least one hour

Once these consequences of interest were identified, the next challenge was translating them into a mathematical framework that allowed us to investigate the problem computationally. This involved translating the consequences into quantities that could be measured by mathematical power-grid simulation software; in our case, we used the GridDyn simulator developed at the laboratory. We were unable to measure some of the above-mentioned consequences because they involve behavior not modeled by GridDyn (or any other power-grid simulator). In particular, consequences that involve human responses cannot be simulated. Consequence 3, for example, cannot be modeled in this framework because emergency load-shedding depends on human grid-operator decisions. Consequence 5 can be only partially simulated: given demographic information, we can compute when 50,000 customers would lose power, but the time for which they would be without power depends on human response time. The other consequences, however, involve quantities that can be measured from the output of a power-grid simulator, and so can be observed within a simulation.
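As an illustration of what "measurable from simulator output" can mean, the sketch below checks the voltage-reduction and sustained load-loss consequences against plain Python lists. The function names, the list-based data layout, and the reading of "more than 15 minutes" as one contiguous interval are assumptions made for this example; none of it is GridDyn's actual output format or API.

```python
def voltage_reduction_pct(baseline_voltages, event_voltages):
    """Percent drop in system-wide average bus voltage relative to baseline."""
    base_avg = sum(baseline_voltages) / len(baseline_voltages)
    event_avg = sum(event_voltages) / len(event_voltages)
    return 100.0 * (base_avg - event_avg) / base_avg


def sustained_load_loss(times_min, lost_mw, threshold_mw=300.0, min_duration_min=15.0):
    """True if lost load stays at or above the threshold for longer than
    min_duration_min across contiguous samples (assumed interpretation)."""
    start = None
    for t, lost in zip(times_min, lost_mw):
        if lost >= threshold_mw:
            if start is None:
                start = t  # beginning of a new above-threshold interval
            if t - start > min_duration_min:
                return True
        else:
            start = None  # interval broken; start over
    return False


if __name__ == "__main__":
    drop = voltage_reduction_pct([1.0, 1.0, 1.0, 1.0], [0.96, 0.97, 0.95, 0.98])
    print("voltage reduction (%):", drop, "meets 3% criterion:", drop >= 3.0)
    print(sustained_load_loss([0, 5, 10, 15, 20], [350, 320, 310, 305, 300]))
```

Consequences that depend on operator decisions or crew response times have no counterpart here, since there is no simulated quantity to test.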

Given that we knew how to check for a consequence within a simulation, we then had to develop a means of determining how to cause a consequence in a mathematically manipulatable manner. Again, this process became a method of identifying mathematical quantities that we could measure and manipulate in a power-grid simulation; the difference is that, in this case, we were interested in quantities associated with the input to a simulation, while the consequences we were interested in were quantities associated with the output of a simulation. Typical manipulatable quantities include loads on buses, transmission-line impedance, and connectivity patterns. Quantities such as bus voltages are (in most cases) output quantities rather than inputs.

Finally, any simulation requires a starting input model. Our input model had four mathematical objects:

- a baseline power-grid model

- a set of possible power-grid model manipulations (the "search space")

- a target outcome

- a power-grid simulation

Given these four objects, we could pose a question (such as “How can one cause system-wide voltage reduction of at least 3 percent?” as in Consequence 4, above) as a mathematical search for the critical failures, those manipulations that cause the outcome of interest. We restated the problem as follows.

Problem: Given a consequence of interest, a space of possible manipulations, and a (power-grid) simulator, find the set of critical failures such that each failure causes the consequence of interest.

This problem belongs to a well-known class of mathematical problems known as inverse problems. To solve this inverse problem, we recast it as a local optimization problem. To do this, we needed to convert the above-mentioned problem into one with an objective function that we could attempt to optimize. For that purpose, we introduced the outcome heuristic. Associated with each outcome is a function that takes as input a simulation output and generates a numerical quantity representing how close we are to the outcome of interest. In many cases, the heuristic is straightforward; for example, in Consequence 4, the heuristic is the system-wide average voltage minus the target voltage. We also optionally included a term from the manipulation representing the “cost” of a chosen manipulation.
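A minimal sketch of such an objective for the voltage-reduction example follows. The heuristic matches the description above (system-wide average voltage minus the target voltage); the function names, the weighted form of the optional cost term, and the sample numbers are assumptions introduced for illustration.

```python
def voltage_heuristic(bus_voltages, target_voltage):
    """System-wide average voltage minus the target voltage.
    Values at or below zero mean the outcome of interest has been reached."""
    average = sum(bus_voltages) / len(bus_voltages)
    return average - target_voltage


def objective(bus_voltages, target_voltage, manipulation_cost=0.0, cost_weight=0.0):
    """Outcome heuristic plus an optional, weighted manipulation-cost term
    (the weighting scheme is an assumption of this sketch)."""
    return voltage_heuristic(bus_voltages, target_voltage) + cost_weight * manipulation_cost


if __name__ == "__main__":
    baseline_avg = 1.0                 # hypothetical per-unit baseline average
    target = 0.97 * baseline_avg       # a 3 percent system-wide reduction
    print(objective([0.96, 0.95, 0.97], target))            # negative: outcome reached
    print(objective([1.0, 0.99, 1.0], target, 2.0, 0.01))   # positive: not yet reached
```

Minimizing this quantity pushes the simulated grid toward the consequence, while the cost term, when used, favors manipulations that are cheaper to carry out.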

With these functions in place, the problem becomes one of optimization. However, this problem differs from a standard optimization problem: whereas many optimization problems focus on finding the global optimum of a given objective function, we posed our optimization problems such that we had to find the subset of local optima that satisfy the consequence of interest. In terms of the optimization problem, this means we sought subsets of our search space that were “good enough.” We posed the problem this way to avoid brute-force checking of each possible manipulation, which is computationally infeasible, especially for large manipulation search spaces. Instead, we allowed the optimization procedure to guide us to manipulations that have the most severe effects on the power grid. Therefore, we posed the above problem as an optimization problem in which we sought to minimize a consequence-specific objective function subject to constraints dictated by the physics of the power-transmission grid.
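One way to write this formulation down is sketched here. All symbols are notation introduced for illustration rather than taken from the Squirrel implementation: $m$ is a manipulation in the search space $\mathcal{M}$, $S(m)$ is the simulator output for the baseline model under $m$, $h$ is the outcome heuristic, $c$ is the optional manipulation cost with weight $\lambda \ge 0$, $g(x, m) = 0$ stands for the physical constraints on the grid state $x$, and $\tau$ is the "good enough" threshold.

```latex
\begin{aligned}
\min_{m \in \mathcal{M}} \quad & f(m) = h\bigl(S(m)\bigr) + \lambda\, c(m) \\
\text{subject to} \quad & g(x, m) = 0, \\[4pt]
\mathcal{F} = {} & \bigl\{\, m^{*} \text{ a local minimizer of } f \;:\; h\bigl(S(m^{*})\bigr) \le \tau \,\bigr\}
\end{aligned}
```

The set $\mathcal{F}$ is the collection of critical failures. For the voltage-reduction example, $h$ is the average voltage minus the target voltage, so $\tau = 0$ is a natural choice.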

We approached this optimization problem using multi-start optimization, a class of optimization methods in which one samples many optimization starting points and independently runs an optimization procedure from each of them. Because the runs are independent, multi-start optimization is highly parallelizable, and it remains practical to use on difficult optimization spaces.
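The idea can be sketched on a toy one-dimensional objective. Everything in this example is an assumption for illustration: the objective (three local minima, two of which fall below the threshold), the uniform random sampler, and the simple derivative-free pattern search stand in for whatever simulator, sampler, and optimizer a real study would use.

```python
import random


def objective(x):
    """Toy objective with local minima at x = -3, 0, 3 (values -1.0, 0.5, -0.5)."""
    return min((x + 3) ** 2 - 1.0, x ** 2 + 0.5, (x - 3) ** 2 - 0.5)


def local_search(f, x0, lo, hi, step=0.5, tol=1e-6):
    """Pattern search: move to the better neighbor, halve the step when stuck."""
    x, fx = x0, f(x0)
    while step > tol:
        candidates = [min(max(x + d, lo), hi) for d in (-step, step)]
        best = min(candidates, key=f)
        if f(best) < fx:
            x, fx = best, f(best)
        else:
            step *= 0.5
    return x, fx


def multi_start(f, n_starts, lo, hi, threshold, seed=0):
    """Run independent local searches; keep every optimum that is good enough."""
    rng = random.Random(seed)
    accepted = []
    for _ in range(n_starts):
        x, fx = local_search(f, rng.uniform(lo, hi), lo, hi)
        if fx <= threshold:
            accepted.append((x, fx))
    return accepted


solutions = multi_start(objective, n_starts=50, lo=-5.0, hi=5.0, threshold=0.0)
basins = sorted({round(x) for x, _ in solutions})
print(basins)
```

Each pass through the loop in `multi_start` is independent of the others, so the starts could be distributed across processes without changing the result. The local minimum at zero is found but discarded because its objective value is above the threshold.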

In this project, we developed the proof-of-concept mathematical and software framework that demonstrates we can solve this problem in a practical setting. We next discuss the software that we developed to solve this problem and how its design gives it the flexibility to serve both as a multi-start optimization research platform and as a tool for other application domains.

The Squirrel Software

To solve the optimization problem discussed above, we developed the Squirrel software package. Squirrel combines sampling and optimization methods in a multi-start optimization framework. This prototype software package is not a solver by itself, but is a framework for combining functionality in a structured manner to create a variety of solvers. While this may seem at first less functional than simply building a solver, it provides us with a great deal of power and flexibility.

Squirrel provides abstract interfaces for forward simulators, manipulation classes, outcome classes, sampling methods, and optimization methods. By providing interfaces rather than concrete implementations, we can pick and choose what we want for each component. This makes it easy to create a wide variety of functionality within Squirrel. By swapping out sampling and optimization methods, we can create different solvers; by swapping out outcomes and manipulations, we can solve different problems in a domain area; and by swapping out the forward simulator, we can use Squirrel in entirely different application domains.

Below, we discuss these components of Squirrel and how they work together, as well as the concrete implementations of those abstract interfaces that we have written so far. Squirrel requires the user to provide three domain-specific pieces of code:

- Simulation—a “forward solver.” Squirrel will run a forward simulation. For example, this could be a power-grid simulation, a nuclear reactor simulation, or something lower-level such as a linear solver.

- Manipulation—a means of altering the starting condition of a simulation. For example, in a power-grid simulation, this might mean altering the loads at the buses in the network. In a linear solver, this might mean manipulating the entries in the matrix. The manipulation defines the mathematical search space, whether it is discrete, continuous, or a mixture of both.

- Outcome—a simulation outcome of interest, as well as a means of asking “How close is this simulation’s outcome to the outcome of interest?” For example, in a power-grid simulation, this might be a certain threshold of load loss. The means of asking how close an outcome is to a goal is a heuristic, and is always included as a term in the optimization objective function.
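The three user-provided pieces above can be pictured as abstract interfaces with small concrete implementations plugged in. The sketch below is a Python analog written for illustration only: the class and method names (`Simulation.run`, `Manipulation.apply`, `Outcome.heuristic`, and the toy classes) are hypothetical and do not reflect Squirrel's actual C++ interfaces.

```python
from abc import ABC, abstractmethod


class Simulation(ABC):
    """Forward solver: maps an input model to simulation output."""
    @abstractmethod
    def run(self, model): ...


class Manipulation(ABC):
    """Alters the starting condition of a simulation."""
    @abstractmethod
    def apply(self, base_model): ...


class Outcome(ABC):
    """Outcome of interest plus a heuristic measuring distance to it."""
    @abstractmethod
    def heuristic(self, output) -> float: ...

    def reached(self, output) -> bool:
        return self.heuristic(output) <= 0.0


class ToyGrid(Simulation):
    """Toy stand-in for a grid simulator: load above capacity is lost."""
    def __init__(self, capacity_mw):
        self.capacity_mw = capacity_mw

    def run(self, model):
        return {"lost_mw": max(0.0, sum(model.values()) - self.capacity_mw)}


class ScaleLoads(Manipulation):
    """Scales every bus load by a common factor."""
    def __init__(self, factor):
        self.factor = factor

    def apply(self, base_model):
        return {bus: load * self.factor for bus, load in base_model.items()}


class LoadLoss(Outcome):
    """Outcome reached once lost load meets or exceeds the threshold."""
    def __init__(self, threshold_mw):
        self.threshold_mw = threshold_mw

    def heuristic(self, output):
        return self.threshold_mw - output["lost_mw"]


def evaluate(sim, base_model, manipulation, outcome):
    """Apply the manipulation, run the simulator, score the outcome."""
    output = sim.run(manipulation.apply(base_model))
    return outcome.heuristic(output), outcome.reached(output)


if __name__ == "__main__":
    base = {"bus_a": 100.0, "bus_b": 200.0}
    print(evaluate(ToyGrid(350.0), base, ScaleLoads(1.5), LoadLoss(50.0)))
```

Because `evaluate` depends only on the abstract interfaces, replacing `ToyGrid` with a different forward solver would leave the rest of the code unchanged.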

In addition to these three user-provided components, the user must select sampling and optimization methods for Squirrel to use for multi-start optimization:

- Sampler—a sampling method for choosing initial manipulations for the optimizer. This method is typically random, but does not have to be. Some examples of simpler sampling methods include uniform random sampling and Latin hypercube sampling.

- Optimizer—an optimization method for finding a local optimum in the search space. If the local optimum is sufficiently small, then we say we have found an "outcome of interest." Some examples of optimization methods include line search and interior-point methods.

Squirrel provides a number of sampling and optimization methods, as well as hooks to external libraries that perform these operations, though the user can also supply their own.
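For reference, the two sampling methods named above can be sketched in a few lines on the unit hypercube. This is a generic illustration using only the Python standard library, with function names chosen for this example; it is not Squirrel's implementation.

```python
import random


def uniform_sample(n, dim, rng):
    """n independent points drawn uniformly from [0, 1)^dim."""
    return [[rng.random() for _ in range(dim)] for _ in range(n)]


def latin_hypercube(n, dim, rng):
    """n points in the unit hypercube such that, in every dimension,
    each of the n equal-width strata contains exactly one point."""
    columns = []
    for _ in range(dim):
        strata = list(range(n))
        rng.shuffle(strata)  # random pairing of strata across dimensions
        columns.append([(s + rng.random()) / n for s in strata])
    return [list(point) for point in zip(*columns)]


if __name__ == "__main__":
    rng = random.Random(1)
    for point in latin_hypercube(4, 2, rng):
        print(point)
```

Latin hypercube sampling spreads starting points more evenly along each axis than independent uniform draws, which can help a multi-start solver reach distinct local optima with fewer starts.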

We have developed a C++ template-based design for the Manipulation types that allows us to create standard representations for continuous, discrete, and mixed search spaces. This means that the sampler and optimizer do not need to know exactly what the manipulation is, but may know only certain relevant information, such as the type of space and its dimensionality. This allows the sampler to operate on a wide variety of manipulations in a standardized way, and allows us to use continuous, discrete, and mixed-integer optimization methods using a standard interface.
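The benefit of a standard search-space representation can be illustrated with a small Python analog. Squirrel's design uses C++ templates; the classes below (`Continuous`, `Discrete`, `SearchSpace`) and the `sample_point` helper are hypothetical names invented for this sketch and show only the general idea that a sampler needs the type and dimensionality of the space, not the meaning of each manipulation.

```python
import random
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Continuous:
    low: float
    high: float


@dataclass(frozen=True)
class Discrete:
    choices: Tuple[int, ...]


Axis = Union[Continuous, Discrete]


@dataclass(frozen=True)
class SearchSpace:
    axes: Tuple[Axis, ...]

    @property
    def dimension(self) -> int:
        return len(self.axes)

    @property
    def kind(self) -> str:
        kinds = {type(axis) for axis in self.axes}
        if kinds == {Continuous}:
            return "continuous"
        if kinds == {Discrete}:
            return "discrete"
        return "mixed"


def sample_point(space, rng):
    """Draw one point using only the space description, not its meaning."""
    return [
        rng.uniform(axis.low, axis.high) if isinstance(axis, Continuous)
        else rng.choice(axis.choices)
        for axis in space.axes
    ]


if __name__ == "__main__":
    # Hypothetical manipulation: one load-scaling factor plus one line on/off switch.
    space = SearchSpace((Continuous(0.5, 1.5), Discrete((0, 1))))
    print(space.kind, space.dimension, sample_point(space, random.Random(0)))
```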

Much of our research in scaling to high-dimensional search spaces will focus on developing high-performance adaptive sampling methods that allow us to intelligently choose samples within our search space.

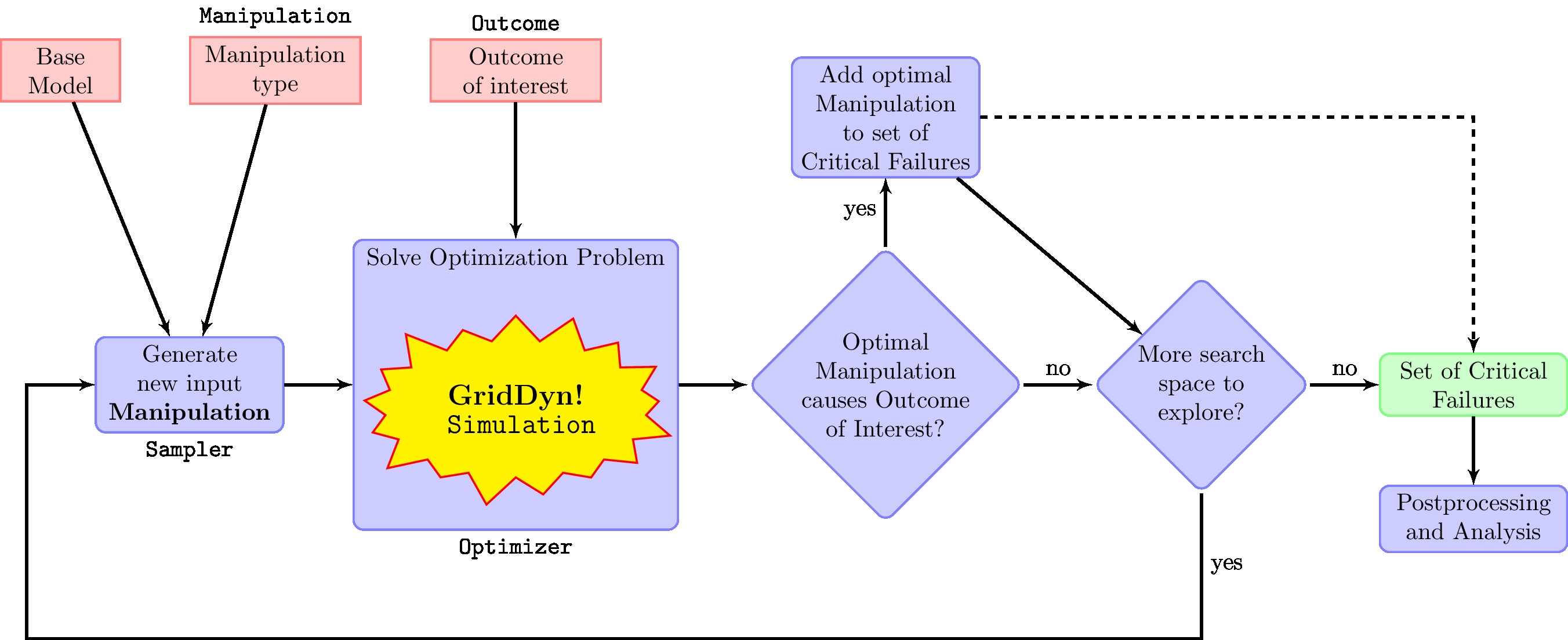

Figure 1 depicts a typical task workflow in Squirrel. Given a simulation starting point (Base Model), a means of altering the simulation’s starting conditions (Manipulation), and an outcome of interest (Outcome), Squirrel executes the following steps:

1. Sample a new Manipulation.

2. Use optimization methods to move toward a manipulation that causes the Outcome of Interest. Squirrel uses the forward simulator in this step as it runs a new simulation for each manipulation it tests.

3. The output of the optimization step (Optimizer) is the “best manipulation” found. If that manipulation successfully causes the Outcome of Interest, add that manipulation to a Set of Critical Failures.

4. Repeat Steps 1 through 3 as often as desired, possibly using past iterations to sample more intelligently.

5. Output the Set of Critical Failures, possibly performing some analysis of the set.
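The workflow above can be sketched end to end on a toy discrete problem. Everything here is hypothetical and chosen for illustration: four parallel lines with made-up capacities feed a 300 MW load, a manipulation is a set of broken lines, the outcome of interest is at least 100 MW of lost load, and the optimizer is a simple steepest-descent search over single line flips with a cost of one unit per broken line. It is not Squirrel's code and does not use GridDyn.

```python
import random

CAPACITY_MW = {"L1": 150, "L2": 120, "L3": 100, "L4": 80}  # hypothetical lines
DEMAND_MW = 300
LOSS_THRESHOLD_MW = 100  # outcome of interest: at least this much load lost


def simulate(broken):
    """Toy forward simulator: shortfall between demand and intact capacity
    (a negative value means spare capacity)."""
    intact = sum(c for name, c in CAPACITY_MW.items() if name not in broken)
    return DEMAND_MW - intact


def heuristic(shortfall):
    """Distance to the outcome of interest; zero means it has been reached."""
    return max(0, LOSS_THRESHOLD_MW - shortfall)


def objective(broken):
    """Outcome heuristic plus a cost of one unit per broken line."""
    return heuristic(simulate(broken)) + len(broken)


def optimize(start):
    """Steepest descent over single line flips; one simulation per candidate."""
    current = frozenset(start)
    while True:
        neighbors = [current ^ {name} for name in sorted(CAPACITY_MW)]
        best = min(neighbors, key=objective)
        if objective(best) >= objective(current):
            return current
        current = best


def find_critical_failures(n_starts, seed=0):
    rng = random.Random(seed)
    critical = set()
    for _ in range(n_starts):                                   # Step 4: repeat
        start = {n for n in CAPACITY_MW if rng.random() < 0.5}  # Step 1: sample
        best = optimize(start)                                  # Step 2: optimize
        if heuristic(simulate(best)) == 0:                      # Step 3: check outcome
            critical.add(best)
    return critical                                             # Step 5: output set


if __name__ == "__main__":
    for failure in sorted(find_critical_failures(500), key=sorted):
        print(sorted(failure), "lost MW:", simulate(failure))
```

In this toy problem, the cost term steers each run toward a minimal set of line breaks, so the resulting set contains only combinations from which no line can be restored without losing the outcome.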

Component Functionality

Informally, we refer to a package of domain-specific components used to interface with Squirrel as "acorns." We have written a GridDyn acorn that interfaces with Squirrel and uses GridDyn as the forward simulator. We have also written the following components:

- Manipulations—These include transmission-line impedance manipulations, line-break manipulations, and bus load-scaling manipulations.

- Outcomes—These include low-voltage conditions on a specific bus and power load lost over the whole grid.

In this project, we successfully demonstrated the use of Squirrel on power-grid simulations using the GridDyn acorn with these manipulations and outcomes.

Impact on Mission

This research supports the Laboratory’s energy and climate security strategic focus area by providing a fundamental capability to the area of energy-infrastructure security. Our work would enhance the Laboratory's ability to articulate the value and impact of other work in the area of infrastructure security, and enable strategic engagement with federal agencies and industry. We leveraged the Laboratory’s core competencies in electric grid modeling and our industry partner relationships.

Conclusion

In this project, we have developed the proof-of-concept mathematical and software framework that demonstrates we can develop a quantitative risk-assessment methodology applicable to cyber risks to energy infrastructure in a practical setting. In the future, we will enrich the types of manipulations and outcomes we can use, develop sophisticated and high-performance multi-start optimization techniques, and develop dimensionality-reduction methods to analyze the resulting set of critical failures. The use and development of adaptive methods will help us to scale up to large problem sizes. We will use this technology to develop a quantitative methodology for analyzing risk from hazards in power-grid systems.
