Tapasya Patki (17-FS-008)

Executive Summary

Efficiently managing multiple shared computing resources simultaneously is a challenge for future high-performance computing systems. With this project, we will show how different constraints affect application performance. We will also determine whether application performance can be predicted in multi-constraint computational environments and, if so, with what accuracy.

Project Description

The path toward exascale supercomputing requires adhering to system power budgets, improving communication performance, ensuring resiliency, and managing large amounts of data. As a result, the next generation of supercomputers will operate under multiple constraints. Simultaneously managing shared system resources such as cores, power, network, and input/output (I/O) on these systems will create performance-optimization challenges and introduce conflicting optimization objectives, which are expected to increase performance variability. Even for a single resource such as power, understanding application performance variation is extremely challenging, and no tools currently exist for predicting it with reasonable accuracy at scale. The community has paid little attention to determining lower and upper bounds on performance variability or to understanding how different constraints affect fundamental application characteristics such as memory usage and scalability. Most existing research is experimental, architecture-dependent, and targeted toward site-specific adaptive system software.

We are studying the impact of multiple constraints on application performance. Our goal is to determine the range of application performance variation and to assess whether application performance can be predicted accurately at scale. To do this, we will use novel tools to collect relevant, high-quality application data, from which we will derive meaningful features, representative samples, and relationships. We will then develop machine-learning techniques (e.g., partial dependence analysis) to understand application performance as well as the range of run-to-run variability.
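Partial dependence analysis, named above as one candidate technique, estimates how a fitted model's prediction changes as a single input varies while averaging over the observed values of all other inputs. The sketch below is a minimal, stdlib-only illustration under stated assumptions: `runtime_model`, its coefficients, and the `observed` rows are hypothetical stand-ins rather than a model or data from this study.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence, Tuple

Row = Dict[str, float]


def partial_dependence(model: Callable[[Row], float], data: Sequence[Row],
                       feature: str, grid: Sequence[float]) -> List[Tuple[float, float]]:
    """For each grid value v, force `feature` = v in every observed row and
    average the model's predictions over those rows."""
    curve = []
    for v in grid:
        curve.append((v, mean(model({**row, feature: v}) for row in data)))
    return curve


# Hypothetical fitted model: predicted runtime (s) as a function of the
# processor power cap (W) and the total task count. Not from the study.
def runtime_model(row: Row) -> float:
    return 120.0 * 115.0 / row["power_cap"] + 0.01 * row["tasks"]


# Hypothetical observed configurations.
observed = [{"power_cap": 80.0, "tasks": 512.0},
            {"power_cap": 65.0, "tasks": 1024.0},
            {"power_cap": 115.0, "tasks": 2048.0}]

curve = partial_dependence(runtime_model, observed, "power_cap", [65.0, 80.0, 115.0])
for cap, runtime in curve:
    print(f"power cap {cap:5.0f} W -> mean predicted runtime {runtime:7.2f} s")
```

In practice the model would be learned from collected performance data; the averaging step is what distinguishes a partial dependence curve from simply evaluating the model at one fixed configuration.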

Managing multiple shared resources simultaneously is a challenge for future high-performance computing systems. We expect to show how different constraints affect application performance and to determine whether application performance can be predicted in multi-constraint computational environments and, if so, with what accuracy and at what scale. We will study the impact of multiple constraints (such as power caps, temperature limits, and network bandwidth) on application performance. We will begin by gathering high-quality performance data in multi-constraint scenarios, which involves developing novel tools and constraint models for data collection. Then we will extract relevant features from these data and develop statistical-analysis tools and machine-learning techniques to understand application performance variation. Our feasibility study will be an important step toward the exascale goal. It will help identify the range of performance variation introduced by multiple constraints and improve our understanding of performance reproducibility.

We will consider two complementary approaches to building rich predictive models. The first approach exploits correlations between the constraint variables and the collected features by constructing an augmented feature set. The second approach partitions the range of each constraint variable into multiple intervals and builds a separate predictive model for each partition. We will also explore whether performance-prediction models can be applied to online analysis in other advanced system software, such as runtime systems and job schedulers. Finally, we will develop performance analyses that will enable us to understand and quantify run-to-run variation for the first time.
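The two modeling approaches described above can be contrasted with a small sketch. It assumes ordinary least-squares linear regression as the predictive model, a single hypothetical application feature, the power cap as the only constraint variable, and hypothetical partition boundaries (`EDGES`). The synthetic runtimes are made up for illustration. Approach 1 appends the constraint to the feature vector and fits one model. Approach 2 fits one model per constraint partition.

```python
import bisect
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def fit_least_squares(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Ordinary least squares: solve the normal equations (X^T X) w = X^T y."""
    n = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [[sum(r[i] * r[j] for r in X) for j in range(n)]
         + [sum(r[i] * t for r, t in zip(X, y))] for i in range(n)]
    # Gauss-Jordan elimination with partial pivoting.
    for c in range(n):
        p = max(range(c, n), key=lambda k: abs(A[k][c]))
        A[c], A[p] = A[p], A[c]
        for k in range(n):
            if k != c:
                factor = A[k][c] / A[c][c]
                A[k] = [a - factor * b for a, b in zip(A[k], A[c])]
    return [A[i][n] / A[i][i] for i in range(n)]


def predict(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


# Hypothetical samples: (application feature, power cap in W, runtime in s).
samples = [(f, cap, 200.0 - 0.8 * cap + 5.0 * f)
           for cap in (65.0, 80.0, 115.0) for f in (1.0, 2.0, 3.0, 4.0)]

# Approach 1: augment the feature vector with the constraint variable.
w_aug = fit_least_squares([[1.0, f, cap] for f, cap, _ in samples],
                          [t for _, _, t in samples])

# Approach 2: partition the constraint range and fit one model per partition.
EDGES = [70.0, 100.0]  # hypothetical partition boundaries (W)


def bin_of(cap: float) -> int:
    return bisect.bisect_right(EDGES, cap)


groups: Dict[int, List[Tuple[float, float]]] = defaultdict(list)
for f, cap, t in samples:
    groups[bin_of(cap)].append((f, t))
w_bins = {b: fit_least_squares([[1.0, f] for f, _ in g], [t for _, t in g])
          for b, g in groups.items()}

print(predict(w_aug, [1.0, 2.5, 80.0]))
print(predict(w_bins[bin_of(80.0)], [1.0, 2.5]))
```

Because the synthetic data are exactly linear, both approaches give the same prediction here. With real measurements, the augmented model can share statistical strength across constraint settings. The partitioned models can instead capture behavior that changes qualitatively between constraint regimes, at the cost of fewer samples per model.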

Mission Relevance

Redesigning the computer system software stack to support multiple objectives is a critical part of preparing for future high-performance computing systems. Our proposed study will be a first step toward achieving this. This study will help us understand run-to-run variability in application performance under multiple constraints on our current systems, which will enable us to manage resources on large-scale systems more efficiently in the future, leading to higher throughput. This study supports Lawrence Livermore National Laboratory’s core competency in high-performance computing, simulation, and data science. Our study is relevant to the NNSA goal of modernizing our infrastructure to ensure that the Laboratory has the core capabilities required to execute mission responsibilities.

FY17 Accomplishments and Results

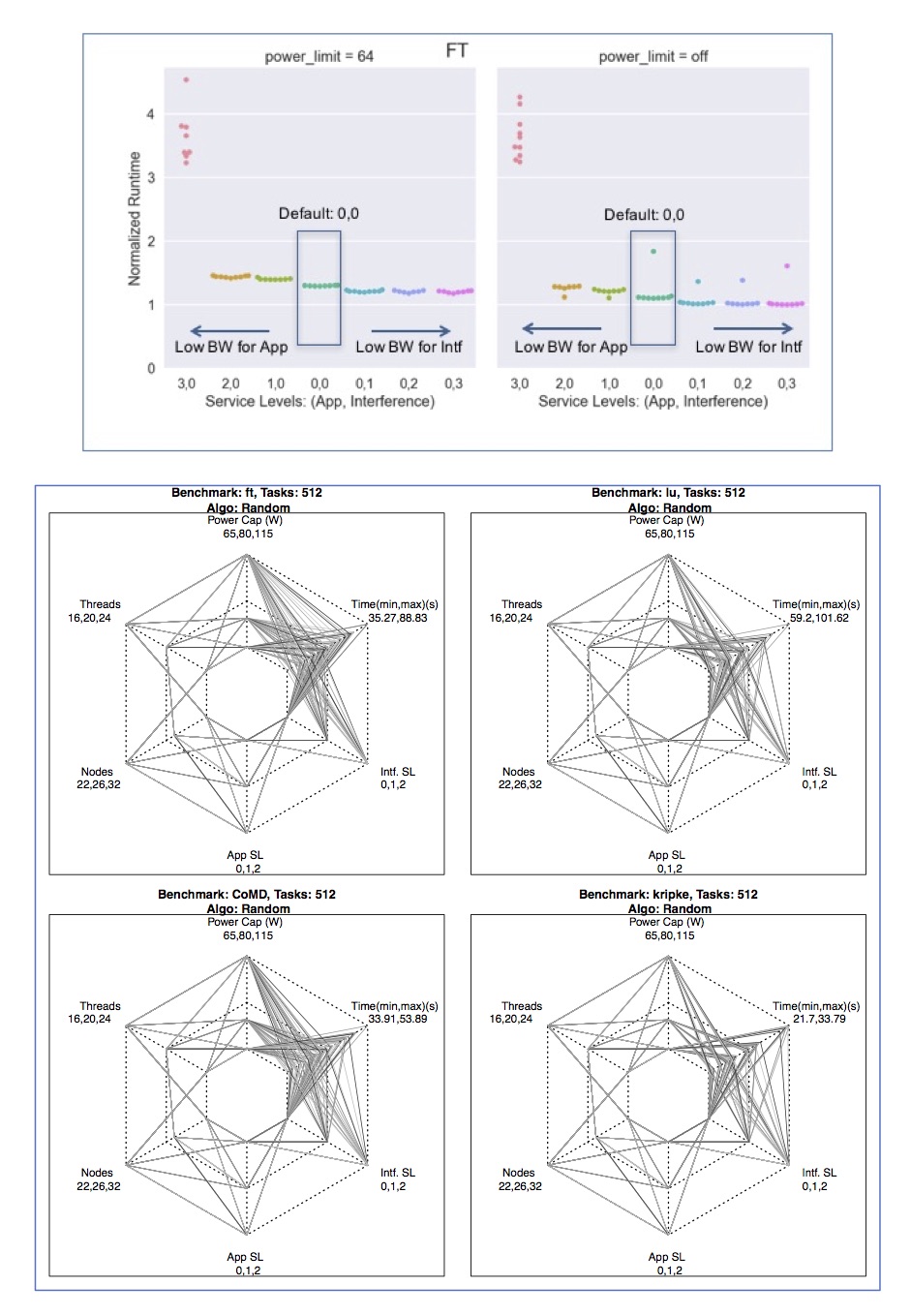

In FY17, we explored how network quality-of-service (QoS) and power-capping knobs affect application performance in multi-constraint environments. Specifically, we (1) collected data on five different applications in a controlled setting at various configurations; (2) used a communication-heavy Message Passing Interface (MPI) all-to-all benchmark to create background interference while varying four total task counts (from 512 to 4,096 tasks), three thread counts (16, 20, and 24 threads per node), three processor power caps (65, 80, and 115 watts), three placement algorithms (spread, packed, and random), and the network QoS levels assigned to the application and the interference (00, 01, 02, 10, and 20); and (3) ran queue simulations in which the performance of two applications was considered simultaneously. So far, more than 3,000 experiments have been conducted as part of this feasibility study.
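To make the size of the configuration space concrete, the sketch below enumerates the swept knobs as a full-factorial grid. This is an assumption for illustration only. The text does not say whether every combination was run or how many repetitions were made, so the count is not meant to reproduce the 3,000-experiment figure. The two intermediate task counts (1,024 and 2,048) are also assumed, and the QoS values are the five listed in the text, kept as opaque strings.

```python
from itertools import product

# Swept knobs from the FY17 description. ASSUMPTION: the intermediate task
# counts are powers of two between 512 and 4,096.
TASK_COUNTS = [512, 1024, 2048, 4096]
THREADS_PER_NODE = [16, 20, 24]
POWER_CAPS_W = [65, 80, 115]
PLACEMENTS = ["spread", "packed", "random"]
QOS_LEVELS = ["00", "01", "02", "10", "20"]  # QoS values as listed in the text


def configurations():
    """Yield every combination of the swept knobs (ASSUMPTION: full-factorial design)."""
    for tasks, threads, cap, placement, qos in product(
            TASK_COUNTS, THREADS_PER_NODE, POWER_CAPS_W, PLACEMENTS, QOS_LEVELS):
        yield {"tasks": tasks, "threads": threads, "power_cap_w": cap,
               "placement": placement, "qos": qos}


configs = list(configurations())
print(len(configs))  # 4 * 3 * 3 * 3 * 5 = 540 configurations per application
print(configs[0])
```

Even this modest set of knobs yields hundreds of configurations per application before repetitions. This is one reason why sampling representative configurations, rather than exhaustively sweeping them, matters at scale.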

Publications and Presentations

Ates, E., et al. Forthcoming. "Understanding Application Performance Variation in Power and Network-Constrained High-Performance Computing Environments." ISC High Performance 2018, June 24–28, 2018, Frankfurt, Germany. LLNL-POST-735429.