Alan Kaplan | 16-ERD-034

Overview

The presidential BRAIN Initiative aims to revolutionize our understanding of the human brain and to advance the treatment and prevention of brain disorders. Researchers involved with this initiative work with biomedical "big data" and require expertise in algorithm design, statistical methods, and high-performance computing to fully exploit these heterogeneous datasets at scale. To help meet these requirements, we partnered with neuroscientists from the University of California, San Francisco, on a project at the intersection of neuroscience, statistics, large-scale data analytics, and machine learning. We developed novel analytic approaches for large-scale multidimensional data acquired from patients monitored for clinical assessment. The dataset was compiled from patients who were surgically implanted with intracranial sensors that record the electrical activity of the brain’s cerebral cortex and who were monitored 24 hours a day for several weeks. It specifically targeted the relationship between neural signals and natural, uninstructed patient behavior and emotional states. Synchronized high-definition video with audio captured this natural, unstructured behavior. By correlating neural signals with events captured on video, we were able to relate brain function to observable behavior.

Background and Research Objectives

The goal of the presidential BRAIN Initiative (Jorgenson et al. 2015; Markoff 2013) is to provide a better understanding of human brain function to facilitate the prevention and treatment of neurologic and neuropsychiatric disorders. Like the Human Genome Project, the twelve-year initiative will provide the most comprehensive characterization of the human brain to date. Prior to and as part of this effort, numerous recent advances in neurotechnology (including those at Lawrence Livermore National Laboratory) have granted unprecedented access to the brain’s inner workings. Monitoring techniques such as electrocorticography (ECoG), used in patients awaiting neurosurgical interventions, record neural signals with unparalleled spatiotemporal resolution, and the implanted sensors can record neural activity for weeks at a time. Neural signals are also often recorded in conjunction with other data sources (e.g., video, audio, and physiological signals), enabling the mapping of neural signals to observed human activities (Dastjerdi et al. 2013).

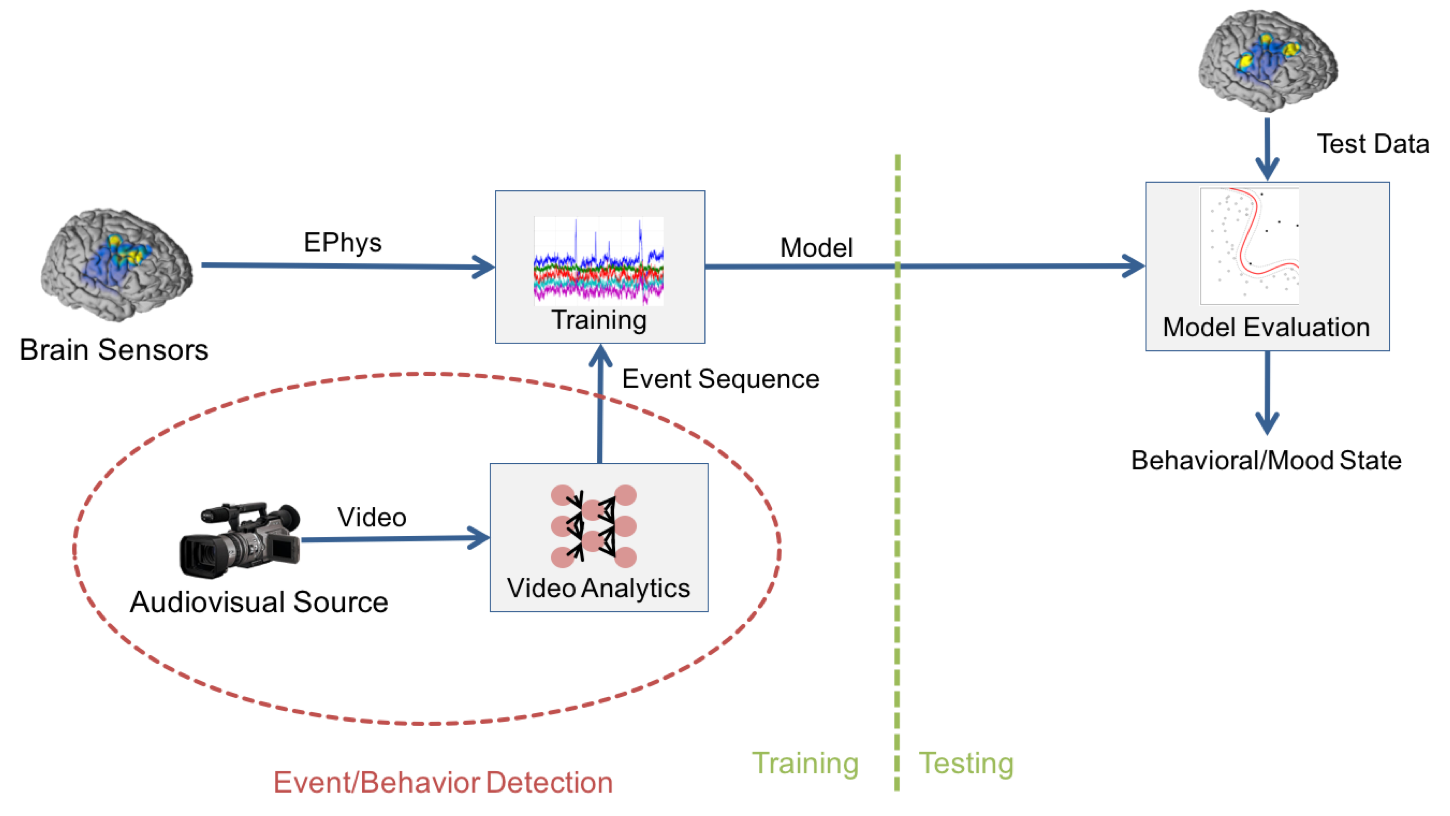

Our research objective was to develop analytic approaches for large-scale multidimensional biomedical big data. Drawing on this one-of-a-kind dataset, the neuroscience expertise of investigators at the University of California, San Francisco (UCSF), the statistical and machine-learning expertise of Laboratory investigators, and the Laboratory’s world-class computational resources, we developed novel approaches to neural-signal analysis and multimodal modeling to determine the neural patterns underlying human activities observed in video data. We pursued this goal through three research thrusts:

- For brain-signal characterization, we developed methods for processing ECoG recordings from human patients so that neural activity could be classified. Of particular scientific interest was characterizing neural activity across broad spatial, functional, and temporal scales.

- To relate human behavior to neurological signals, we time-segmented events in the video data using state-of-the-art video and audio analysis techniques. Although such techniques have proven successful at recognizing objects in images, the nature of patient behavior required incorporating additional salient cues, such as audio and motion, into our algorithms.

- Joint modeling of ECoG and video data enabled inference from one domain to the other. The fully data-driven model captured associations between an individual’s neural activity (as measured by the ECoG array) and the individual’s activity status.
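The report does not specify which ECoG features were used or how video events were aligned with neural data. As a minimal sketch only, assuming synchronized timestamps, non-overlapping one-second windows, and a hypothetical set of frequency bands, the Python/NumPy code below turns a multichannel recording into a channels × windows × bands (space × time × frequency) array of log band power and tags each window with the label of the video-annotated event covering its midpoint. The names `band_power_tensor`, `label_windows`, and `BANDS` are illustrative, not taken from the project.

```python
import numpy as np

# Hypothetical frequency bands in Hz; the report does not state which bands were used.
BANDS = {"theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "high_gamma": (70, 150)}


def band_power_tensor(ecog, fs, win_s=1.0, bands=BANDS):
    """Return log band power with shape (channels, windows, bands).

    ecog: array of shape (n_channels, n_samples) sampled at fs Hz.
    Uses non-overlapping windows of win_s seconds; trailing samples are dropped.
    Every band must contain at least one FFT bin (fs must exceed twice its upper edge).
    """
    n_ch, n_samp = ecog.shape
    win = int(round(win_s * fs))
    n_win = n_samp // win
    # Split each channel into windows: (channels, windows, samples_per_window).
    segs = ecog[:, : n_win * win].reshape(n_ch, n_win, win)
    segs = segs - segs.mean(axis=-1, keepdims=True)  # remove per-window DC offset
    spec = np.abs(np.fft.rfft(segs * np.hanning(win), axis=-1)) ** 2  # tapered power spectrum
    freqs = np.fft.rfftfreq(win, d=1.0 / fs)
    out = np.empty((n_ch, n_win, len(bands)))
    for k, (lo, hi) in enumerate(bands.values()):
        mask = (freqs >= lo) & (freqs < hi)
        # Mean power inside the band, log-transformed (small floor avoids log(0)).
        out[:, :, k] = np.log(spec[:, :, mask].mean(axis=-1) + 1e-12)
    return out


def label_windows(n_win, win_s, events):
    """Give each window the label of the video event covering its midpoint.

    events: list of (start_s, end_s, label) from video annotation.
    Windows not covered by any event get None.
    """
    labels = []
    for w in range(n_win):
        mid = (w + 0.5) * win_s
        labels.append(next((lab for s, e, lab in events if s <= mid < e), None))
    return labels
```

The midpoint rule is one simple choice for resolving windows that straddle an event boundary; overlapping windows or fractional-overlap labels would be equally reasonable.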

We developed ECoG-based models to classify the patient’s emotional state. Using the video data as ground truth (see figure), we evaluated classification performance with receiver operating characteristic (ROC) curves, which plot a binary classifier’s true-positive rate against its false-positive rate as the discrimination threshold is varied. We modeled the data using Gaussian distributions over three dimensions: space, time, and frequency.
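The report does not detail the structure of its Gaussian models. The sketch below makes the simplest assumption: within each class, every (channel, time bin, frequency band) cell is an independent Gaussian (a diagonal covariance), so the actual models may well have captured correlations this one ignores. A trial is scored by its log-likelihood ratio between two classes, and the scores are swept into an ROC curve whose area (AUC) summarizes performance. The function names and the synthetic data are hypothetical.

```python
import numpy as np


def fit_gaussian(X):
    """Fit an independent Gaussian to each (channel, time, band) cell.

    X: array of shape (n_trials, n_channels, n_times, n_bands).
    Returns per-cell mean and variance (variance floored for numerical stability).
    """
    return X.mean(axis=0), X.var(axis=0) + 1e-6


def log_likelihood(X, mean, var):
    # Sum of independent Gaussian log-densities over space, time, and frequency.
    ll = -0.5 * (np.log(2 * np.pi * var) + (X - mean) ** 2 / var)
    return ll.reshape(len(X), -1).sum(axis=1)


def roc_curve(scores, labels):
    """Return (false-positive rates, true-positive rates) as the threshold
    sweeps from high to low. labels: 1 = positive, 0 = negative.
    Assumes no tied scores (ties would need to be grouped into one step)."""
    order = np.argsort(-scores, kind="stable")
    y = labels[order]
    tpr = np.concatenate([[0.0], np.cumsum(y) / y.sum()])
    fpr = np.concatenate([[0.0], np.cumsum(1 - y) / (1 - y).sum()])
    return fpr, tpr


def auc(fpr, tpr):
    # Trapezoidal area under the ROC curve.
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))


# Synthetic example: 4 channels x 5 time bins x 3 bands (hypothetical sizes).
rng = np.random.default_rng(0)
shape = (4, 5, 3)
neg = rng.normal(0.0, 1.0, size=(200,) + shape)
pos = rng.normal(0.5, 1.0, size=(200,) + shape)  # positives shifted in every cell
m1, v1 = fit_gaussian(pos[:100])  # train on the first half of each class
m0, v0 = fit_gaussian(neg[:100])
X_test = np.concatenate([pos[100:], neg[100:]])
y_test = np.concatenate([np.ones(100, dtype=int), np.zeros(100, dtype=int)])
score = log_likelihood(X_test, m1, v1) - log_likelihood(X_test, m0, v0)
fpr, tpr = roc_curve(score, y_test)
print(f"AUC = {auc(fpr, tpr):.3f}")
```

An AUC of 1.0 indicates perfect separation and 0.5 indicates chance. In practice the ground-truth labels would come from annotated video rather than synthetic draws.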

Impact on Mission

Our research objective of developing analytic approaches for large-scale multidimensional biomedical big data supports the Laboratory’s core competencies in high-performance computing, simulation, and data science, as well as in bioscience and bioengineering.

Bioengineering and data science have been identified as two of the three areas meriting special attention in enhancing and creating core competencies to meet mission needs, and our multidisciplinary team spans several technical domains, including both. Additionally, multimodal fusion of disparate data sources remains a significant challenge for DOE and DoD researchers. Finally, video analytics developed for patient observation and classification will have applications in counterterrorism, intelligence, and defense, including biometrics, video surveillance, visual sentiment analysis, and activity recognition.

Conclusion

To improve the prevention and treatment of neurologic and neuropsychiatric disorders, we partnered with medical researchers in the neurosciences to develop an approach to analyzing large multimodal datasets. We combined neuroscience, statistics, large-scale data analytics, multimodal modeling, and machine learning to determine the neural patterns underlying human activities observed in video data. Through this project, we also established a strong collaboration with UCSF researchers. We continue to engage with our collaborators and are planning future efforts to improve clinical video analytics and ECoG modeling. We have also strengthened our connections with other UCSF faculty working in data science for neurological analysis and modeling. Our growth in expertise has led to other opportunities in the areas of traumatic brain injury and computational neuroimaging.

References

Dastjerdi, M., et al. 2013. "Numerical Processing in the Human Parietal Cortex During Experimental and Natural Conditions." Nature Communications 4: 2528. doi: 10.1038/ncomms3528.

Jorgenson, L. A., et al. 2015. "The BRAIN Initiative: Developing Technology to Catalyse Neuroscience Discovery." Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences 370 (1668). doi: 10.1098/rstb.2014.0164.

Markoff, J. 2013. "Obama Seeking to Boost Study of Human Brain." The New York Times, February 17, 2013. https://www.nytimes.com/2013/02/18/science/project-seeks-to-build-map-o….

Mikolov, T., et al. 2010. "Recurrent Neural Network Based Language Model." INTERSPEECH 2010, 11th Annual Conference of the International Speech Communication Association, Makuhari, Chiba, Japan, 26–30 September 2010.

Publications and Presentations

Kaplan, A. 2018. "Toward a Scalable, Multi-Modal Data-Driven Approach to Systems Neuroscience." Livermore, CA. (presentation) LLNL-PRES-744186.

———. 2018. "Spatial Structure in Data-Driven Modeling and Classification." Kavli Institute Faculty Seminar, San Francisco, CA. (presentation) LLNL-PRES-692179.

Kaplan, A., et al. 2016. "Data Driven Neurological Pattern Discovery." BAASiC Technical Meeting, Livermore, CA. (presentation) LLNL-PRES-695740.

———. 2016. "Dynamic Models for Electroencephalography." Center for Advanced Signal and Image Sciences Workshop, Livermore, CA. (presentation) LLNL-PRES-692179.

Kim, H., et al. 2017. "Fine-Grained Human Activity Recognition Using Deep Neural Networks." Center for Advanced Signal and Image Sciences Workshop, Livermore, CA. (poster) LLNL-POST-731136.

Tran, E., et al. 2017. "Deciphering Emotions Using Convolutional Neural Networks on Video Data." Center for Advanced Signal and Image Sciences Workshop, Livermore, CA. (poster) LLNL-POST-731426.

Tran, E., et al. 2018. "Facial Expression Recognition Using a Large Out-of-Context Dataset." In 2018 IEEE Winter Applications of Computer Vision Workshops (WACVW), 52–59. IEEE. (presentation) LLNL-PRES-747624.