Ignacio Laguna Peralta (15-ERD-039)

Executive Summary

We are investigating multiple resilient programming abstractions for large-scale high-performance computing applications. These abstractions will encapsulate several failure recovery models, reducing the code and reasoning needed to implement these techniques at large scale, and will have applications in exascale simulations, such as those to be used for stockpile science.

Project Description

The DOE has identified resilience, specifically the ability of the system and applications to work through frequent faults and failures, as one of the major challenges to achieving exascale computing. An exascale machine (one capable of a quintillion floating-point operations per second) will comprise many more hardware and software components than today’s petascale machines, which will increase the overall probability of failures. Most NNSA defense-program simulations use the message-passing interface programming model. However, the message-passing interface standard does not provide resilience mechanisms: it specifies that if a failure occurs, the state is undefined and applications must abort. To address the exascale resilience problem, we are developing multiple resilient programming abstractions for large-scale high-performance computing applications, with an emphasis on compute node and process failures (among the most notable failure types) in the message-passing interface programming model. We will investigate the performance of the abstractions in several applications and study their costs in terms of programming capability.

We expect to deliver resilient programming abstractions that will enable efficient fault tolerance in stockpile stewardship simulations at exascale. The abstractions will comprise a set of programming interfaces for the message-passing interface programming model, targeting node and process failures. The abstractions will encapsulate several failure recovery models to reduce the code and reasoning needed to implement these models at large scale. As a result of this research, Laboratory code teams will be more productive in their large-scale simulations, concentrating on the science behind the simulation rather than on the code needed to handle frequent failures, especially in exascale simulations.

Mission Relevance

This project directly supports one of the core competencies of Lawrence Livermore, specifically high-performance computing, simulation, and data science. Simulations (to extend the lifetime of nuclear weapons in the stockpile, for example) will require the resilience abstractions that we will provide in this project to make effective use of exascale computing in support of DOE’s goal in nuclear security, as well as NNSA’s goal to understand the condition of the nuclear stockpile.

FY17 Accomplishments and Results

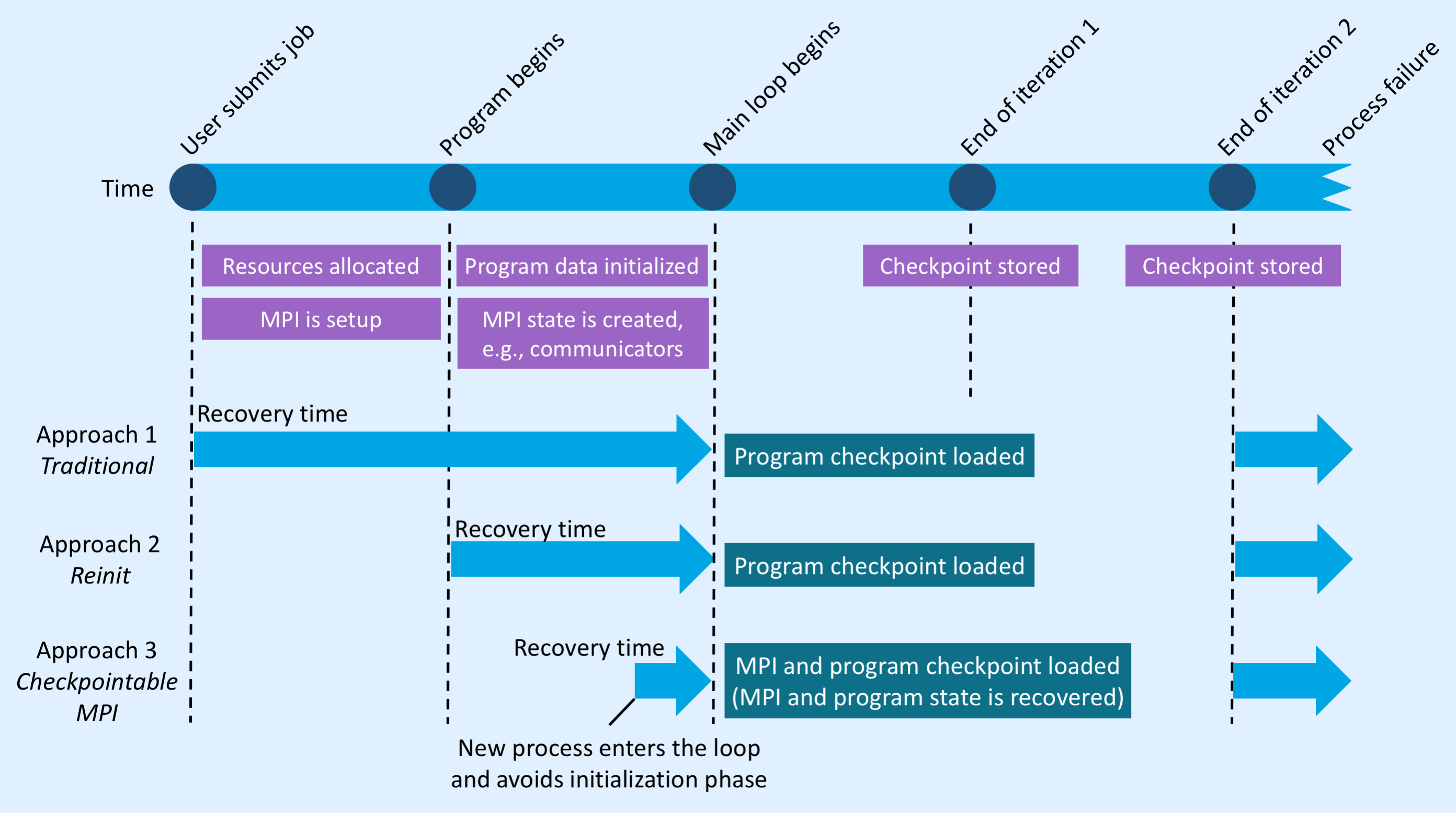

In FY17 we (1) studied multiple programming abstractions for fault tolerance in applications using the message-passing interface, including checking of returned error codes, try–catch models, resilient loops, and global restart models; (2) evaluated these models in terms of programming complexity and the interrelationships of applications and libraries; (3) determined, based on the results, that the global restart models are the most suitable for Livermore high-performance computing applications, and built a prototype (Reinit) in a widely used resource manager (Slurm) and message-passing interface library (MVAPICH); and (4) measured the performance and complexity of this model and determined that it meets our expectations.
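
As an illustration only, the general idea behind a global restart model can be sketched in a few lines of Python. This is not the Reinit interface, which this summary does not describe, and it does not use the message-passing interface. All names here (`ProcessFailure`, `run_with_global_restart`, `make_simulation`) are hypothetical, failures are simulated by raising an exception, and an in-memory dictionary stands in for checkpoint storage. The sketch assumes that, on a failure, the application is re-entered from the top of a restartable main function and resumes from its last checkpoint, so the recovery logic lives in one place rather than at every communication call.

```python
class ProcessFailure(Exception):
    """Hypothetical stand-in for a detected node or process failure."""


def run_with_global_restart(resilient_main, max_restarts=3):
    """Re-enter resilient_main from the top whenever a failure is raised.

    The checkpoint dict survives restarts (an assumption standing in for
    checkpoint storage); all other state is rebuilt by resilient_main.
    Returns the result together with the number of restarts performed.
    """
    checkpoint = {}
    restarts = 0
    while True:
        try:
            return resilient_main(checkpoint, restarts), restarts
        except ProcessFailure:
            restarts += 1
            if restarts > max_restarts:
                raise  # give up: too many failures


def make_simulation(total_steps, fail_at_steps):
    """Build a toy time-stepping loop that fails once at each given step."""
    pending_failures = set(fail_at_steps)

    def simulation(checkpoint, restarts):
        # Restore from the last checkpoint, or start from scratch.
        step = checkpoint.get("step", 0)
        value = checkpoint.get("value", 0)
        while step < total_steps:
            if step in pending_failures:
                pending_failures.discard(step)  # each failure occurs once
                raise ProcessFailure(f"simulated failure at step {step}")
            value += step  # the "science" of this toy simulation
            step += 1
            if step % 2 == 0:  # checkpoint every two steps
                checkpoint["step"] = step
                checkpoint["value"] = value
        return value

    return simulation


if __name__ == "__main__":
    sim = make_simulation(total_steps=10, fail_at_steps=[3, 7])
    result, restarts = run_with_global_restart(sim)
    print(result, restarts)  # prints: 45 2
```

In this sketch the simulation loop contains no recovery code beyond restoring and saving its checkpoint, which is the property that makes a global restart approach attractive when applications and libraries are tightly interrelated. By contrast, a model based on checking returned error codes would require a check after every communication call.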

Publications and Presentations

Sultana, N., et al., 2017. "Designing a Reinitializable and Fault Tolerant MPI Library." LLNL-POST-734163.