Peer-Timo Bremer (13-ERD-002)

Abstract

Industrial volumetric inspection with computed tomographic imaging is used in applications ranging from scans of weapons to airport security to images of National Ignition Facility target capsules. This imaging technique acquires individual x-ray projections that are assembled computationally into cross-sectional images. This nondestructive evaluation technique is crucial in a number of mission-critical applications and is also of significant interest in medical and engineering applications. We have developed a new, more integrated approach that has led to several new techniques, new tools that are actively used throughout the NNSA complex, and intellectual property that is in the process of being commercialized. In particular, we have developed "segmentation ensembles," a fundamentally new approach to segmenting and classifying digital images in a wide variety of applications. This work has led to multiple research publications, several new proposals, new collaborations, and an ongoing discussion with a venture capital company interested in commercializing the technology.

Background and Research Objectives

Industrial volumetric inspection, using computed tomographic (CT) imaging, is a core technology in many applications crucial to national security. A type of nondestructive evaluation, CT imaging is used in applications ranging from weapons scans to images of Livermore's National Ignition Facility target capsule, and from material characterizations to airport security. While each area may use different modalities (x-ray, microwave, etc.) and offers unique challenges, the overarching problem is to find objects, materials, or other features in potentially noisy and cluttered images corrupted by artifacts and with limited resolution.

The standard processing pipeline is divided into four stages:

- Acquisition, which produces an initial set of images

- Reconstruction, which combines the images into a three-dimensional volume

- Segmentation, which partitions the volume into individual objects or features

- Classification, which, based on the properties of the segmented features, decides, for example, whether a bag may contain a bomb or a manufactured part contains a flaw

While acquisition is primarily technology-dependent and comparatively well understood, stages two through four are software-dependent and the focus of intense theoretical and algorithmic research in a variety of application areas. However, existing research efforts have largely been isolated within each of these three subfields, with few significant collaborations or integrated solutions. The goal of this project has been to take advantage of Livermore’s unique access to expertise across all of these areas to develop new, more integrated approaches.

Initially, the project was aimed at nondestructive evaluation in general, and some team members are in fact actively working in a number of other application areas, such as chip verification and manufacturing. Because some of these areas are classified and for others relevant data sets proved difficult to obtain, the project shifted its focus slightly to airport security, and more specifically to automatic threat detection in checked luggage. Through an independent collaboration between some of our team members and the ALERT (Awareness and Localization of Explosives-Related Threats) Center of Excellence at Northeastern University, we acquired a large and challenging data set ideal for developing and testing our technology. Nevertheless, as discussed below, our results are widely applicable and have been demonstrated, for example, on natural images and a medical data set.

Scientific Approach and Accomplishments

We have made significant contributions in all areas related to automatic threat detection. In particular, members of our team have developed a new metal-artifact reduction technique that substantially improves CT image reconstruction, since metallic hardware otherwise significantly degrades image quality. Furthermore, we have developed a new, patented solution that integrates the segmentation and classification stages of the threat-detection pipeline and has the potential for significant impact at LLNL and beyond.

Image Reconstruction

As part of this project, our team has developed and implemented a number of advanced image-reconstruction techniques, which have led to two important outcomes. First, we developed a novel beam-hardening correction approach that was presented at the 3rd International Conference on Image Formation in X-Ray Computed Tomography (2014) in Salt Lake City.¹ X-ray CT measures the attenuation of an x-ray beam as it passes through an object. For a monochromatic beam, the Beer–Lambert law establishes a linear relationship (in log space) between the measured attenuation of the beam and the attenuation coefficient of the object. Unfortunately, the bremsstrahlung-based x-ray sources used in CT have a polychromatic energy spectrum, and the rate at which x-rays are absorbed and scattered depends on both the x-ray energy and the material composition. Lower-energy x-rays are absorbed at a higher rate, so the beam "hardens" (its mean energy increases) as it travels through the object. Because a polychromatic spectrum violates the assumed linear relationship between the measurements and the object's attenuation map, beam-hardening artifacts appear in the reconstructed CT images. These artifacts compromise the quantitative accuracy of CT images and degrade their qualitative appearance by introducing streaks, shading, and cupping. We introduced a new, computationally efficient, and accurate model-based Bayesian hierarchical clustering algorithm that improves not only the qualitative appearance of the image but also its quantitative accuracy.
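The beam-hardening effect can be reproduced numerically. The sketch below is illustrative only: the two-bin spectrum and attenuation coefficients are hypothetical values rather than measured data, and the correction it applies is a classic single-material polynomial linearization, a much simpler technique than the model-based Bayesian approach described above and not a reproduction of it. It shows that the monochromatic projection -ln(I/I0) grows linearly with path length L, whereas the polychromatic one grows sub-linearly, so the apparent attenuation per centimeter falls as the beam hardens.

```python
import numpy as np

# Hypothetical two-bin spectrum for one material (illustrative values only).
weights = np.array([0.5, 0.5])     # fraction of incident photons per energy bin
mu_per_cm = np.array([0.8, 0.3])   # attenuation per bin; the low-energy bin attenuates more
mu_eff = weights @ mu_per_cm       # effective coefficient of the unhardened beam


def mono_projection(length_cm, mu=mu_eff):
    """Monochromatic Beer-Lambert law: -ln(I/I0) = mu * L, linear in path length."""
    return mu * length_cm


def poly_projection(length_cm):
    """Polychromatic beam: I/I0 = sum_k w_k exp(-mu_k L), so -ln(I/I0) is sub-linear in L."""
    transmitted = weights @ np.exp(-np.outer(mu_per_cm, length_cm))
    return -np.log(transmitted)


lengths = np.linspace(0.1, 10.0, 100)
mono = mono_projection(lengths)
poly = poly_projection(lengths)

# Apparent attenuation per cm falls with thickness: the beam hardens as low energies are removed.
apparent_mu = poly / lengths

# Classic single-material linearization (not the Bayesian method in the text): fit a
# polynomial that maps each polychromatic projection back to its monochromatic equivalent.
coeffs = np.polyfit(poly, mono, deg=3)
corrected = np.polyval(coeffs, poly)

print(f"max error before correction: {np.max(np.abs(poly - mono)):.3f}")
print(f"max error after correction:  {np.max(np.abs(corrected - mono)):.3f}")
```

A single-material calibration like this can only linearize projections through that one material; scenes that mix very different materials, such as cluttered luggage, are not handled by it, which is one reason model-based corrections are of interest.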

In addition to the new algorithms, this project was instrumental in starting a line of software development that ultimately led to the Livermore Tomography Tools framework, which has replaced a previous software package and now supports a number of mission-critical programmatic applications at Livermore.

Ensemble Segmentations

Our second line of research has focused on developing better segmentation and classification techniques. In particular, the focus has been less on developing yet another set of isolated techniques than on integrating existing approaches to significantly improve the state of the art. The fundamental challenge in areas such as airport security is that images are typically corrupted by noise and artifacts, which makes any existing segmentation technique prone to failure. Similarly, existing classification techniques are highly specific and carefully tuned, which makes them brittle in the presence of segmentation errors. Fundamentally, the issue is that segmentation operates at a low semantic level (i.e., pixel values), whereas classification typically operates at a very high semantic level (i.e., objects or even compositions of objects). Connecting the two stages directly, as virtually all existing approaches do, has proven difficult. Instead, we have developed segmentation ensembles, which can combine an arbitrary segmentation technique with an arbitrary classification approach to couple both stages in a general and flexible manner. Rather than computing a single best-guess segmentation, our approach randomizes the segmentation process to create a large ensemble of potentially correct objects. This ensemble is robust against noise and artifacts in the sense that it typically contains the “correct” solution, albeit at the cost of a large number of incorrect guesses. We then evaluate every candidate with the high-level classifier, using its high-level semantics to inform the low-level segmentation process. This has led to substantially better results than traditional techniques, as well as a number of publications, a successful entry in a Transportation Security Administration segmentation challenge, and a new patent on ensemble segmentations.
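The claim that a randomized ensemble typically contains the correct object can be made quantitative under a simplifying assumption that is ours rather than the project's: suppose each of the N ensemble members independently recovers the correct object with probability p. Then

$$
P(\text{correct object in ensemble}) = 1 - (1 - p)^{N}, \qquad
\mathbb{E}[\text{number of incorrect members}] = N\,(1 - p).
$$

For example, with p = 0.1 and N = 50, the ensemble contains the correct object with probability 1 - 0.9^50 ≈ 0.995, while about 45 of its 50 members are expected to be wrong. Real ensemble members are correlated, so actual coverage is typically lower than this idealized figure, but the trade-off has the same shape: the ensemble supplies recall, and the high-level classifier must reject the many incorrect guesses.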

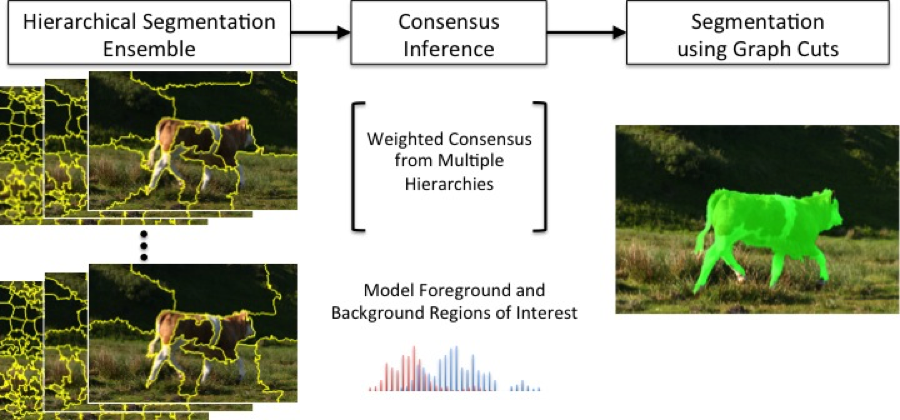

Our first publication dealt with an early prototype of the technology applied to natural images. In particular, we showed how segmentation ensembles can outperform the state of the art in unsupervised foreground/background classification.² Figure 1 shows an overview of the approach.

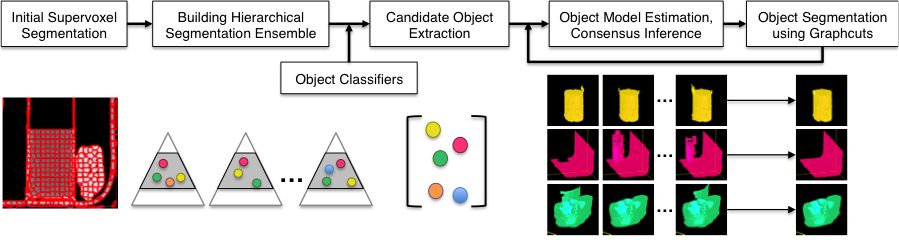

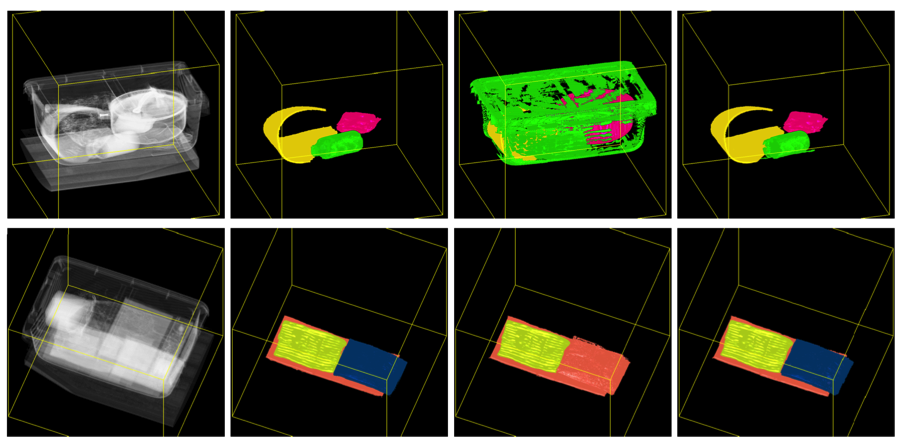

Our second publication focuses directly on the airport-security case, developing a new approach that exploits high-level training data to improve low-level segmentations.³ In particular, we show that by using trained classifiers to select high-quality segments from a random segmentation ensemble and combining only those segments into a final segmentation, we can significantly outperform existing approaches, even when the latter are carefully hand-tuned. Figure 2 gives an overview of the algorithm, and Figure 3 compares its results with those of existing approaches.
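The select-and-combine idea can be illustrated with a small, self-contained sketch. Everything in it is a hypothetical stand-in rather than the published algorithm: the ensemble is produced by thresholding a synthetic noisy image at random levels, `object_score` is a hand-written compactness rule standing in for a trained classifier, and the accepted segments are combined by a simple pixel-wise majority vote.

```python
from collections import deque

import numpy as np


def connected_components(mask):
    """Return each 4-connected foreground region of a boolean mask as its own boolean mask."""
    seen = np.zeros(mask.shape, dtype=bool)
    rows, cols = mask.shape
    components = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        component = np.zeros(mask.shape, dtype=bool)
        seen[r0, c0] = True
        queue = deque([(r0, c0)])
        while queue:  # breadth-first flood fill
            r, c = queue.popleft()
            component[r, c] = True
            for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if 0 <= nr < rows and 0 <= nc < cols and mask[nr, nc] and not seen[nr, nc]:
                    seen[nr, nc] = True
                    queue.append((nr, nc))
        components.append(component)
    return components


def object_score(segment, min_area=20):
    """Hypothetical stand-in for a trained classifier: favors large, compact segments."""
    area = segment.sum()
    if area < min_area:
        return 0.0
    rs, cs = np.nonzero(segment)
    bbox_area = (rs.max() - rs.min() + 1) * (cs.max() - cs.min() + 1)
    return area / bbox_area  # fraction of the bounding box that the segment fills


def ensemble_segment(image, n_members=15, t_range=(0.3, 0.7), accept=0.5, seed=0):
    """Randomized threshold ensemble, classifier-based selection, majority-vote consensus."""
    rng = np.random.default_rng(seed)
    votes = np.zeros(image.shape)
    n_candidates = n_selected = 0
    for threshold in rng.uniform(*t_range, size=n_members):
        for segment in connected_components(image > threshold):  # one randomized member
            n_candidates += 1
            if object_score(segment) >= accept:  # keep only segments the scorer trusts
                n_selected += 1
                votes += segment
    consensus = votes / n_members >= 0.5  # keep a pixel if most members selected it
    return consensus, n_candidates, n_selected


# Synthetic test image: a 10 x 10 bright square in Gaussian noise.
rng = np.random.default_rng(1)
truth = np.zeros((32, 32), dtype=bool)
truth[10:20, 12:22] = True
image = truth.astype(float) + rng.normal(0.0, 0.2, truth.shape)

segmentation, n_candidates, n_selected = ensemble_segment(image)
iou = (segmentation & truth).sum() / (segmentation | truth).sum()
print(f"{n_candidates} candidate segments, {n_selected} selected, IoU = {iou:.2f}")
```

Majority voting is used here only because it is the simplest consensus rule; the point of the sketch is the ordering of the steps, in which a high-level score decides which low-level segments are allowed to vote.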

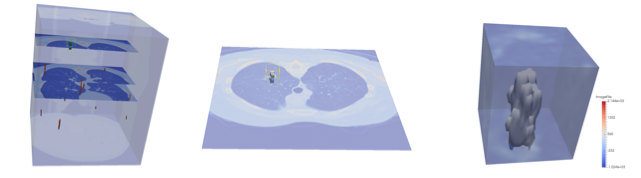

Finally, we have been in contact with Maverick Capital, a venture capital firm based in San Francisco that is interested in applying this technology to lung-nodule detection in cancer screening. This application is expected to grow significantly because recent medical research suggests that early screening for lung nodules may significantly reduce cancer mortality. Because manual review of scans by trained radiologists is both time-consuming and expensive, an automatic or semiautomatic technique could provide substantial financial and medical benefits. Together with Maverick Capital, we have obtained from the National Institutes of Health, under a data transfer agreement, a large (2-TB) data set containing lung scans from 300 patients. Figure 4 shows some promising initial results demonstrating how our technology can be adapted to this application.

Now that the project has concluded, we have explored several exit strategies to continue the research, move it into practice, and apply it to various internal and external use cases. We have prepared proposals for submission to the Department of Homeland Security and the Domestic Nuclear Detection Office. Furthermore, the intellectual property developed by this project has attracted interest in applying our technology in the medical field. Finally, we are actively exploring collaboration opportunities at LLNL, such as applying our segmentation technology to damage detection in images of National Ignition Facility laser glass.

Impact on Mission

Nondestructive evaluation, as a means of examining materials and identifying flaws and defects without damaging them, is closely aligned with the Laboratory’s national security mission and with its strategic focus area of stockpile stewardship, because it eliminates the need for destructive evaluation and disassembly of weapon components. Nondestructive evaluation is crucial in a number of mission-critical applications and is also of significant interest in medical and engineering applications. Our project advanced LLNL’s capabilities in x-ray imaging for nondestructive evaluation in areas ranging from biology to experimental physics, and it employed methodologies relevant to the Laboratory’s core competency in high-performance computing, simulation, and data science. We have developed a new, more integrated approach that has led to several new techniques, new tools that are actively used throughout the NNSA complex, and intellectual property that is in the process of being commercialized.

Conclusion

This project advanced the state of the art in industrial computed tomography segmentation for the nondestructive evaluation of materials. Using integrated segmentation algorithms coupled directly to reconstruction, together with new ensemble-based detection algorithms, we developed a new framework that is significantly more capable of distinguishing objects and materials in cluttered environments, in images corrupted by artifacts and with limited resolution. In particular, we have developed "segmentation ensembles," a fundamentally new approach to segmenting and classifying digital images in a wide variety of applications, which has led to multiple research publications, several new proposals, new collaborations, and an ongoing discussion with a venture capital company interested in commercializing the technology. In addition, this project has produced a patent application, "Identification of Uncommon Objects in Containers," and has been fundamental in developing the Livermore Tomography Tools framework, a site-wide technology that has attracted significant interest from other national laboratories. Furthermore, we have supported Livermore’s ongoing collaboration with the ALERT Center of Excellence at Northeastern University in Boston, Massachusetts, by contributing a highly successful entry to the segmentation effort the center is leading. At least in part because of this project, LLNL and its Center for Nondestructive Characterization remain heavily involved in shaping the research direction of the U.S. Transportation Security Administration through their collaboration with the Center of Excellence. Finally, we have developed a first version of the LENS (Livermore Ensemble Segmentation) framework to make our research easily accessible throughout the Laboratory.

Beyond the research and software development efforts described here, the intellectual property developed in this project has the potential to lead to new commercial opportunities and cooperative research and development agreements. In particular, we are working on extending LENS to automatic lung-nodule detection, which could significantly reduce the cost and increase the efficiency of lung cancer screening. We are currently preparing a federal business opportunity announcement to solicit additional outside partners interested in commercializing our technology. In addition to these technical accomplishments, this project employed three postdoctoral researchers who have since been converted to full-time staff positions and are in significant demand for their skills, knowledge, and expertise.

References

- Champley, K., and P.-T. Bremer, “Efficient and accurate correction of beam hardening artifacts,” 3rd Intl. Conf. Image Formation in X-Ray Computed Tomography, Salt Lake City, UT, June 22–25, 2014. LLNL-CONF-649613.

- Kim, H., J. J. Thiagarajan, and P.-T. Bremer, “Image segmentation using consensus from hierarchical segmentation ensembles,” IEEE Intl. Conf. Image Processing, Paris, France, Oct. 27–30, 2014. LLNL-PROC-652239.

- Kim, H., J. J. Thiagarajan, and P.-T. Bremer, “A randomized ensemble approach to industrial CT,” Proc. ICCV 2015, Intl. Conf. Computer Vision, Santiago, Chile, Dec. 13–16, 2015. LLNL-PROC-677352.

Publications and Presentations

- Bremer, P.-T., “Visual exploration of high-dimensional data through subspace analysis and dynamic projections,” Comput. Graph. Forum 34(3), 271 (2015). LLNL-CONF-668584. http://dx.doi.org/10.1111/cgf.12639

- Champley, K. M., and P.-T. Bremer, “Efficient and accurate correction of beam hardening artifacts,” 3rd Intl. Conf. Image Formation in X-Ray Computed Tomography, Salt Lake City, UT, June 22–25, 2014. LLNL-CONF-649613.

- Kim, H., J. J. Thiagarajan, and P.-T. Bremer, “A randomized ensemble approach to industrial CT,” Proc. ICCV 2015, Intl. Conf. Computer Vision, Santiago, Chile, Dec. 13–16, 2015. LLNL-PROC-677352.

- Kim, H., J. J. Thiagarajan, and P.-T. Bremer, “Image segmentation using consensus from hierarchical segmentation ensembles,” IEEE Intl. Conf. Image Processing, Paris, France, Oct. 27–30, 2014. LLNL-PROC-652239.

- Thiagarajan, J. J., P.-T. Bremer, and K. N. Ramamurthy, “Understanding non-linear structures via inverse maps” (2014). LLNL-POST-652033.

- Thiagarajan, J. J., K. N. Ramamurthy, and P.-T. Bremer, “Multiple kernel interpolation for inverting non-linear dimensionality reduction and dimension estimation,” IEEE Intl. Conf. Acoustics, Speech and Signal Processing, Florence, Italy, May 4–9, 2014. LLNL-CONF-645866.

- Thiagarajan, J. J., et al., “Automatic image annotation using inverse maps from semantic embeddings,” IEEE Intl. Conf. Image Processing, Paris, France, Oct. 27–30, 2014. LLNL-CONF-650594.