Ming Jiang (16-ERD-036)

Project Description

Simulation workflows are highly complex and often require a manual tuning process that is cumbersome for users. Developing a simulation workflow is as much an art as a science: the user must find and adjust the right combination of parameters for the simulation to run to completion. Such workflows come into play in many codes, including arbitrary Lagrangian–Eulerian (ALE) hydrodynamics codes, which are often used to simulate fluid flows in shocked substances. These codes include (1) Livermore’s ALE3D, a three-dimensional ALE multiphysics numerical simulation code; (2) KULL, used to model high-energy-density physics; (3) BLAST, a finite-element code for shock hydrodynamics; and (4) HYDRA, used to model radiation hydrodynamics. In general, ALE codes require tuning a number of parameters that control how the computational mesh moves during the simulation. The process can be disruptive and time-consuming: a few hours of simulation can require many days of manual tuning. There is an urgent need to semi-automate this process to save users time and improve the efficiency of the codes.

To address this need, we plan to develop novel predictive analytics for simulations and an in situ infrastructure. The infrastructure would run the predictive analytics concurrently with the simulation to predict failures and dynamically adjust the workflow accordingly. Our goal is to predict simulation failures ahead of time and proactively avoid them to the extent possible. We will investigate supervised-learning algorithms to develop classifiers that predict simulation failures, using the simulation state as learning features, and flow analysis techniques to extract high-level flow features for these predictive analytics. In the same way, we will investigate supervised-learning algorithms to find the correlation between parameter adjustments and changes in the simulation state. Combining this correlation with the knowledge of which simulation states can lead to failures, we can generate workflows that systematically avoid those failures.
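The idea of running a failure predictor alongside the simulation and adjusting parameters in response can be illustrated with a toy control loop. The sketch below is not the project's infrastructure: `SimState`, `advance`, `predict_failure`, `run`, and every numeric constant are hypothetical, the "simulation" is a one-variable stand-in for mesh distortion, and a fixed threshold takes the place of a trained classifier.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class SimState:
    """Hypothetical, highly simplified simulation state."""
    step: int = 0
    distortion: float = 0.0  # toy mesh-distortion metric; >= 1.0 means failure
    relaxation: float = 0.0  # toy mesh-relaxation strength in [0, 1]


def advance(state: SimState) -> None:
    """Toy stand-in for one simulation cycle.

    Distortion grows by a fixed amount, then is damped by the relaxation.
    """
    state.step += 1
    state.distortion += 0.0625
    state.distortion *= 1.0 - 0.5 * state.relaxation


def predict_failure(state: SimState) -> bool:
    """Placeholder for a trained classifier acting on the simulation state.

    Assumption: a simple threshold replaces the learned model here.
    """
    return state.distortion > 0.7


def run(n_steps: int, in_situ: bool) -> Tuple[bool, int]:
    """Run the toy simulation; return (completed, last step reached)."""
    state = SimState()
    for _ in range(n_steps):
        if in_situ:
            # Consult the predictor before each cycle and adjust the
            # relaxation parameter only when a failure is anticipated.
            state.relaxation = 0.5 if predict_failure(state) else 0.0
        advance(state)
        if state.distortion >= 1.0:
            return False, state.step  # simulation "crashed"
    return True, state.step


print("without in situ control:", run(100, in_situ=False))
print("with in situ control:   ", run(100, in_situ=True))
```

With these toy constants, the uncontrolled run fails at cycle 16, while the controlled run completes all 100 cycles. In a real ALE code, both the state features and the parameter adjustment would be far richer than this single scalar.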

The planned research has the potential to significantly reduce the development time for simulation workflows and minimize user effort. It will address the urgent need to move toward semi-automating the pipeline for ALE simulations, thus enabling large-scale uncertainty quantification studies, which seek to reduce the uncertainties in computational and real-world applications. Our approach is based on analyzing where and when simulations fail and on determining how existing workflows can be changed to avoid those failures. We will leverage the expertise of simulation users by codifying their knowledge and experience into this framework. We expect the predictive analytics work to yield novel machine-learning algorithms designed specifically for predicting and avoiding simulation failures, and the in situ infrastructure to provide a novel way of integrating traditional high-performance computing simulations with cutting-edge data analytics.

Mission Relevance

Our effort in predictive analytics and in situ infrastructure supports the Laboratory’s core competency in high-performance computing, simulation, and data science. ALE simulations are of vital importance in numerous Laboratory applications, particularly in the stockpile stewardship science strategic focus area. Our work will directly benefit Livermore’s ALE codes, which support numerous stockpile stewardship efforts, and will enable large-scale uncertainty quantification studies using ALE simulations.

FY16 Accomplishments and Results

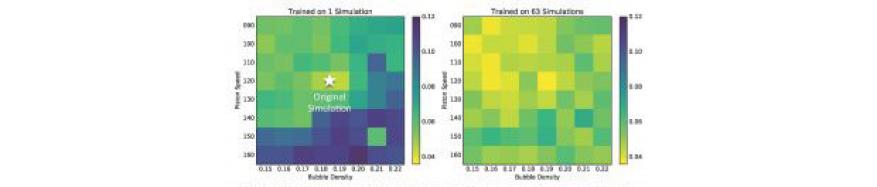

In FY16 we (1) completed initial integration of a unit-testing framework for LAGER—the Learning Algorithm-Generated Empirical Relaxer, our machine-learning algorithm code to handle simulation failures—as well as the data-generation framework for our KULL test cases (see figure); (2) extended LAGER’s random forest algorithm for KULL to use both geometric and physical learning features; (3) developed initial visual analytics using parallel coordinates for high-dimensional training data and an interactive tree visualizer for random forest model output; (4) used Apache Spark, which is an open-source cluster computing framework, and Zeppelin, which is a Web-based notebook for interactive data analytics, to complete the initial big-data-analytics framework for data aggregation of learning features from simulation data; (5) developed an initial approach to validate metrics for the quality of a mesh relaxation strategy using convergence analysis through the Miranda radiation hydrodynamics code designed for large-eddy simulation of multicomponent flows with turbulent mixing; and (6) completed a survey of the workflow system and developed a proxy application to evaluate the Pegasus workflow management system by using BLAST and VisIt, an open-source interactive parallel visualization and graphical analysis tool for viewing scientific data.
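As a rough illustration of item (2), the following is a minimal pure-Python random forest (bootstrap sampling, a random feature subset at each split, majority voting) trained on a mix of geometric and physical features. It is a sketch under stated assumptions, not LAGER's implementation: the feature names, the synthetic labeling rule, and all function names and hyperparameters are hypothetical and chosen only to keep the example self-contained.

```python
import random
from collections import Counter

# Hypothetical per-zone learning features: two geometric, one physical.
FEATURES = ("aspect_ratio", "skewness", "pressure_gradient")


def make_data(n, seed):
    """Generate synthetic samples; the labeling rule is invented for this sketch."""
    rng = random.Random(seed)
    rows, labels = [], []
    for _ in range(n):
        row = (rng.uniform(1.0, 10.0), rng.uniform(0.0, 1.0), rng.uniform(0.0, 5.0))
        rows.append(row)
        labels.append(1 if row[0] > 6.0 or row[2] > 3.5 else 0)  # 1 = "failure"
    return rows, labels


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels, features):
    """Return (impurity, feature, threshold) with the lowest weighted Gini, or None."""
    best = None
    for f in features:
        # Dropping the largest value keeps the right-hand branch non-empty.
        for thr in sorted({r[f] for r in rows})[:-1]:
            left = [y for r, y in zip(rows, labels) if r[f] <= thr]
            right = [y for r, y in zip(rows, labels) if r[f] > thr]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, thr)
    return best


def build_tree(rows, labels, rng, depth=0, max_depth=4, n_try=2):
    """Grow one tree, considering a random subset of n_try features per split."""
    if depth == max_depth or len(set(labels)) == 1:
        return majority(labels)
    split = best_split(rows, labels, rng.sample(range(len(rows[0])), n_try))
    if split is None:
        return majority(labels)
    _, f, thr = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= thr]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > thr]
    return (f, thr,
            build_tree([r for r, _ in left], [y for _, y in left],
                       rng, depth + 1, max_depth, n_try),
            build_tree([r for r, _ in right], [y for _, y in right],
                       rng, depth + 1, max_depth, n_try))


def predict_tree(node, row):
    # Internal nodes are tuples; leaves are plain class labels.
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if row[f] <= thr else right
    return node


def fit_forest(rows, labels, n_trees=11, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(rows)) for _ in rows]  # bootstrap sample
        forest.append(build_tree([rows[i] for i in idx], [labels[i] for i in idx], rng))
    return forest


def predict_forest(forest, row):
    """Majority vote over all trees."""
    return majority([predict_tree(tree, row) for tree in forest])


train_rows, train_labels = make_data(100, seed=1)
forest = fit_forest(train_rows, train_labels)
for zone in [(9.5, 0.5, 4.8), (1.5, 0.5, 0.5)]:
    print(dict(zip(FEATURES, zone)), "->", predict_forest(forest, zone))
```

For real training data, an established library implementation of random forests would be the more practical choice; the hand-rolled version here only makes the mechanics visible.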

Publications and Presentations

- Jiang, M., et al., A supervised learning framework for arbitrary Lagrangian-Eulerian simulations. 15th IEEE Intl. Conf. Machine Learning and Applications, Anaheim, CA, Dec. 18–20, 2016. LLNL-CONF-698953.