Brian Van Essen (16-ERD-039)

Abstract

Addressing many of our country’s national security priorities depends on discovering complex patterns in massive, multimodal data and then using these patterns to guide decisions. This methodology is relevant to countering threats from weapons of mass destruction, cybersecurity, design of medical countermeasures, and protection of critical U.S. infrastructure. Vast amounts of labeled data and the computational horsepower of general-purpose computing on graphics processing units have driven recent breakthroughs in the applicability of deep learning to image processing, natural language processing, and recommendation/decision engines. To extend these breakthroughs to scientific applications and many national security applications, we need another surge in bringing even more computational horsepower to bear on training deep neural networks with massive amounts of unlabeled data. This project focused on developing a scalable deep learning training toolkit optimized to use the unique resources of high-performance computing systems. Additionally, this project explored the new capabilities of neuromorphic computer architectures, that is, architectures that mimic the neuro-biological architectures present in the nervous system, and how they can be applied to applications of interest to the DOE. Finally, we extended the Livermore Brain (big artificial neural network) toolkit to create a more fully featured and scalable deep learning framework.

Background and Research Objectives

As multimodal data continue to be collected in the form of pictures, video, audio, text, and other sensor types, the need to quickly identify key patterns and the associated data only grows. At the same time, new developments in computing algorithms and architectures are opening new possibilities to address these needs. Our project focused on developing scalable learning architectures for large-scale computational learning systems to help analysts quickly discover and explain patterns in their large collections of multimodal data. This deep-learning system could enable analysts to rapidly assess multiple hypotheses by helping them to find a more complete set of relevant data spanning all modalities and to explain why the data are related, freeing analysts to reason at higher levels of abstraction and helping them to be more effective at connecting the dots. Specifically, our goal was to scale up existing deep-learning algorithms to run on high-performance computing platforms. In addition, we investigated emerging computing architectures that are based on massive parallelism at extremely low power and that implement artificial neural networks in hardware similar to biological brains. The latest generation of artificial neural networks can be trained with many hidden layers. Classification systems using deep networks learn directly from raw signals, creating an increasingly abstract and important set of patterns or “features” in every successive network layer.

In general, state-of-the-art deep learning toolkits are not optimized for the training of large-scale deep neural networks, or for the use of high-performance-computing-specific technologies. The majority of the publicly available or open-source toolkits (including Caffe, Keras, Theano, Torch, TensorFlow, CNTK) are only optimized to take advantage of multiple graphics processing units in the shared memory space of a single compute node. As a result, the size of the models that they can train is limited to the available memory on a single node and its attached graphics processing units. Additionally, these toolkits have minimal ability to scale up the rate at which they can process large data sets. A small number of these toolkits (TensorFlow, CNTK, FireCaffe, Intel-Caffe) provide limited support for modest data parallelism through the implementation of centralized parameter servers or distributed parameter sets. These toolkits can increase the rate of processing a data set to a modest degree (up to about eight-way parallelism), but the provided techniques are limited by the challenges of training models with large mini-batches (e.g., poor convergence).

General model parallelism is unavailable in any publicly known toolkit, but both Torch and TensorFlow do provide a very limited form of model parallelism. These tools allow each layer to be distributed to an independent node and thus train on larger models. However, the inherent loop-carried dependence of stochastic gradient descent, which is a method for updating a set of parameters in an iterative manner to minimize an error function, prevents these toolkits from providing any performance improvement with the inclusion of additional resources. The only previously known toolkit that provided general model parallelism was the Stanford DeepPy framework.1,2 Previously, we had used the Livermore Brain toolkit to train an unsupervised neural network on the YFCC100M dataset, but found that the toolkit would not scale beyond 100 graphics-processing-unit-enabled high-performance-computing nodes.3

The goal of our project was to research and develop new high-performance-computing-enabled algorithms for training deep neural networks and to explore the efficacy of neuromorphic architectures for low-power deployment of DOE-relevant applications. This goal had three sub-objectives, all of which we achieved in the course of this project: (1) to train large-scale, fully connected, deep neural networks; (2) to enable data-parallel training algorithms that can scale to high-performance-computing-class systems; and (3) to explore the efficacy of neural-synaptic hardware architectures for executing large, deep-neural-network models.

Scientific Approach and Accomplishments

The prototype of the Livermore Brain framework had several capabilities: (1) model parallelism; (2) limited data parallelism through large mini-batches; (3) fully connected network layers; (4) limited unsupervised feature learning with single-layer auto-encoders; and (5) supervised network training.4 It used the Elemental distributed-memory dense linear algebra library to achieve true model parallelism by distributing the weight and activation matrices for each layer in the neural network across multiple message-passing-interface ranks.5 Our work transformed this prototype toolkit into a more mature, open-source, deep-learning framework with support for: (1) large-scale data parallelism; (2) graphics processing unit acceleration; (3) additional layer types and training features; (4) parallel checkpoint/restore; (5) distributed high-performance multi-stream input/output; and (6) integration with Google’s TensorBoard neural network visualization tool.
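The idea of distributing a layer's weight matrix across ranks can be illustrated with a minimal sketch. It is not the Livermore Brain or Elemental implementation: the ranks are simulated in a single process, the contiguous row-block split is an assumption chosen for readability (Elemental's actual distribution scheme is not described here and likely differs), and every function name is hypothetical.

```python
# Hypothetical sketch: model parallelism for one fully connected layer.
# Each simulated "rank" owns a contiguous block of rows of the weight matrix.


def partition_rows(num_rows, num_ranks):
    """Return (start, stop) row ranges, one per rank, as evenly as possible."""
    base, extra = divmod(num_rows, num_ranks)
    ranges, start = [], 0
    for rank in range(num_ranks):
        stop = start + base + (1 if rank < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def local_forward(weight_rows, x):
    """Compute the slice of W @ x that corresponds to the given rows of W."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight_rows]


def model_parallel_forward(weights, x, num_ranks):
    """Simulate a forward pass with W split row-wise across ranks."""
    output = []
    for start, stop in partition_rows(len(weights), num_ranks):
        # In a real MPI code each rank would hold only weights[start:stop];
        # concatenating the slices here stands in for an all-gather.
        output.extend(local_forward(weights[start:stop], x))
    return output


W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
x = [1.0, -1.0]
serial = local_forward(W, x)
parallel = model_parallel_forward(W, x, num_ranks=2)
print(serial == parallel)
```

Because no rank needs the full matrix, the largest trainable layer is bounded by the aggregate memory of the ranks rather than by a single node.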

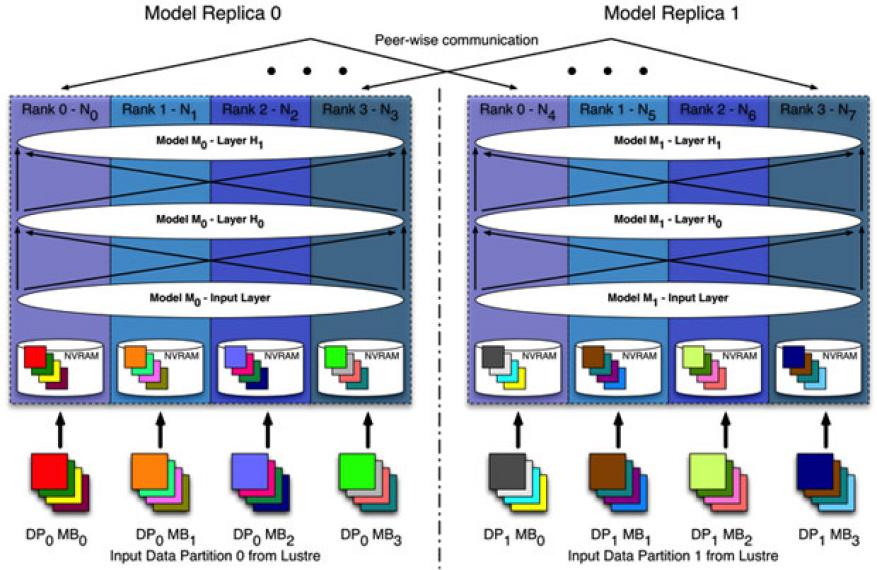

We developed a novel communication infrastructure, shown in Figure 1, that allowed us to effectively combine both model and data parallelism. The standard approach for data parallelism is a synchronous (or asynchronous) parameter server.1 Leveraging the high-bandwidth, low-latency, InfiniBand interconnect of high-performance computing systems, we developed a scalable, peer-wise communication infrastructure for distributed stochastic gradient descent. The communication model allows for message-passing-interface-based reductions across ranks in each model and is extensible to allow inter-model communication optimizations. When no inter-model optimization is performed, this framework provides a simple summation, which creates a distributed mini-batch stochastic gradient descent algorithm. Independent of our efforts, FireCaffe demonstrated a similar distributed mini-batch stochastic gradient descent approach, also using message-passing-interface tree-based reductions, in an extension to the Caffe toolkit.6
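The simple-summation case can be sketched as follows. This is a single-process illustration, not the project's communication code: `allreduce_sum` merely stands in for a message-passing-interface reduction, and the toy linear model, data, and learning rate are assumptions made for the example.

```python
# Hypothetical sketch: distributed mini-batch SGD where model replicas
# sum their gradients. Toy model: y = w * x with squared error.


def local_gradient(w, samples):
    """Sum of d/dw (w*x - y)^2 over the samples held by one replica."""
    return sum(2.0 * (w * x - y) * x for x, y in samples)


def allreduce_sum(values):
    """Stand-in for an MPI all-reduce: every replica receives the total."""
    total = sum(values)
    return [total for _ in values]


def distributed_sgd_step(w, shards, lr):
    """One step: local gradients, summation across replicas, identical update."""
    local = [local_gradient(w, shard) for shard in shards]
    summed = allreduce_sum(local)
    batch_size = sum(len(shard) for shard in shards)
    # Every replica applies the same averaged gradient, so weights stay in sync.
    return [w - lr * g / batch_size for g in summed]


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # generated from y = 2x
shards = [data[:2], data[2:]]  # two replicas, two samples each
w = 0.0
for _ in range(200):
    w = distributed_sgd_step(w, shards, lr=0.01)[0]
print(round(w, 4))
```

Summing the per-replica gradients yields the same update as a single replica processing the combined mini-batch, which is why adding replicas increases the effective mini-batch size.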

Our inter-model communication framework was designed to allow different optimization techniques to be applied to the stochastic-gradient-descent gradient updates exchanged between models. Using the framework, we implemented two state-of-the-art quantization techniques (one-bit quantization and threshold quantization) and developed our own novel quantization technique combining the best of these two methods. Our adaptive quantization is tuned for high-performance computing systems, leverages our novel message-passing-interface-reduction framework, and outperforms both the one-bit and threshold quantization approaches.9 Using this communication framework, we scaled up the Livermore Brain to 192 nodes of the Livermore Catalyst cluster, with good performance. Furthermore, we demonstrated that the framework can be effectively used to maximize performance by tuning the balance of model versus data parallelism, given a fixed number of resources and problem size. This communication framework provides a capability that is unique within the deep learning community and will be critical for scaling up to Sierra/CORAL-class architectures.
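The two baseline techniques can be sketched in simplified form. Both functions follow the schemes as they are commonly described (one bit per gradient entry, or only entries whose magnitude reaches a threshold, each with the quantization error carried forward to the next step). They are not the project's implementations and do not reproduce the adaptive quantization, whose details are not given here. All names and the example values are hypothetical.

```python
# Hypothetical sketch: two gradient quantization schemes with error feedback.


def one_bit_quantize(grad, residual):
    """Quantize each entry to one bit, carrying the error to the next step."""
    corrected = [g + r for g, r in zip(grad, residual)]
    pos = [v for v in corrected if v >= 0.0]
    neg = [v for v in corrected if v < 0.0]
    # Reconstruction values: mean of the non-negative and of the negative entries.
    pos_mean = sum(pos) / len(pos) if pos else 0.0
    neg_mean = sum(neg) / len(neg) if neg else 0.0
    bits = [v >= 0.0 for v in corrected]
    decoded = [pos_mean if b else neg_mean for b in bits]
    new_residual = [v - d for v, d in zip(corrected, decoded)]
    return bits, decoded, new_residual


def threshold_quantize(grad, residual, tau):
    """Send only entries whose magnitude reaches tau, encoded as +tau or -tau."""
    corrected = [g + r for g, r in zip(grad, residual)]
    sent = {}
    new_residual = list(corrected)
    for i, v in enumerate(corrected):
        if abs(v) >= tau:
            sent[i] = tau if v > 0.0 else -tau
            new_residual[i] = v - sent[i]  # unsent remainder is kept locally
    return sent, new_residual


grad = [0.5, -0.2, 0.1, -0.7]
zeros = [0.0] * len(grad)
bits, decoded, res1 = one_bit_quantize(grad, zeros)
sent, res2 = threshold_quantize(grad, zeros, tau=0.4)
print(bits)
print(sorted(sent))
```

In both cases the residual preserves what was not transmitted, so the information is delayed rather than discarded. One-bit quantization sends a dense but tiny message, while threshold quantization sends a sparse one whose size depends on the chosen threshold.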

In addition to the work on integrating both model and data parallelism, a significant part of this project focused on transitioning the prototype of the Livermore Brain into a working toolkit. To provide a more extensible and maintainable platform, the entire codebase was revamped to make it easier to incorporate new layer types, data readers, and neural network training optimizations. Specifically, we added support for:

- Stochastic-gradient-descent-based optimizers: momentum, Nesterov, Adagrad, RMSprop, and Adam

- Regularization techniques: dropout

- Network layers: convolution, pooling, supervised/unsupervised targets, and regression targets

- Training algorithms: multi-level greedy layer-wise autoencoders

- Parallel, distributed input/output readers that leverage node-local, non-volatile, random-access memory

- Parallel checkpoint and recovery

- Network exploration and visualization: TensorBoard integration

- Callback support: debugging, summarization, performance statistics, and dumping internal matrices
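As an illustration of two of the optimizers listed above, the following sketch shows the textbook update rules for classical momentum and Adam on a scalar parameter. It is not taken from the Livermore Brain source; the function names, hyperparameter defaults, and toy objective are assumptions.

```python
# Hypothetical sketch: textbook momentum and Adam updates for one scalar.
import math


def momentum_step(param, grad, velocity, lr=0.1, mu=0.9):
    """Classical momentum: accumulate a velocity, then move along it."""
    velocity = mu * velocity - lr * grad
    return param + velocity, velocity


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; t is the 1-based step count used for bias correction."""
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    return param - lr * m_hat / (math.sqrt(v_hat) + eps), m, v


# Toy objective f(w) = (w - 3)^2 with gradient 2 * (w - 3).
w, vel = 0.0, 0.0
for _ in range(300):
    w, vel = momentum_step(w, 2.0 * (w - 3.0), vel)
print(round(w, 3))
```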

Both convolutional and pooling layers support the use of multiple graphics processing units per node, typically one unit per message-passing-interface rank. These layers also support local data parallelism, internal to a single model parallel instance. The convolutional and pooling neural network filters are replicated on each message-passing-interface rank that is training a single model instance and the current mini-batch is evenly distributed across the ranks. This allows multiple graphics processing units on multiple nodes to be used for strong scaling mini-batch processing time. Updates to the convolutional and pooling kernels are synchronized within each model instance. Therefore, a convolutional neural network can easily have three levels of nested parallelism: (1) top-level data parallelism, in which multiple instances of a model are trained on independent partitions of the training data; (2) mid-level model parallelism, in which each model instance is partitioned and trained across multiple high-performance computing nodes; and (3) low-level data parallelism, in which each mini-batch within a model instance is distributed across multiple nodes and multiple graphics-processing units.
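The nesting of these levels can be made concrete with a small sketch that maps global ranks to model instances and assigns training samples. The layout rule (consecutive ranks form one model instance) and all names and sizes are assumptions for illustration, not the toolkit's actual rank mapping.

```python
# Hypothetical sketch: mapping ranks and samples onto nested parallelism.


def rank_layout(world_size, ranks_per_model):
    """Map each global rank to (model_instance, rank_within_model)."""
    if world_size % ranks_per_model != 0:
        raise ValueError("world_size must be a multiple of ranks_per_model")
    return {r: divmod(r, ranks_per_model) for r in range(world_size)}


def split_evenly(items, parts):
    """Split a list into contiguous chunks whose sizes differ by at most one."""
    base, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for p in range(parts):
        stop = start + base + (1 if p < extra else 0)
        chunks.append(items[start:stop])
        start = stop
    return chunks


def assign_samples(sample_ids, world_size, ranks_per_model):
    """Top level: one data partition per model instance.
    Low level: each instance's share is split across its own ranks.
    (Mid-level model parallelism would partition the fully connected
    layers across the same ranks; it is not modeled here.)"""
    num_models = world_size // ranks_per_model
    per_model = split_evenly(sample_ids, num_models)
    assignment = {}
    for rank, (model, local) in rank_layout(world_size, ranks_per_model).items():
        assignment[rank] = split_evenly(per_model[model], ranks_per_model)[local]
    return assignment


assignment = assign_samples(list(range(16)), world_size=8, ranks_per_model=4)
for rank in sorted(assignment):
    print(rank, assignment[rank])
```

Changing `ranks_per_model` for a fixed `world_size` trades model parallelism against top-level data parallelism, which is the balance the framework allows to be tuned.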

This multi-tiered approach to model and data parallelism allows the distribution of compute resources to be optimized for the size of the neural network model that is being trained and the volume of training data. To broaden the impact of the Livermore Brain, we added support to read the Pilot 1 data of the Biological Applications of Advanced Strategic Computing program and released the neural network under the Apache open source license for collaboration throughout the DOE.

In our work we also explored the efficacy of using neuromorphic architectures for problems of interest to the DOE and the scalability of the current generation neuro-synaptic accelerators as embodied by the IBM TrueNorth architecture. This project was able to make significant headway into four application areas: (1) image processing; (2) combined machine learning and high-performance computing simulations; (3) image reconstruction; and (4) heuristic graph optimizations.

We developed neuromorphic versions of neural networks that were able to identify automobiles in the center of cluttered overhead aerial imagery and welding defects in a selective laser additive manufacturing process.10 These networks performed on par with their traditional neural network implementations but with significantly less energy consumption. Specializing these neural network models for the TrueNorth architecture required adapting the network to the limitations of the IBM TrueNorth toolchain and the constraints of the TrueNorth architecture. We were also able to implement a scalable version of a neural network that would propose bounding boxes for the primary object in an image, based on the region-based convolutional network and "you only look once" network architectures.11,12 This neural network provided an excellent example of the scalability of the TrueNorth architecture, as it was able to be seamlessly scaled up to provide higher quality region proposals at a cost of increased neuromorphic hardware.

Additionally, we were able to develop the low-level neuromorphic hardware description for a convolution-based recurrent neural network. These low-level components will be used to implement a deep neural network that would be trained via reinforcement learning, which can identify and correct mesh tangling events in arbitrary Lagrangian-Eulerian simulation codes. This work was done in collaboration with the Deep Reinforcement Learning for Optimizing Simulation Workflows project.

To explore neuromorphic applications that are not derived from neural network models, we developed the low-level hardware description for both an image reconstruction application based on sparse coding and a heuristic graph optimization based on quadratic unconstrained binary optimization. Both of these implementations are preliminary proof-of-concept projects that demonstrate feasibility but require substantial effort to achieve the necessary scaling to make them practical for any significant problem. Specifically, the sparse coding implementation used reconstruction dictionaries composed of nine 3x3 atoms each and the quadratic unconstrained binary optimization algorithm can find solutions on graphs of up to 16 nodes. Future research efforts will extend this work to develop more scalable solutions.
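For readers unfamiliar with quadratic unconstrained binary optimization, the sketch below encodes a graph problem as a matrix Q and minimizes the sum of Q[i][j] * x[i] * x[j] over binary vectors x. Maximum cut is used only as an assumed example, since the specific graph problem is not stated here, and the solver is a plain exhaustive search on a conventional processor rather than the neuromorphic heuristic. At 16 nodes the search space is 2^16 = 65,536 assignments, so exhaustive search remains a practical reference at the scale reported above.

```python
# Hypothetical sketch: max-cut written as a QUBO and solved by brute force.
from itertools import product


def maxcut_qubo(num_nodes, edges):
    """Build an upper-triangular Q whose minimum energy is -(max cut size)."""
    Q = [[0.0] * num_nodes for _ in range(num_nodes)]
    for i, j in edges:
        # Edge (i, j) is cut when x_i + x_j - 2*x_i*x_j == 1; negate to minimize.
        Q[i][i] -= 1.0
        Q[j][j] -= 1.0
        a, b = min(i, j), max(i, j)
        Q[a][b] += 2.0
    return Q


def qubo_energy(Q, x):
    """Evaluate sum over i <= j of Q[i][j] * x[i] * x[j]."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(i, n))


def solve_qubo_brute_force(Q):
    """Try every binary assignment; feasible only for small problems."""
    best_x, best_e = None, float("inf")
    for x in product((0, 1), repeat=len(Q)):
        e = qubo_energy(Q, x)
        if e < best_e:
            best_x, best_e = x, e
    return best_x, best_e


cycle = [(0, 1), (1, 2), (2, 3), (3, 0)]  # a 4-node ring
best_x, best_e = solve_qubo_brute_force(maxcut_qubo(4, cycle))
print(best_x, best_e)
```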

Impact on Mission

The ability to rapidly learn patterns from massive data, enabled by the large-scale computational learning architectures we developed, is broadly applicable and important for many Livermore missions and directly supports our core competency in high-performance computing, simulation, and data science. This project is also relevant to Livermore's strategic focus area of cybersecurity, space, and intelligence, enabling the training and low-power deployment of high-quality, deep-learning models. In addition, this project impacts other mission-relevant applications, including the analysis of data for biosecurity and critical infrastructure-protection programs, and the optimization of advanced manufacturing systems.

Conclusion

We successfully delivered on the goals of creating a scalable deep learning training toolkit and mapping out the capabilities of the IBM TrueNorth neurosynaptic architecture for DOE applications, demonstrating that the TrueNorth architecture is well suited for image processing applications where energy-efficient, low-power solutions are required (e.g., triage of large data streams near the point of collection). We have also created prototype hardware that establishes a roadmap for using neuromorphic architectures in combined high-performance computing and machine-learning applications, as well as heuristic graph optimizations. These experiments and lessons learned have paved the way for the research direction of the new Beyond Moore’s Law program of Livermore's Advanced Simulation and Computing group. The Livermore Brain toolkit is a key component of multiple exascale computing projects and programmatic efforts throughout the Laboratory. Our work on mapping DOE applications to neuromorphic architectures has helped inform the DOE’s strategic plans for next-generation high-performance computing systems, giving rise to a push for cognitive computing platforms and laying the foundation of a roadmap for the use of non-von Neumann architectures within the Advanced Simulation and Computing programs. Overall, these capabilities provide a foundation for new research on massive-scale deep learning and non-von Neumann computing that will impact national security, nuclear nonproliferation, counter-weapons-of-mass-destruction programs, and the application of high-performance computing for precision medicine under the Presidential Precision Medicine Initiative and Cancer Moonshot Initiative.

Our work has spearheaded new research on both scalable deep-learning training techniques and neuromorphic computing architectures. These efforts and investments were instrumental in recruiting new talent and generating interest in the Laboratory’s research portfolio. We recruited two full-time research staff members and five PhD summer students, and established collaborations with four research universities: University of Illinois at Urbana-Champaign, University of Dayton in Ohio, University of Wisconsin–Madison, and Syracuse University in New York. This project also helped us create new research ties to the U.S. Army Research Laboratory in Adelphi, Maryland; the Air Force Research Laboratory headquartered at Wright-Patterson Air Force Base near Dayton, Ohio; NVIDIA in Santa Clara, California; and IBM Research-Almaden in San Jose, California.

References

- Le, Q., et al., “Building high-level features using large scale unsupervised learning.” Proc. 29th Intl. Conf. Machine Learning, p. 8595. IEEE, New York, NY (2012). http://dx.doi.org/10.1109/ICASSP.2013.6639343

- Coates, A., et al., “Deep learning with COTS HPC systems.” ICML 28 (2013).

- Ni, K., et al., Large-scale deep learning on the YFCC100M dataset. CoRR, arXiv:1502.03409 (2015). LLNL-CONF-661841. http://arxiv.org/abs/1502.03409

- Van Essen, B., et al., “LBANN: Livermore big artificial neural network HPC toolkit.” Proc. Workshop on Machine Learning in High-Performance Computing Environments, ACM, New York, NY (2015). LLNL-CONF-677443B. http://dx.doi.org/10.1145/2834892.2834897

- Poulson, J., et al., “Elemental: A new framework for distributed memory dense matrix computations.” ACM Trans. Math. Software 39(2), 13:1 (2013). http://dx.doi.org/10.1145/2427023.2427030

- Iandola, F. N., et al., FireCaffe: Near-linear acceleration of deep neural network training on compute clusters. CoRR, arXiv:1511.00175 (2015). http://arxiv.org/abs/1511.00175

- Seide, F., et al., “1-bit stochastic gradient descent and its application to data-parallel distributed training of speech DNNs.” Proc. INTERSPEECH 2014, WikiCFP (2014).

- Strom, N., “Scalable distributed DNN training using commodity GPU cloud computing.” Proc. INTERSPEECH, Technical University of Berlin, Berlin, Germany (2015).

- Dryden, N., et al., Communication quantization for data-parallel training of deep neural networks. Machine Learning in HPC Environments, Salt Lake City, UT, Nov. 13–18, 2016. LLNL-CONF-700919.

- Sawada, J., et al., TrueNorth ecosystem for brain-inspired computing: Scalable systems, software, and applications. Supercomputing 2016, Salt Lake City, UT, Nov. 13–18, 2016. LLNL-CONF-712782.

- Girshick, R., et al., "Region-based convolutional networks for accurate object detection and segmentation." IEEE Trans. Pattern Anal. Mach. Intell. 38(1), 142 (2016). http://dx.doi.org/10.1109/TPAMI.2015.2437384

- Redmon, J., et al., You only look once: Unified, real-time object detection. CoRR, arXiv:1506.02640 (2016). http://arxiv.org/abs/1506.02640

Publications and Presentations

- Dryden, N., et al., Communication quantization for data-parallel training of deep neural networks. Machine Learning in HPC Environments, Salt Lake City, UT, Nov. 13–18, 2016. LLNL-CONF-700919.

- Sawada, J., et al., TrueNorth ecosystem for brain-inspired computing: Scalable systems, software, and applications. Supercomputing 2016, Salt Lake City, UT, Nov. 13–18, 2016. LLNL-CONF-712782.