Peter Lindstrom | 18-FS-018

Overview

Data movement is increasingly becoming the dominant bottleneck in high-performance computing (HPC). The vast majority of such data is numerical in nature and is most often represented in floating point format. One recently proposed strategy for mitigating the data movement bottleneck is inline compression, which exploits redundancy in the data, decompressing it on demand only when needed. By partitioning the data into small chunks, each iteration of a computation decompresses, computes on, and then compresses each updated chunk. The cost of compression and decompression can be significant, however. The goal of this project was to investigate the possibility of avoiding full decompression by performing computations on the compressed or only partially decompressed data. Results show that doing so not only improves performance but sometimes substantially increases accuracy. Using the zfp floating-point compressed representation, we demonstrated performance and accuracy improvements in simple computational kernels involving small multidimensional blocks of data.

Background and Research Objectives

The idea of digital data compression to boost memory bandwidth and capacity is not new. Such data compression, whether done at the software or hardware level, is employed in image, video, and audio transmission and in input/output (I/O) subsystems. In inline compression, small pieces of data are decompressed on demand as the application is running and accessing the data, hiding the fact that the data is even stored in a compressed form. This is in contrast to streaming compression, in which the entire dataset is compressed once as a whole, usually just before writing it to disk. A popular example of hardware-supported inline compression is texture compression (Beers et al. 1996, Nystad et al. 2012), which partitions an image into small blocks of pixels (e.g., 4 x 4 pixels; see figure) and compresses each block to a fixed number of bits, enabling repeated random access to pixels at block granularity.

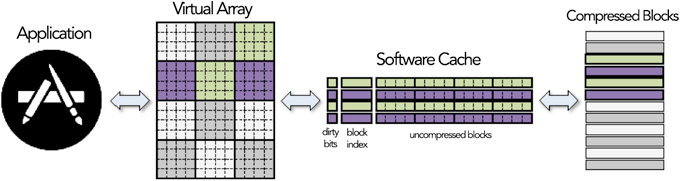

Schematic diagram of the process of creating a two-dimensional zfp compressed array. The array is partitioned into discrete blocks of 4-X-4-pixel floating-point values that are subsequently cached and compressed.

Surprisingly, with the petabyte- to exabyte-sized datasets being generated today in HPC simulations, observations, and experiments, relatively little work has been done on inline floating-point compression, and it may be the case that no hardware has been built to compress such numerical data. Our own zfp compressor (Lindstrom 2014, Diffenderfer et al. 2018) is the first attempt to support inline compression of floating-point arrays and builds on the same principle as texture compression. The zfp compressor partitions d -dimensional arrays into discrete blocks of 4 d values and applies a sequence of steps to perform the compression, each transforming the binary representation of the values to one that ultimately has most of its redundant information removed.

To perform computations on zfp arrays (e.g., to compute the sum of two matrices represented as two-dimensional arrays), corresponding blocks are first fully decompressed to their IEEE Standard 754 floating-point representation. After performing the arithmetic using built-in hardware floating-point instructions, the sum, which is stored in a separate block of floating-point values, is fully compressed back to memory. The performance cost of such complete compression and decompression can be high, and the conversion (in both directions) between zfp’s internal representation and the IEEE Standard 754 floating-point representation often introduces round-off errors. Thus, it would be preferable to perform computations directly on the compressed representation.

Compressed representations remove any redundant information that can be inferred; therefore, the meaning of a bit that appears late in the bit stream depends on the values of earlier bits. Such non-linearities generally preclude direct operations on the compressed bit stream. However, several of zfp’s compression steps are linear in nature. Such linearities have recently been exploited in homomorphic encryption (Gentry 2009) but they have seen little use in data compression.

The objective of this project was twofold: (1) investigate how computations can be accelerated by avoiding redundant decompression and compression steps; and (2) investigate how accuracy can be improved by avoiding or delaying lossy conversion steps between IEEE Standard 754 floating-point representation and zfp. Our findings for common operations on blocks (such as reductions, maps, arithmetic, and finite differences) serve as foundations for more complex numerical computations. The results of our research indicate that performing computations on compressed data that has been only partially decompressed will enable significant performance improvements in some cases (i.e., as much as a seven-fold speedup), as well as a significant improvement in accuracy in others.

Impact on Mission

This study advances the science, technology, and engineering competencies that are the foundation of the NNSA mission. Specifically, it enhances the Laboratory's core competencies in high-performance computing, simulation, and data science.

HPC remains one of the core disciplines at Lawrence Livermore National Laboratory. With the end of Moore’s law and the increasing cost of data movement, coupled with increasing contention for precious memory bandwidth due to dramatic increases in core count, the problem of data movement is likely to be around for the foreseeable future. The use of data compression as a strategy to mitigate data movement is ubiquitous, but hardware implementation has largely been limited to consumer electronics (e.g., smart phones). Because data volumes in HPC usually dwarf those generated by consumer devices, we expect hardware-compression support to be adopted in HPC soon.

This study is the first-ever on how to accelerate and increase the accuracy of computations applied to data stored in compressed form. The reduction in precision achieved via compression and related techniques may (through algorithmic solutions) save the Laboratory's time and funding in comparison to solving the same problem via brute force through procurement of more powerful (and more expensive) hardware. In particular, we expect substantial savings in memory footprint, memory and communication bandwidth, I/O time, and secondary scratch and archival storage through the use of compression, resulting in higher productivity, faster time to solution, and a substantial reduction in resource and power usage. This feasibility study is a small first step toward realizing compressed numerics. We expect that our preliminary results will serve to support our pursuit of in-depth research of this subject area in the future.

Conclusion

We have investigated techniques for performing computations on compressed data that has been only partially decompressed. These techniques will enable faster computations and improved accuracy by eliminating conversion steps that introduce errors. We observed seven-fold speedups and significant reductions in errors by avoiding or simplifying one or more steps in the zfp floating-point compressor. With an increasing appetite for lossless (and also lossy) compression, we expect increasing interest and support for continued research in this area.

References

Beers, A., et al. 1996. "Rendering from Compressed Textures." Proceedings of the 23rd Annual Conference on Computer Graphics and Interactive Techniques , New Orleans, LA, 373–378. doi: 10.1145/237170.237276.

Diffenderfer, J., et al. 2018. "Error Analysis of ZFP Compression for Floating-Point Data." SIAM Journal on Scientific Computing , May 2018 (in review).

Gentry, C. 2009. "Fully Homomorphic Encryption Using Ideal Lattices." Proceedings of ACM Symposium on Theory of Computing 2009 , Bethesda, MD, May 2009. doi: 10.1145/1536414.1536440.

Lindstrom, P. 2014. "Fixed-Rate Compressed Floating-Point Arrays." IEEE Transactions on Visualization and Computer Graphics 20(12): 2674–2683. doi: 10.1109/TVCG.2014.2346458.

Nystad, J., et al. 2012. "Adaptive Scalable Texture Compression." High Performance Graphics , 105–114. doi: 10.2312/EGGH/HPG12/105-114.